22 - Uniform continuity

Where we left off last time

What all did we say about continuity last time, and where were we going?

-

Continuity (recalling different characterizations of continuity): To say "the function $f: X \to Y$ is continuous" (where $X$ and $Y$ are metric spaces) means the following:

-

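Closeness (metric definition): Close enough points map to close points. At each $x \in X$, for every $\epsilon > 0$, there is a $\delta > 0$ such that, for $y \in X$, $d(x, y) < \delta$ implies $d(f(x), f(y)) < \epsilon$.

Remark. If I give you an $\epsilon$, then you can find me a $\delta$ such that anytime two points start within $\delta$ of each other they map to within $\epsilon$ of each other.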

-

Limit preservation (sequence definition): The function preserves limits of sequences. For every convergent sequence $x_n \to x$ in $X$, $f(x_n) \to f(x)$.

Remark. If you have a continuous function, then the image of a convergent sequence is still convergent, and it converges to the image of the limit.

-

Openness (open sets definition): Inverse images of open sets are open.

Remark. This is a completely topological definition of continuity.

-

Closedness (closed sets definition): Inverse images of closed sets are closed. If $C$ is closed in $Y$, then $f^{-1}(C)$ is closed in $X$.

Remark. This is intimately related to the result concerning open sets.

-

-

Consequences of open set characterization of continuity: We established the following results from last time due to the way we characterized continuity in terms of open sets:

- Compositions: The composition of continuous functions is continuous.

- Images and compactness: The image of a compact set is compact.

- Achieving maximums and minimums: A continuous real-valued function on a compact set must achieve its minimum and maximum.

Uniform continuity

What is uniform continuity? Why is it useful and what results can we make about it?

-

Uniform continuity (definition): Call $f: X \to Y$ uniformly continuous on $X$ if for all $\epsilon > 0$ there exists $\delta > 0$ such that for all $x \in X$ and for all $y \in X$, $d(x, y) < \delta$ implies that $d(f(x), f(y)) < \epsilon$.

This looks a lot like the definition of continuity, except that we're saying that the same $\delta$ works for every $x$. You're given some $\epsilon$, and the same $\delta$ works for every $x$ in $X$. For "regular continuity," recall that the point $x$ is specified before you even start the definition; that is, you are considering continuity at a point when looking at regular continuity. When you say something is continuous everywhere, what you mean is that for each point $x$ and for every $\epsilon$ there exists a $\delta$ (which may depend on $x$) such that blah blah, whereas now we're saying that for every $\epsilon$ there is a single $\delta$ that works for each point.

That's what it means to be uniform. It's like, if we're all wearing the same uniform, then we're wearing the same thing. So we have the same $\delta$. We're all wearing the same $\delta$.
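The difference between the two notions is only the order of the quantifiers, which may be easier to see side by side. This is just a restatement of the definitions, writing $d$ for the metric on both $X$ and $Y$ (an assumed shorthand):

```latex
\begin{align*}
\text{continuous on } X:\quad
  & \forall x \in X \;\; \forall \epsilon > 0 \;\; \exists \delta > 0 \;\; \forall y \in X:\;
    d(x, y) < \delta \implies d(f(x), f(y)) < \epsilon \\
\text{uniformly continuous on } X:\quad
  & \forall \epsilon > 0 \;\; \exists \delta > 0 \;\; \forall x \in X \;\; \forall y \in X:\;
    d(x, y) < \delta \implies d(f(x), f(y)) < \epsilon
\end{align*}
```

In the first line $\delta$ is chosen after $x$, so it may depend on $x$; in the second line $\delta$ is chosen before $x$, so it cannot.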

-

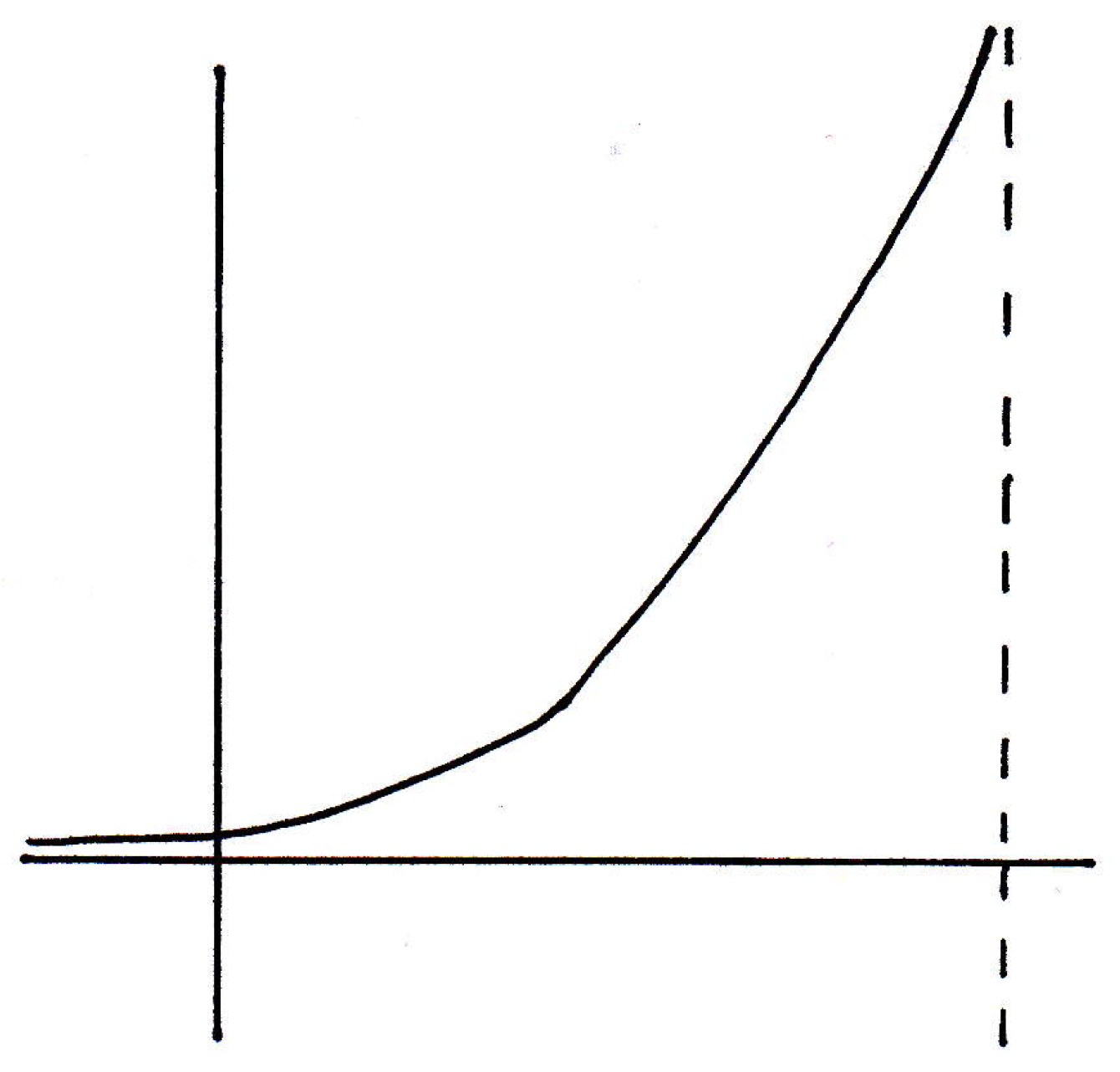

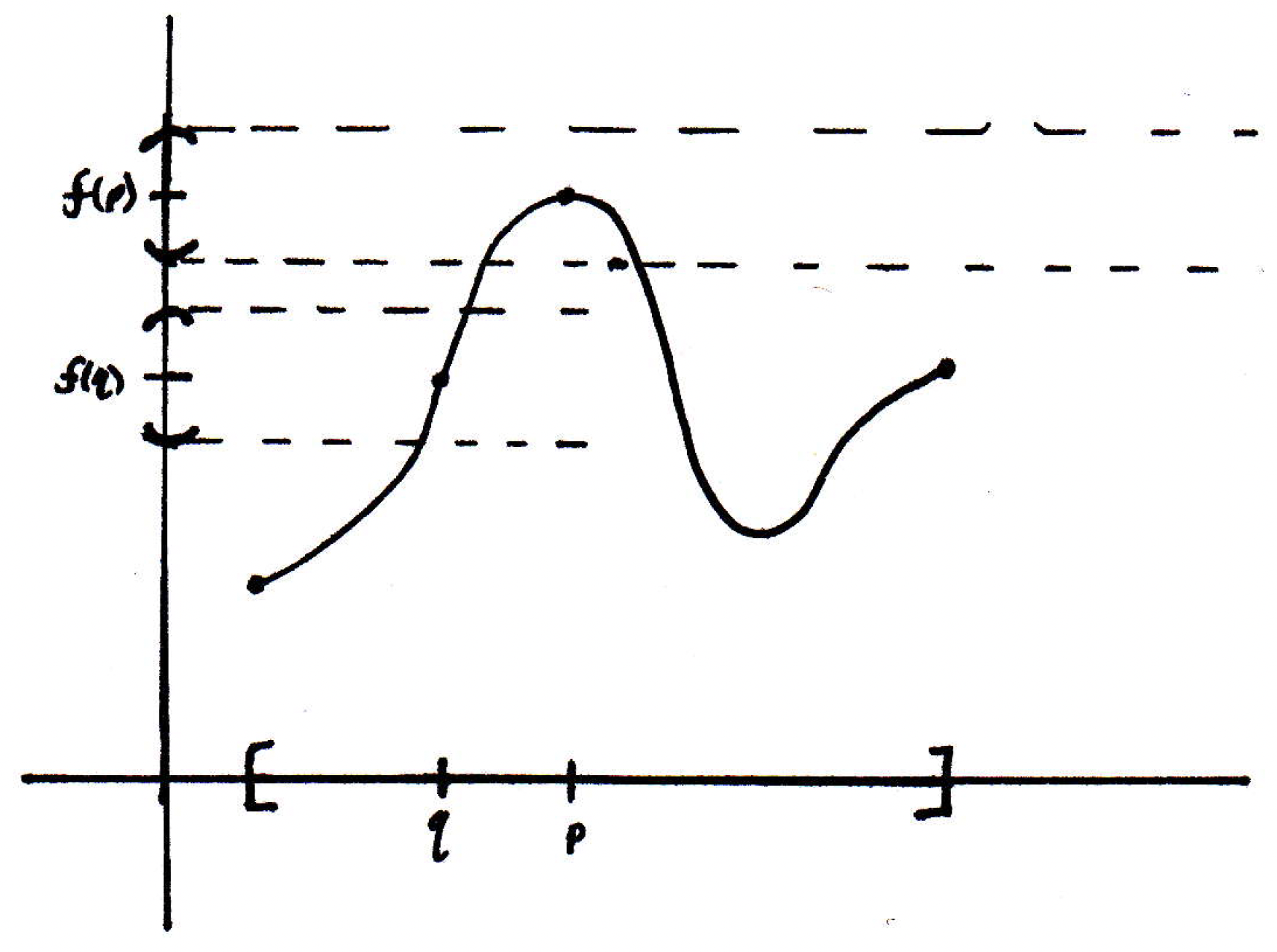

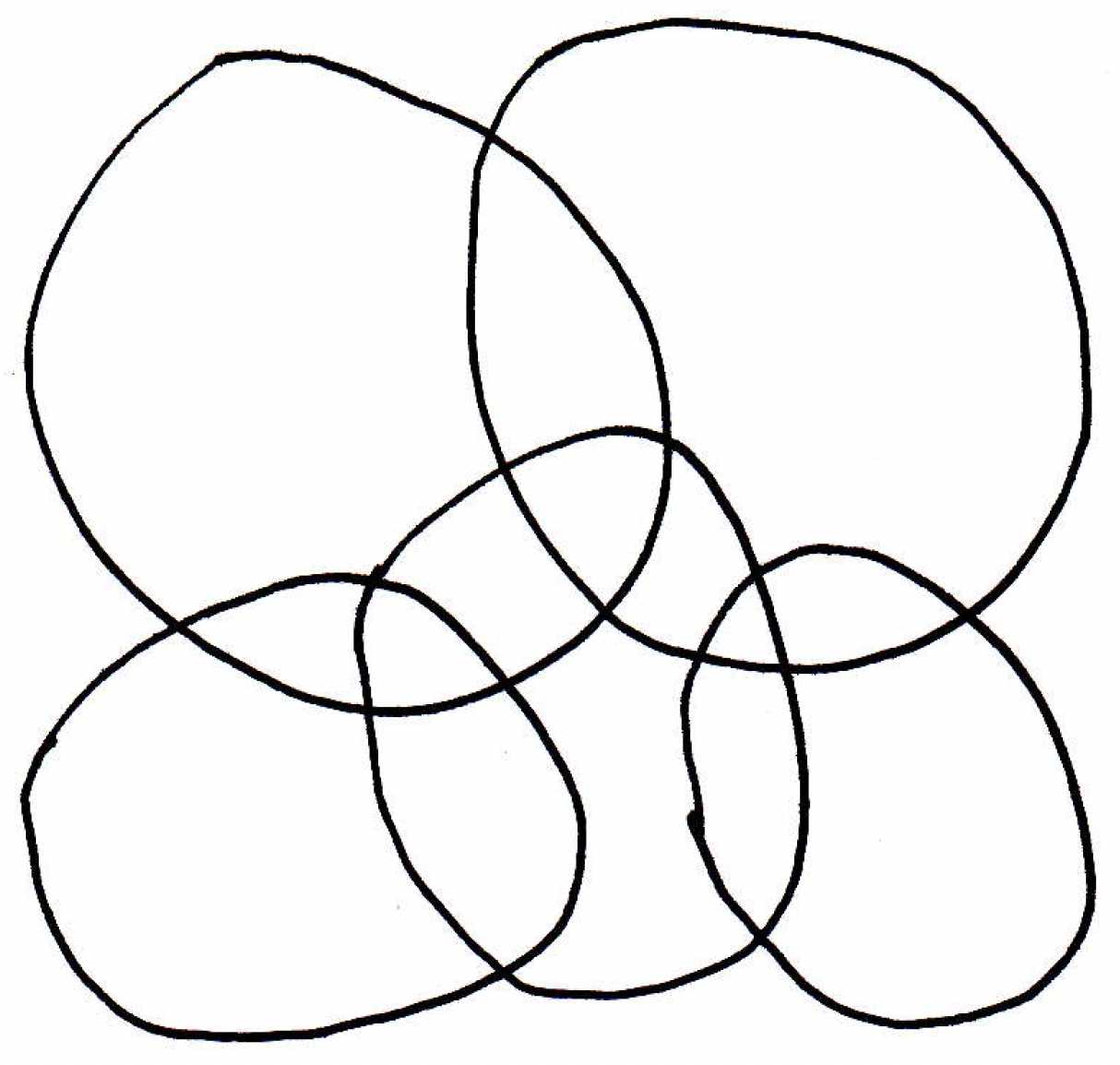

A clearly not uniformly continuous function: Consider the following function:

Clearly this is not a uniformly continuous function. Why? Consider points $x$ and $y$ with a specified $\epsilon$-ball around $f(x)$ and another specified $\epsilon$-ball around $f(y)$:

Then the $\delta$-ball around $x$ that will land in the $\epsilon$-ball around $f(x)$ is actually quite large. But what does it take to land in the same-sized $\epsilon$-ball around $f(y)$?

Note: The $\epsilon$-balls in the picture are not actually the exact same size and should be redrawn. When they are redrawn, the $\delta$-ball around $y$ will still need to be much smaller than that around $x$ to ensure landing in the $\epsilon$-balls around $f(x)$ and $f(y)$.

That by itself, checking two points $x$ and $y$ and seeing that the $\delta$-balls were different, doesn't necessarily make the given function not uniformly continuous. Right? Because you might say, well, the $\delta$-ball around $y$ works for $x$ as well because it's smaller. But what's the difficulty? Will the $\delta$-ball around $y$ work for all points? No. Which ones are you going to worry about? The points beyond $y$ to the right. The $\delta$-ball around $y$ will no longer work because the function is doing something crazy.

And why is it allowed to get away and do something crazy? Well, it's because the domain of the function was not closed to the right. If we had demanded that the function end at the vertical asymptote and be defined there, then this function could not have gone off to infinity and still be continuous. So we see in a very essential way what uniform continuity is demanding of us. It's demanding that the same $\delta$ work for every $x$. So what's the big deal here? When are functions going to be uniformly continuous?
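As a rough numerical illustration of the shrinking $\delta$-balls, here is a small Python sketch. The pictured function is not reproduced in these notes, so the code assumes a stand-in with the same qualitative shape, $f(x) = 1/(1-x)$ on $[0, 1)$, which has a vertical asymptote at the right end of its domain. The names `f`, `largest_delta`, `EPS`, `POINTS`, and `DELTAS` are all illustrative.

```python
def f(x):
    # Hypothetical stand-in for the pictured function: asymptote at x = 1.
    return 1.0 / (1.0 - x)


def largest_delta(x, eps):
    """Largest delta such that |y - x| < delta implies |f(y) - f(x)| < eps.

    For this particular f, moving right (toward the asymptote) is the
    binding direction. Solving f(x + delta) - f(x) = eps with t = 1 - x
    gives delta = eps * t**2 / (1 + eps * t).
    """
    t = 1.0 - x
    return eps * t * t / (1.0 + eps * t)


EPS = 0.5
POINTS = [0.0, 0.5, 0.9, 0.99, 0.999]
DELTAS = [largest_delta(x, EPS) for x in POINTS]

# The same eps needs a smaller and smaller delta as x approaches 1.
for x, delta in zip(POINTS, DELTAS):
    print(f"x = {x:<6} largest delta = {delta:.3e}")
```

For this stand-in the workable $\delta$ tends to 0 as $x$ approaches the asymptote, so no single positive $\delta$ serves every point of $[0, 1)$.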

-

Theorem about continuous mapping with compact domain: Let $f: X \to Y$ be a continuous function, where $X$ is compact. Then $f$ is uniformly continuous on $X$.

In the example given above, this theorem is pointing out where the problem is. It's bringing up the fact that if we had known the domain was compact, then we would have been in good shape. For example, if the domain had stopped at a point right before the asymptote (and included that point), then we'd still be okay. Why? What $\delta$ would work for every point? The $\delta$ that worked at the endpoint. That would work everywhere to the left. So this kind of gives a hint as to what is going on with the theorem.

Now, why is the theorem above so important? Why do we care so much about uniform continuity? Well, uniform continuity basically says the same $\delta$ works for every $x$. It just means that for any target distance $\epsilon$ you name, I can tell you how close you have to be to land within $\epsilon$. The upshot of it all is that it doesn't matter where you are. That's the important part. And the above theorem is saying that you have uniform continuity whenever you have continuity on a compact set.
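The endpoint remark can be spot-checked numerically. The sketch below again assumes the stand-in $f(x) = 1/(1-x)$ (not the function from the picture), restricted to the compact interval $[0, 0.9]$, and checks on a grid that the $\delta$ found at the right endpoint works at every sampled point. All names here (`largest_delta`, `delta_works_everywhere`, `RIGHT_END`, and so on) are hypothetical.

```python
def f(x):
    # Hypothetical stand-in: increasing, with an asymptote at x = 1.
    return 1.0 / (1.0 - x)


def largest_delta(x, eps):
    # For this f: the largest delta that works at the single point x.
    t = 1.0 - x
    return eps * t * t / (1.0 + eps * t)


def delta_works_everywhere(delta, eps, right_end, steps=2000):
    """Grid check that delta keeps |f(x) - f(y)| < eps on [0, right_end].

    Because f is increasing, for each x the worst partners y are the ones
    at (almost) the full distance delta, so only those are sampled.
    """
    for i in range(steps + 1):
        x = right_end * i / steps
        for y in (x - 0.999 * delta, x + 0.999 * delta):
            if 0.0 <= y <= right_end and abs(f(x) - f(y)) >= eps:
                return False
    return True


EPS = 0.5
RIGHT_END = 0.9  # compact domain [0, 0.9], stopping short of the asymptote
DELTA = largest_delta(RIGHT_END, EPS)
OK = delta_works_everywhere(DELTA, EPS, RIGHT_END)
print("delta at the endpoint:", DELTA, "works on the grid:", OK)
```

This is a finite spot check, not a proof; the theorem is what guarantees a uniform $\delta$ on the compact domain.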

-

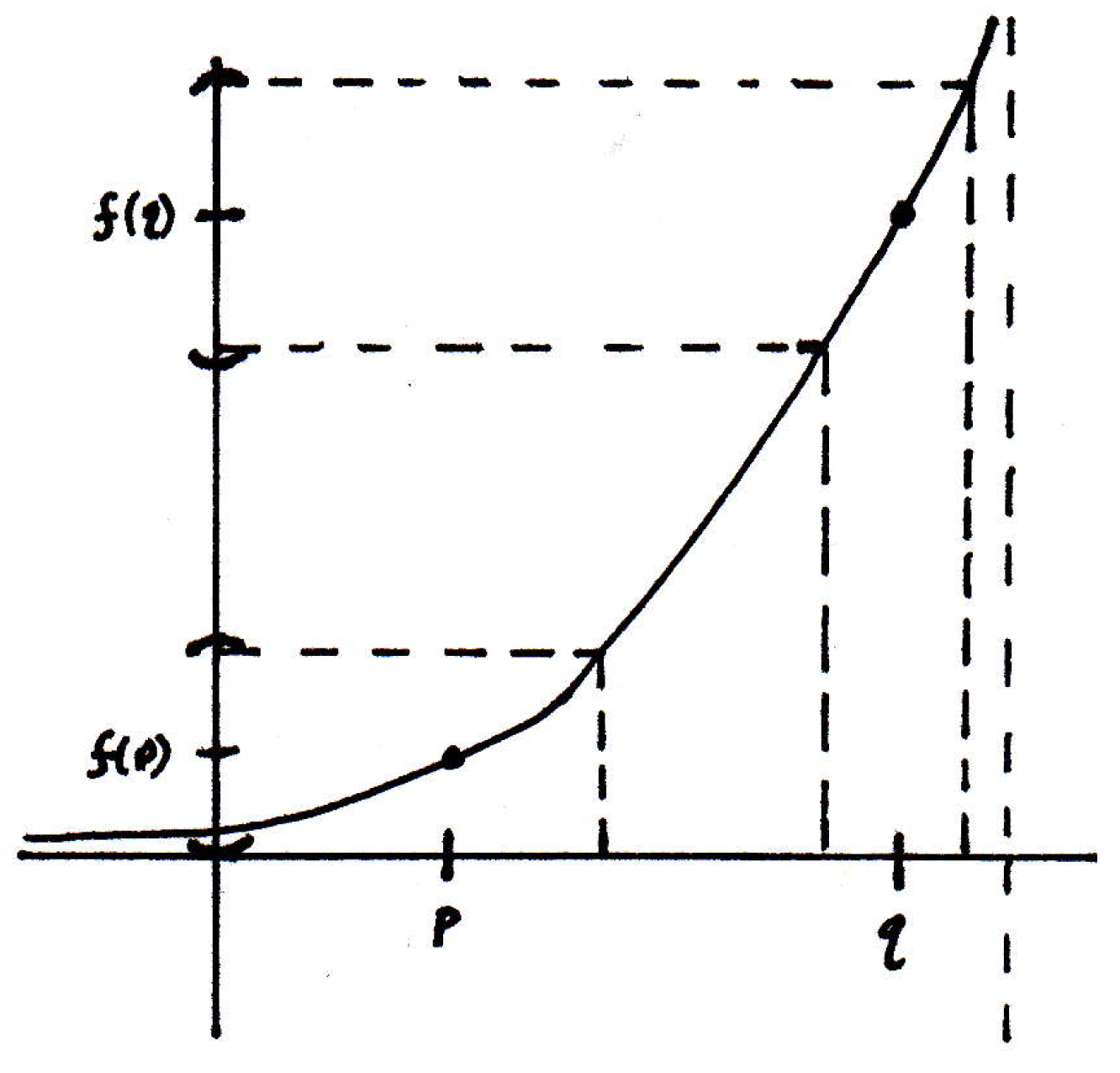

Proof of theorem above (idea): Let's see if we can develop some intuition as to why the theorem above is true. How is compactness going to help us here? Let's draw another picture, where we start with a compact set $X$ (note that the $X$ used in this intuition-developing example need not be a subset of the real line; it could be a subset of any metric space; it could be one-dimensional, two-dimensional, infinite-dimensional, etc.):

So above, $X$ is a compact set in the real line, and we have a function $f$ that's defined on it that's continuous:

What $\delta$ is going to work for every $x$? So maybe we consider points $x$ and $y$ again:

The $\epsilon$-balls around $f(x)$ and $f(y)$ are the same size, but the requisite $\delta$-balls around $x$ and $y$ are obviously different. (The $\delta$-ball around one of the points would have to be much smaller than the $\delta$-ball around the other to ensure that the images stay within $\epsilon$.) We could go over a few places and find some $\delta$-balls that would keep things within $\epsilon$ as well. So lots of different $\delta$'s. What $\delta$ is going to work for every $x$, given $\epsilon$? The smallest one! Is there a smallest one? Not necessarily. There wasn't a smallest one in the previous example. (The $\delta$-balls got smaller and smaller as we went to the right.) How do we know the $\delta$-balls in the current example won't get smaller and smaller and smaller?

What about the point at which the slope is greatest? Intuition is good there, but we have to be a little bit careful. We have not defined derivatives yet, and the function may not even have one (the only requirement is that it be continuous, not necessarily differentiable). But if we could talk about slopes, then it would certainly make sense to think that where the slope was greatest the requisite $\delta$-ball would be smallest. Even this idea presents a slight problem; for example, a function such as $\sqrt[3]{x}$ has an infinite slope at the origin, so the idea of a "maximal slope" is a problematic notion. But it is a good intuition that somehow slope is related to this notion of closeness.

-

Proof of theorem (book's idea): There are many ways to prove the theorem in question. We'll give a different proof from the one in the book, but here's the idea from the book. Every point in the compact set has a $\delta$-ball associated with it. Those $\delta$-balls form an open cover of the set, which is compact; therefore, there is a finite subcover of the set. But the $\delta$-balls by themselves don't necessarily guarantee anything. You have to be careful. And so the book starts off with balls bigger than you need and works out some nifty metric arguments to make everything work. It's worth reading and understanding, but we'll present a different proof that appeals to a famous lemma which we will also prove.

-

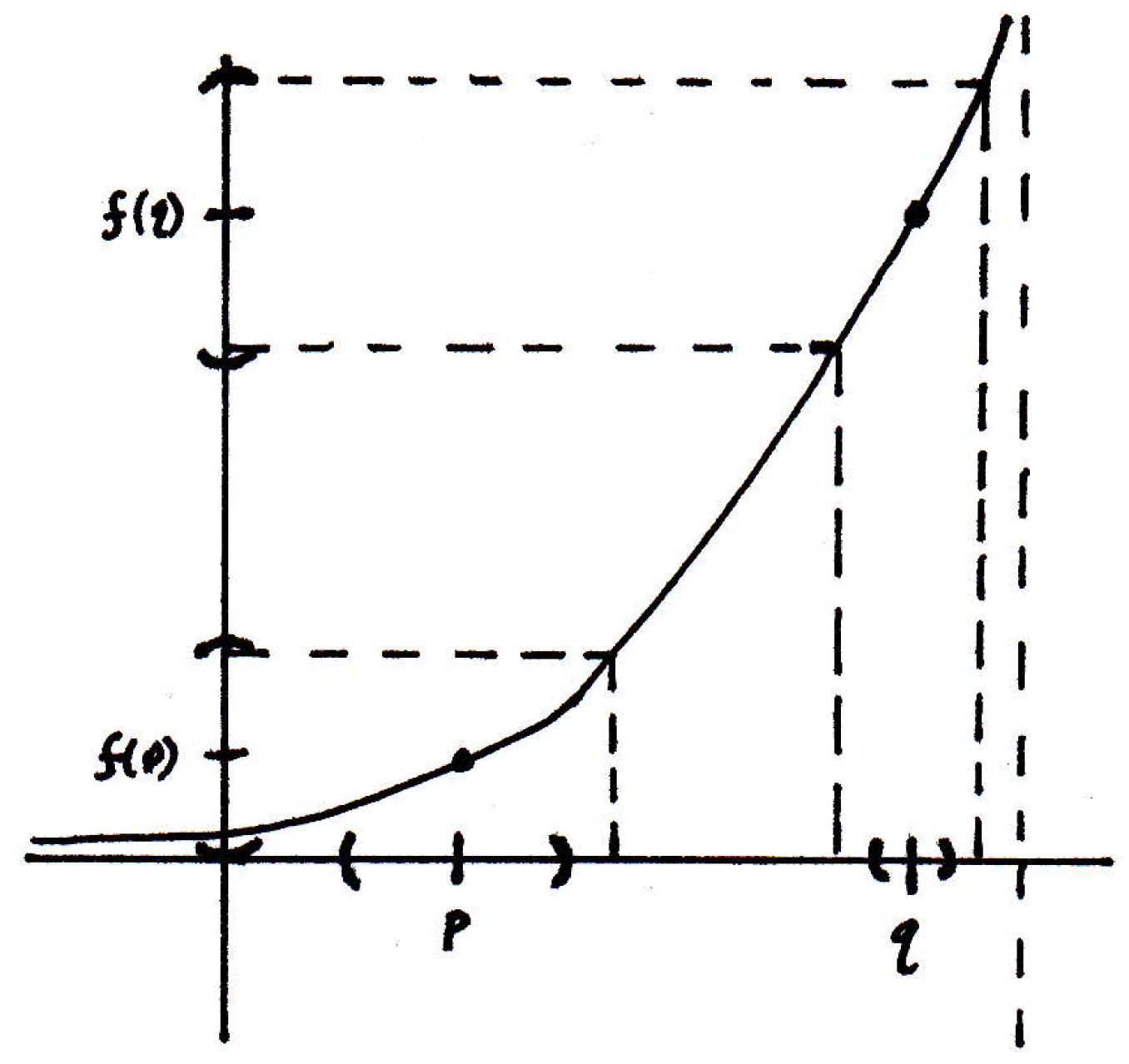

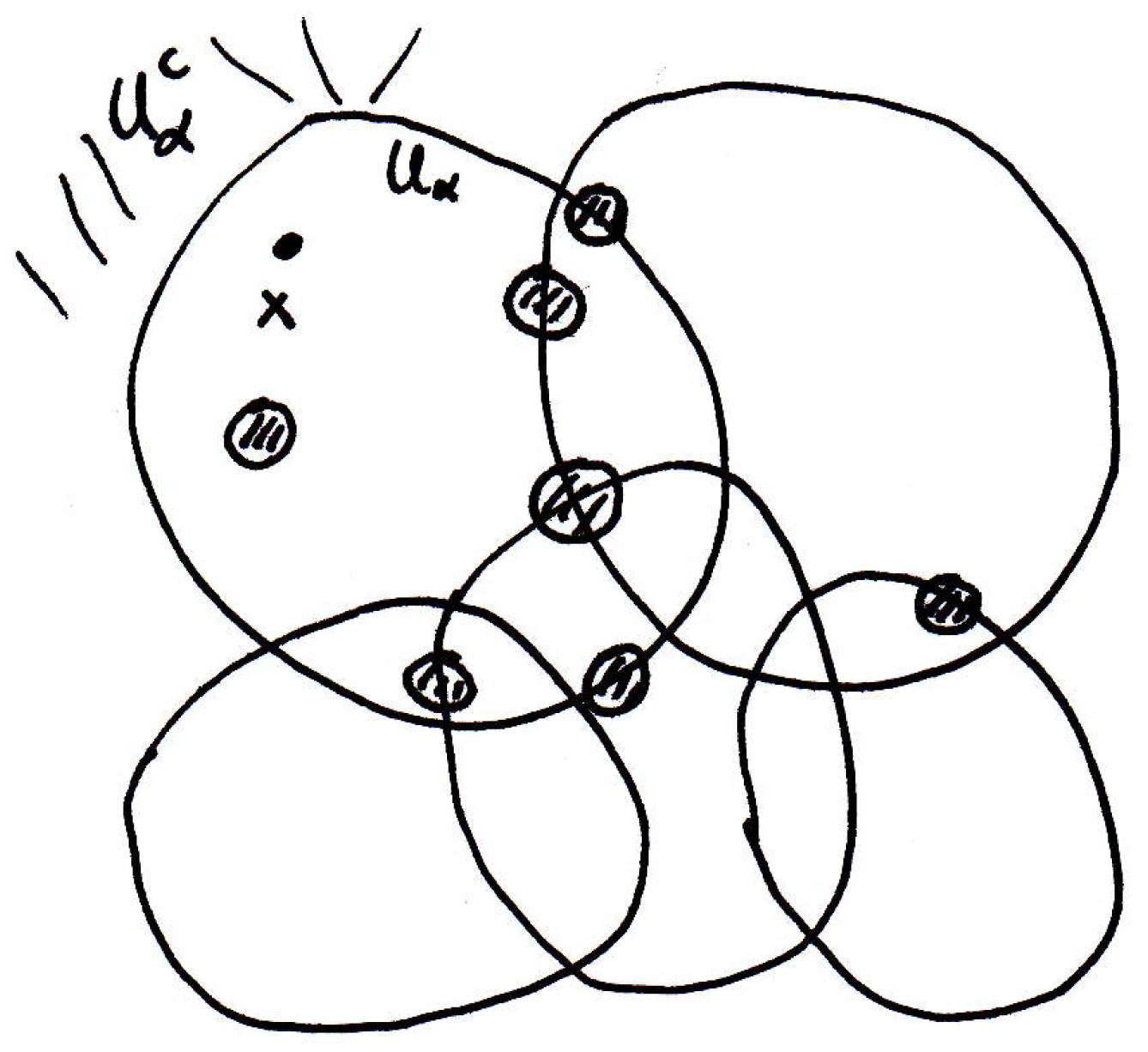

Proof of theorem: Let $\epsilon > 0$ be given. [Our goal is to find a $\delta$ that will work for all points $x \in X$.] Each point $x$ has a $\delta_x$-ball (we write $\delta_x$ because the radius may depend on $x$) that satisfies the condition for continuity, namely that $d(x, y) < \delta_x$ implies $d(f(x), f(y)) < \epsilon'$, where we will treat $\epsilon'$ as a placeholder for now because we will see later what $\epsilon'$ needs to be in order for our proof to work. You name an $\epsilon'$ and we can make this true for a particular $\delta_x$ that may depend on $x$. We'll see momentarily what $\epsilon'$ should be.

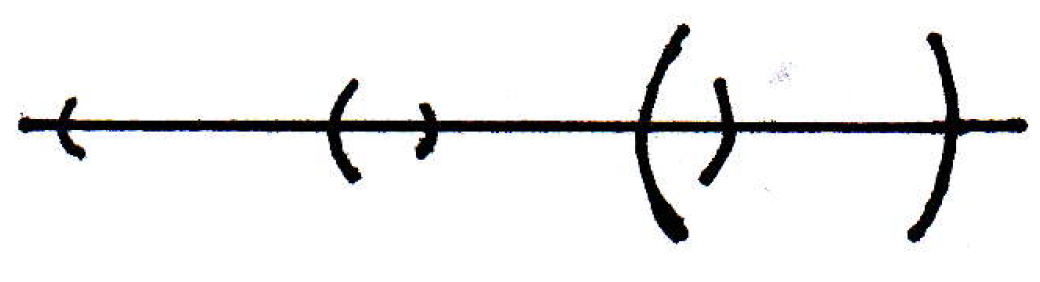

The $\delta_x$-balls cover $X$:

Here's a question: Would we be done with our argument if we could show that, for a small enough distance, any two points within that distance of each other, thrown down anywhere in the picture above, lie in one ball of the cover? Ought that be true? Is that necessarily true? (If we could answer this question, then we would be done, as we will see momentarily.) That is, can we find a $\delta > 0$ such that if $d(p, q) < \delta$ then $p$ and $q$ are in the same element of the cover (i.e., both lie in a single ball $B_{\delta_x}(x)$ from the open cover)? Consider the following picture where you're given $p$ and $q$:

Do they not both lie in one element of the cover, namely the interval with medium-sized parentheses? If you could do that, then we could bound the distance between $f(p)$ and $f(q)$ by their distances to $f(x)$, where $x$ is the center point of this cover element:

If so, then, writing $\epsilon'$ for the placeholder tolerance in the continuity condition for each ball, we have that

$$d(f(p), f(q)) \le d(f(p), f(x)) + d(f(x), f(q)) < \epsilon' + \epsilon' = 2\epsilon',$$

since $d(f(p), f(x)) < \epsilon'$ and $d(f(x), f(q)) < \epsilon'$ (because $p$ and $q$ were in the same ball). If we want $d(f(p), f(q)) < \epsilon$, then we need to make $\epsilon' = \epsilon/2$. So that's the choice we should make at the beginning for $\epsilon'$.

What do we have here? If I could find a $\delta$ that's small enough so that anytime two things $p$ and $q$ are within $\delta$ of each other they live in the same element of the cover, then certainly $f(p)$ and $f(q)$ are within $\epsilon$ of each other. The cover element explicitly drawn above is exactly such a set: it is a $\delta_x$-ball.

Is the whole conjecture from above true? Yes. It's such an important fact that it has its own name. It's called the Lebesgue covering lemma. It's an excuse to prove something that is not covered in Rudin but is used very very often in many contexts.

-

Lebesgue covering lemma: If $\{U_\alpha\}$ is an open cover of a compact metric space $X$, then there exists $\delta > 0$ such that for all $x \in X$, the ball $B_\delta(x)$ is contained in some $U_\alpha$.

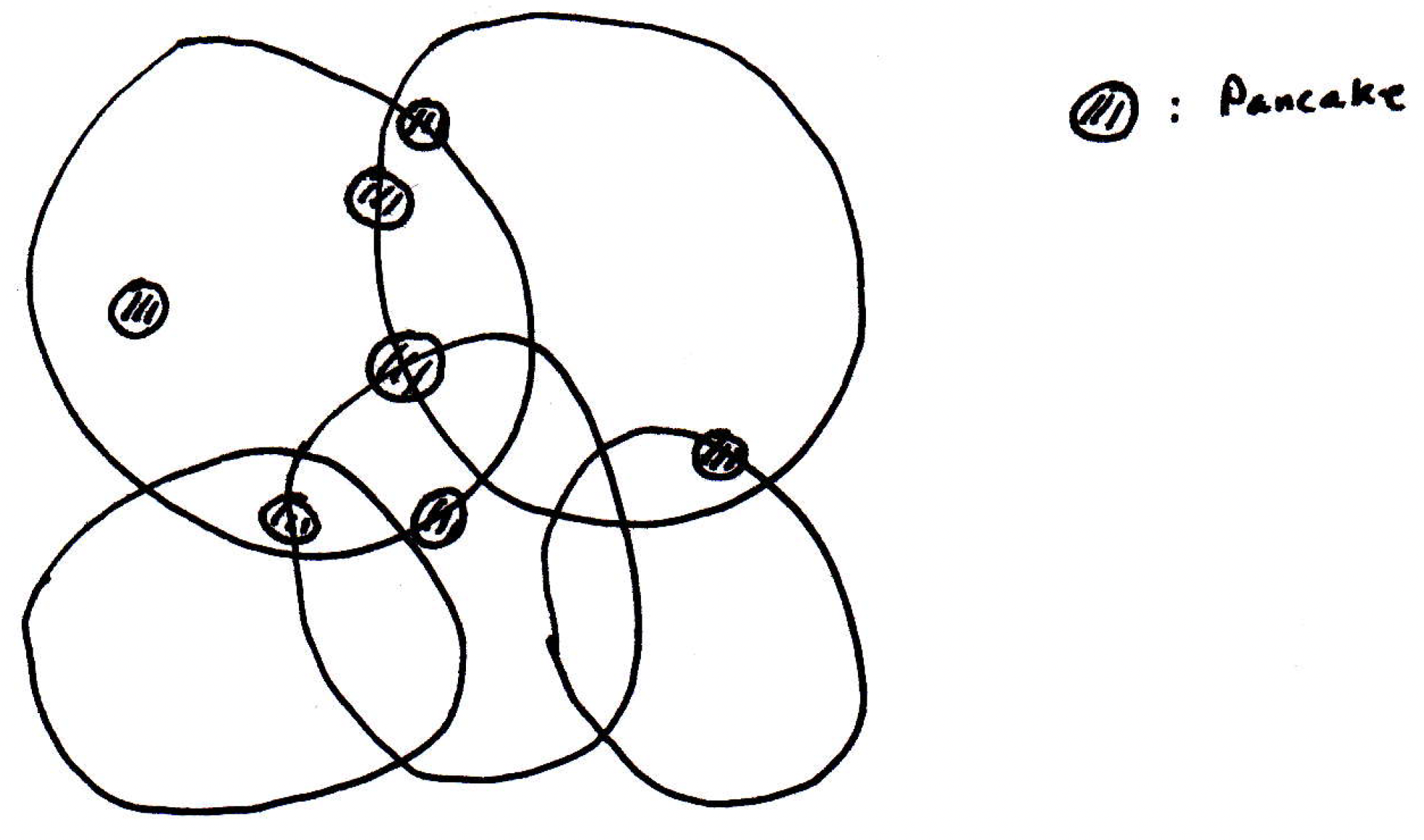

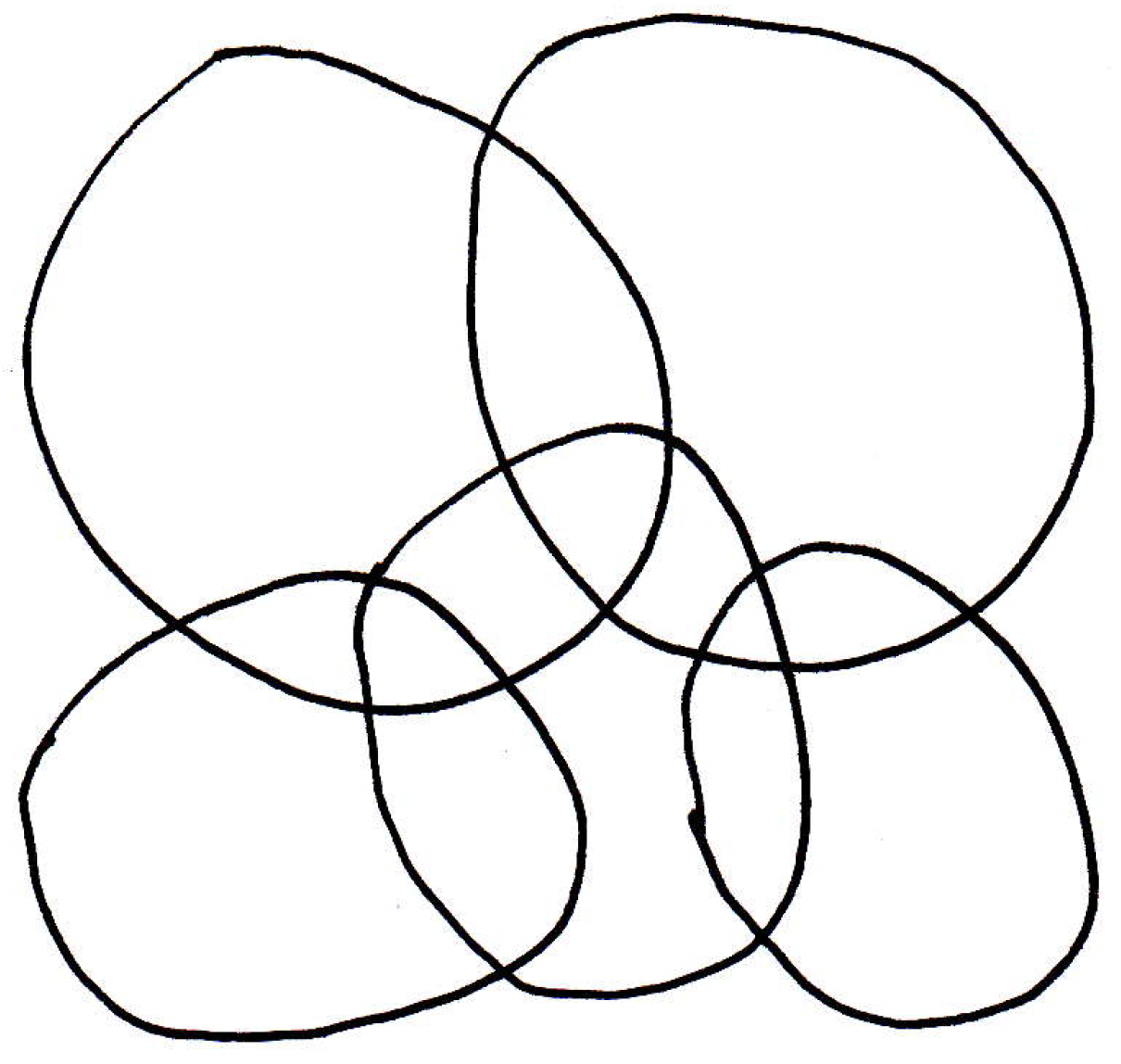

The $\delta$ referenced above has a special name. It's called the Lebesgue number of the cover. This Lebesgue number will exist and be bigger than 0 if the space is compact. The picture you should have in your head is something like the following where the space is covered by a bunch of sets like so:

The Lebesgue number is basically a number $\delta$ such that you give me a little pancake of radius $\delta$, and if I throw that pancake anywhere in the picture, it will land completely within one of the sets $U_\alpha$ (i.e., within one of the covering sets). If there is such a number $\delta$, then it's called the Lebesgue number, and the claim is that if the space is compact, then there will exist such a number. In the picture above, we might have something like the following:

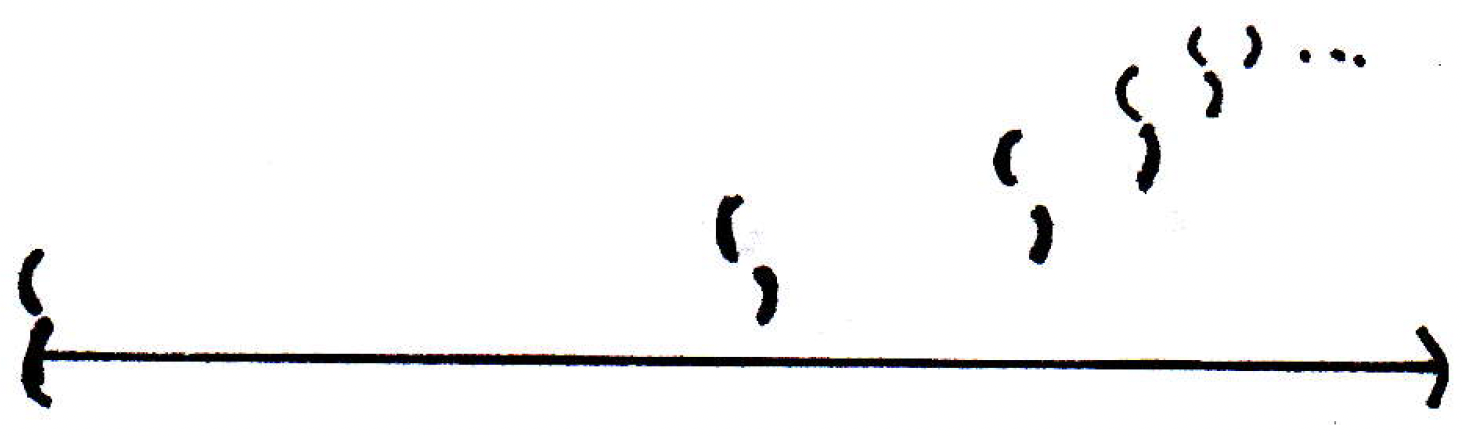

That is, we have a pancake of about the given size in the picture and no matter where we put it, it will lie completely in one element of the cover. Now, this would not necessarily be true if the space were not compact. Imagine the following space, an open interval, covered like so:

So the open interval is covered by many open sets that get smaller and smaller as you go to the right. Throw a pancake down of any size and you get into trouble as you go to the right. So the lemma need not hold if the space is not compact. And that is what we were basically saying before the statement of the lemma here.

If there's a pancake of radius $\delta$ that you can throw down anywhere so that it lands completely within one of those $\delta_x$-balls, then that's the $\delta$ we're interested in, and we're in good shape because we'll be able to complete the proof as desired. So let's see why the Lebesgue number exists.

-

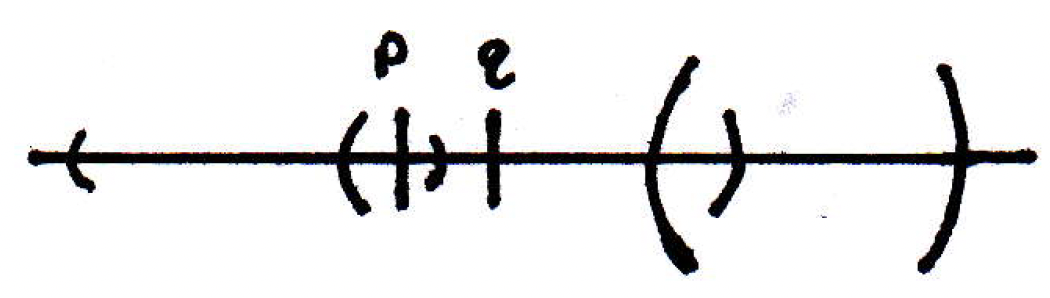

Lebesgue covering lemma (proof): How should we start here? The space $X$ is compact. Since $X$ is compact, there exists a finite subcover $\{U_1, \dots, U_n\}$. So then what? We can remove all but finitely many of the sets shown above in the picture. That's nice.

If the covering sets of our compact space are open, then their complements are closed. Suppose we have a point $x$ in some covering set $U_i$:

Then all the space outside of $U_i$, which is its complement $U_i^c$, is closed. So it should make sense to talk about defining a distance between $x$ and $U_i^c$, since $U_i^c$ is closed.

How should such a distance be defined? If a set $A$ is closed, then we can define $d(x, A) = \inf_{a \in A} d(x, a)$. What can we say about this distance notion? We claim that $d(x, A)$ is a continuous function of $x$. Basically use the triangle inequality and compare to some other close point $y$. So then what?
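The triangle-inequality argument for the continuity of the distance-to-a-set function can be spelled out in a few lines. This is a sketch of the standard argument, using $A$ for a nonempty closed set and $y$ for the nearby comparison point:

```latex
\begin{align*}
d(x, A) &\le d(x, a) \le d(x, y) + d(y, a)
  && \text{for every } a \in A, \\
d(x, A) &\le d(x, y) + d(y, A)
  && \text{taking the infimum over } a \in A, \\
|d(x, A) - d(y, A)| &\le d(x, y)
  && \text{after swapping the roles of } x \text{ and } y.
\end{align*}
```

So given $\epsilon$, the choice $\delta = \epsilon$ works at every point, which shows that $x \mapsto d(x, A)$ is continuous (in fact uniformly continuous).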

If the pictured sets $U_1, \dots, U_n$ cover $X$, then $x$ is in at least one of the covering sets. And so the distance between $x$ and the complement of a covering set that $x$ is in is going to be nonzero. Now, if $x$ is not in a covering set, then the distance between $x$ and the complement of that set will be 0. So we only have finitely many covering sets. Let's look at the distances between $x$ and all of the complements of the covering sets. What would it mean if we found a Lebesgue number $\delta$? If we could throw pancakes of radius $\delta$ down in our original picture and have them land in a set, then that's really saying that the distance from $x$ to one of the complements is at least $\delta$. So if we could show that, for every point $x$, at least one of the sets contains a $\delta$-ball around $x$, that is, $x$ is separated from that set's complement by a minimum distance $\delta$, then we should be in good shape. So let's look at $d(x, U_i^c)$. There's one for every $i$. Now, we're hoping to show that the largest of the $d(x, U_i^c)$ stays above some fixed positive number for every $x$. We could add up all the distances and divide by $n$ (so taking the average) to get a function of $x$:

$$f(x) = \frac{1}{n} \sum_{i=1}^{n} d(x, U_i^c).$$

What can we say about the function $f$ that is the average distance from $x$ to the complements? It must be continuous because it's a sum of continuous functions (scaled by a constant). What else? It's defined on a compact set. So it's a continuous function on a compact set. Thus, by a theorem we discussed recently, it must attain its maximum and minimum. What are we concerned with? The minimum value. So $f$ attains its minimum value. Let's call it $\delta$. What would it mean for the average of a bunch of distances to be at least $\delta$? It would mean one of them is at least $\delta$. And that's all we need. And that's the set that the pancake falls in. So if $f(x) \ge \delta$, then at least one of the $d(x, U_i^c) \ge \delta$, so for this $i$, we have $B_\delta(x) \subset U_i$.

For the above, why is $\delta > 0$? The fact that

$$\{U_1, \dots, U_n\}$$

is a cover means that every $x$ is in one of the covering sets. Thus, our averaged distance function $f$ has to be nonzero everywhere. So its minimum value has to be bigger than 0. That is, $\delta > 0$ because $f(x) > 0$ at each $x$ since the $U_i$ are a cover. Note here that we are really using the fact that $f$ attains its minimum value: you may worry that even if $f(x) > 0$ everywhere its infimum may be 0, but the infimum cannot be zero here because $f$ achieves its minimum somewhere, and that minimum is bigger than 0.
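The averaging construction in the proof can be carried out by hand on a small example. The Python sketch below assumes a made-up finite cover of $X = [0, 1]$ by three open intervals and computes the minimum of the averaged distance function. To keep things simple it measures distance to the complement of each interval in the whole real line rather than in $X$, which can only make the resulting $\delta$ more conservative. The names `dist_to_complement`, `averaged_distance`, `lebesgue_number`, and `COVER` are illustrative.

```python
def dist_to_complement(x, interval):
    # Distance from x to the complement (in the real line) of the open
    # interval (a, b); it is 0 when x lies outside the interval.
    a, b = interval
    return max(0.0, min(x - a, b - x))


def averaged_distance(x, cover):
    # The function from the proof: average distance to the complements.
    return sum(dist_to_complement(x, u) for u in cover) / len(cover)


def lebesgue_number(cover, lo=0.0, hi=1.0):
    """Minimum of the averaged distance over [lo, hi].

    The averaged distance is piecewise linear with corners only at interval
    endpoints and midpoints, so its minimum over [lo, hi] is attained at one
    of those corners or at lo or hi. A result of 0 would mean the intervals
    fail to cover [lo, hi].
    """
    candidates = {lo, hi}
    for a, b in cover:
        for p in (a, b, (a + b) / 2.0):
            if lo <= p <= hi:
                candidates.add(p)
    return min(averaged_distance(x, cover) for x in candidates)


# Hypothetical open cover of [0, 1] by three overlapping intervals.
COVER = [(-0.1, 0.4), (0.3, 0.7), (0.6, 1.1)]
DELTA = lebesgue_number(COVER)
print("a Lebesgue number for this cover:", DELTA)
```

As in the proof, at every point the largest individual distance is at least the average, so a pancake of radius `DELTA` dropped anywhere in $[0, 1]$ lands inside one of the three intervals. The number found this way is valid but not necessarily the largest possible one.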

Connectedness and its relation to continuity

How is connectedness related to continuity?

-

Continuity preserves: We've seen that continuity preserves lots of different things. Continuity preserves limits. It preserves compactness. (The image of a compact set is compact.) Continuity also preserves connected sets. So if you take the image of a connected set, then the image is connected.

-

Theorem concerning images of connected sets being connected: If $f: X \to Y$ is continuous, and $E$ is a connected subset of $X$, then $f(E)$ is connected.

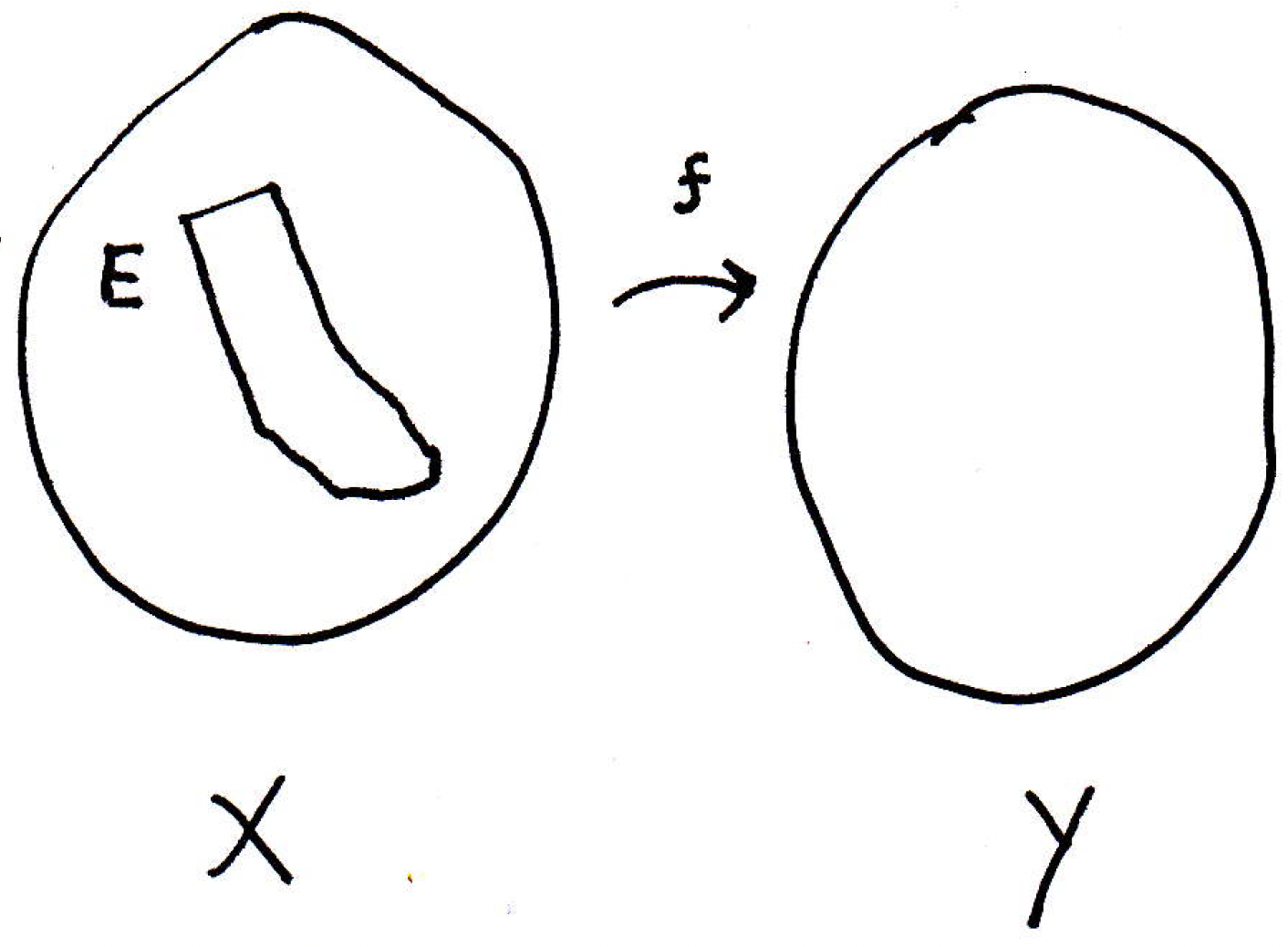

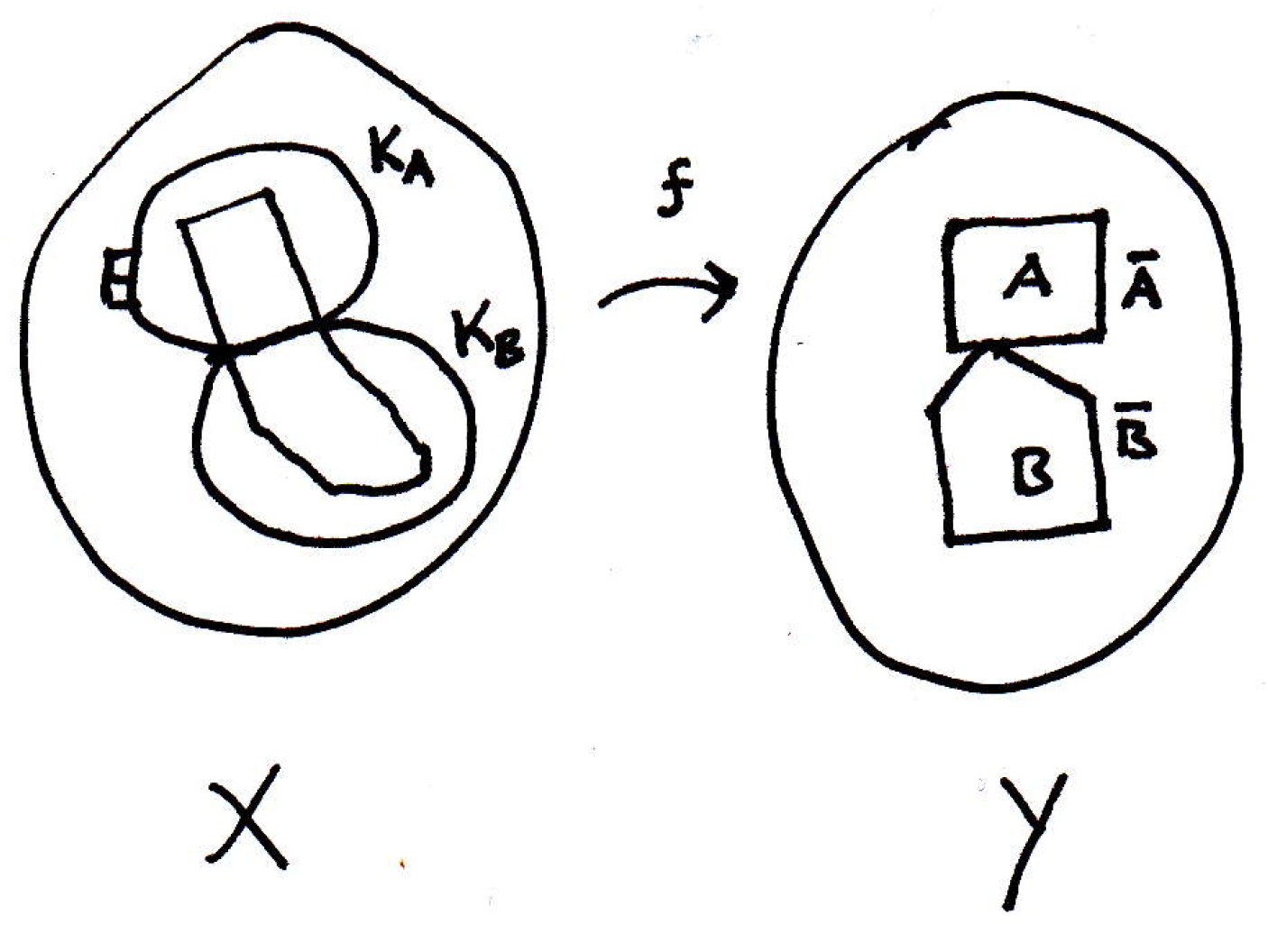

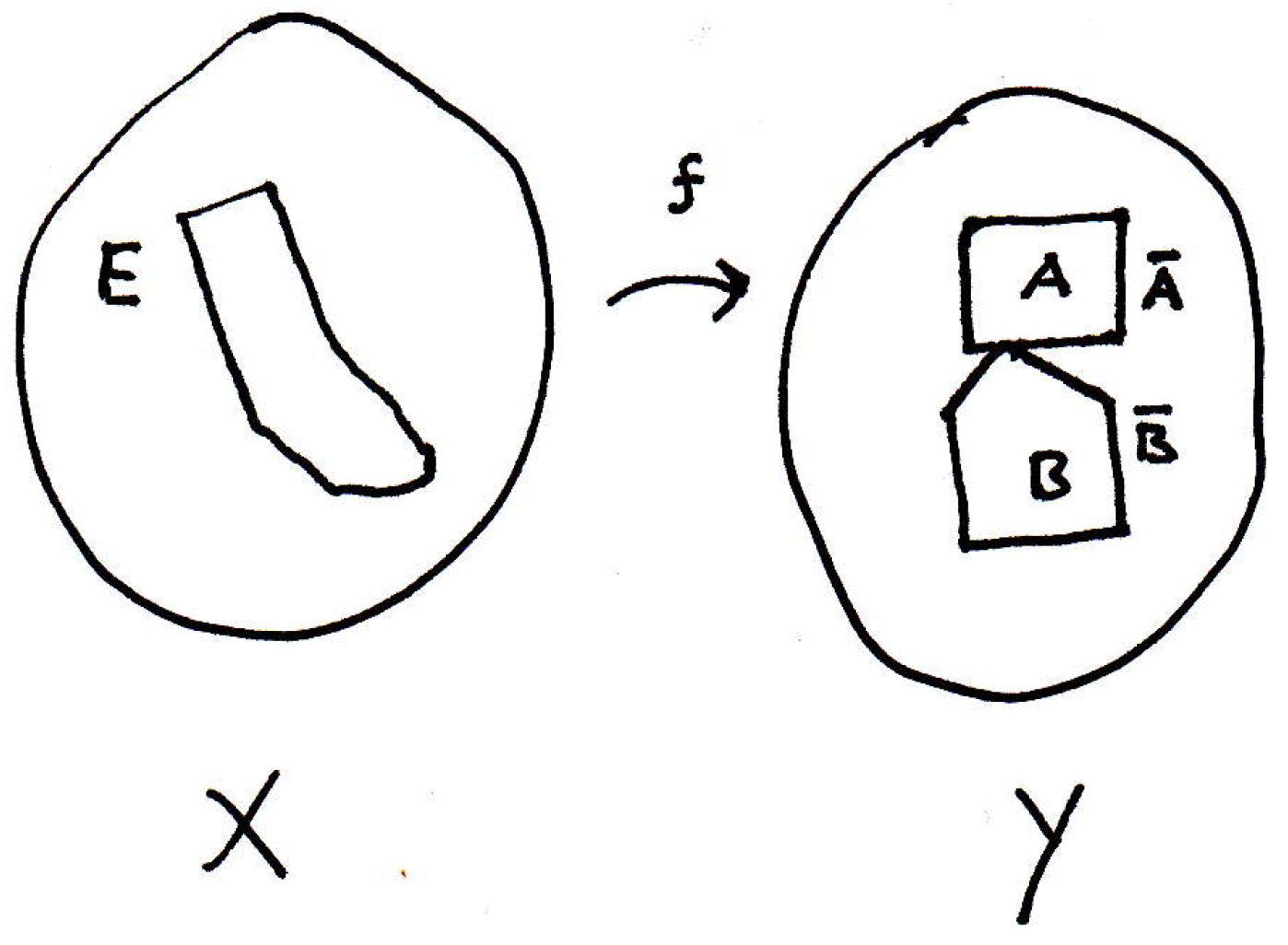

This is a very believable result. You cannot continuously deform a connected set into something that is not connected without introducing a discontinuity. How should we prove this? Let's draw a picture:

The claim is that the image, $f(E)$, is connected. So what's the perfect way to start off this proof? What does it mean for a set to be connected? It cannot be separated; that is, it is not the union of two nonempty, separated sets. It's always easier to work with sets that are not connected, so if we want to show the image is connected, then maybe we should construct a proof by contraposition.

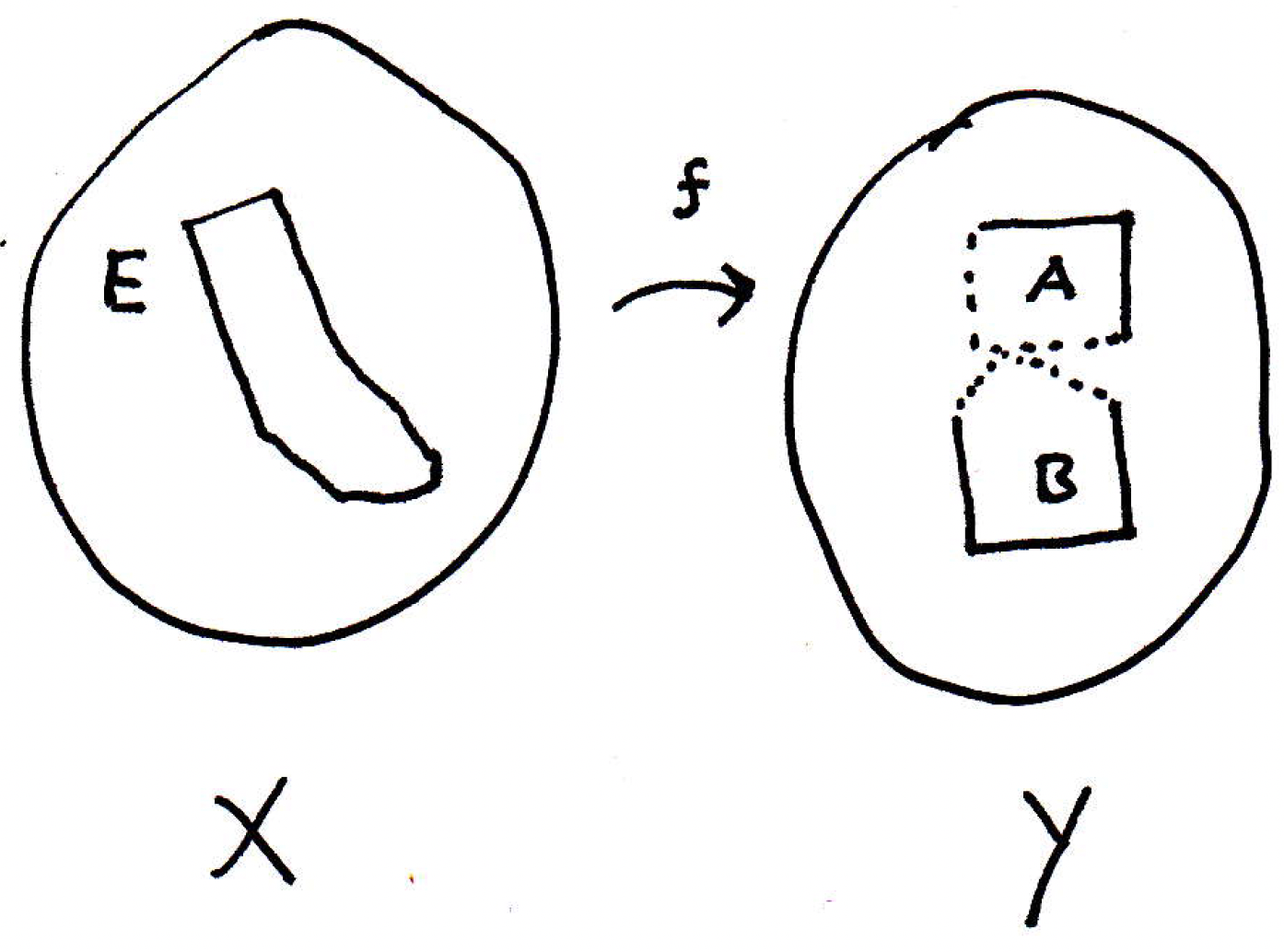

Suppose $f(E)$ is not connected. Then $f(E)$ is separated (our goal will be to show that $E$ is separated and therefore not connected); that is, $f(E) = A \cup B$, where $A$ and $B$ are separated sets (which means $A$ and $B$ are nonempty, and we have $\overline{A} \cap B = \emptyset$ and $A \cap \overline{B} = \emptyset$). Let's draw a picture that cannot be true:

Now, $f$ is continuous, and that means all sorts of different things. Now, if $A$ and $B$ were both open sets, then we would have an easy proof on our hands. If $A$ is open, then because $f$ is continuous, the preimage of $A$ is open. The same goes for $B$. So $E$ would sit inside the union of two disjoint open sets. (In $f(E)$ the sets $A$ and $B$ are clopen, but they need not be open in $Y$.)

We can look at the closures of $A$ and $B$; that is, we'll look at $\overline{A}$ and $\overline{B}$:

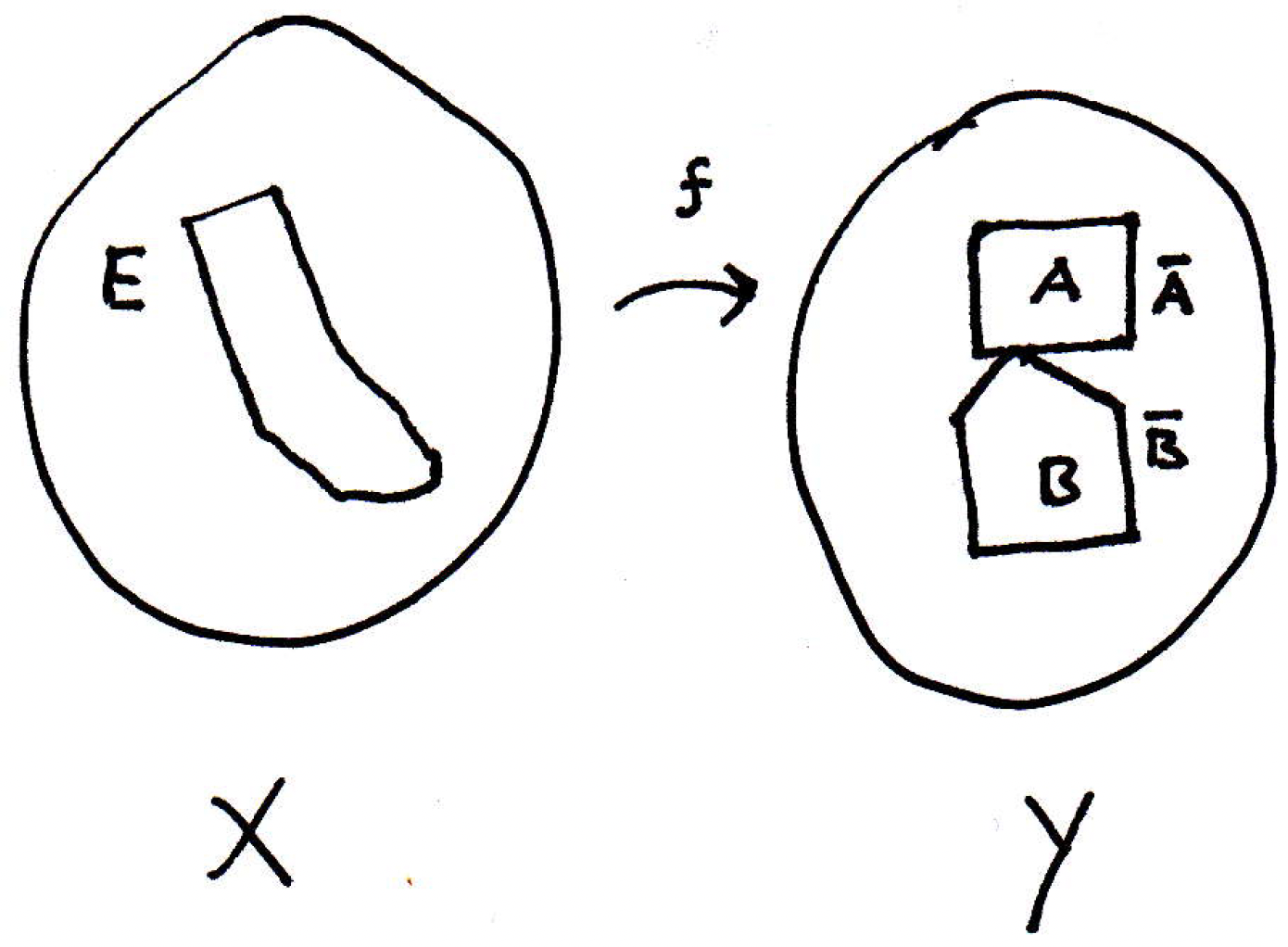

Now, what do we know about $\overline{A}$ and $\overline{B}$, the closures of the two separated pieces $A$ and $B$ of $f(E)$? They are both closed of course, and because $f(E)$ is separated, we must have $\overline{A} \cap B = \emptyset$ and $A \cap \overline{B} = \emptyset$. It might be the case that $\overline{A} \cap \overline{B} \neq \emptyset$. (This is reflected in the picture.) What's the next thing we should do? We don't really know anything about $A$ and $B$. They're not necessarily open or closed. If they were open, then we could take their inverse images and they would be open. If they were closed, then their inverse images would be closed. So let's take the inverse images of $\overline{A}$ and $\overline{B}$. What do we know about those? They're closed. Let's denote these preimages as follows:

$$K_A = f^{-1}(\overline{A}), \qquad K_B = f^{-1}(\overline{B}).$$

Both $K_A$ and $K_B$ are closed since $f$ is continuous, and they are sitting somewhere in $X$, and maybe they look as follows:

What can we say here? What we want to do is use these sets somehow to produce a separation of $E$, which would give us the completion of the proof by contraposition. So what's the separation that might be suggested? What could be a good proposal for a separation of $E$? We know $K_A$ and $K_B$ could not quite work because they might intersect (because $\overline{A}$ and $\overline{B}$ might intersect so their inverse images would intersect) as shown in the picture. What about the interiors? We might be in trouble because we might leave out some points right at the boundary. What about $f^{-1}(A)$ and $f^{-1}(B)$? These are smaller sets. Let's let

$$E_1 = f^{-1}(A) \cap E, \qquad E_2 = f^{-1}(B) \cap E,$$

where we are intersecting $f^{-1}(A)$ and $f^{-1}(B)$ with $E$ because these inverse images may contain many other points in $X$. Now let's update our picture to reflect this:

Then clearly $E_1$ and $E_2$ are disjoint because $A$ and $B$ are disjoint. So inverse images are disjoint. Are $E_1$ and $E_2$ nonempty? Yes. Why? Because $A$ and $B$ are nonempty subsets of $f(E)$. Also, $E = E_1 \cup E_2$ because $f(E) = A \cup B$. We hope to show $E_1$ and $E_2$ are a separation; that is, we want to show that the closure of $E_1$ does not intersect $E_2$ and vice versa. So our claim is that $E_1$ and $E_2$ separate $E$. Let's see why.

Well, $E_1 \subset K_A$, which is closed. (We know that since $A \subset \overline{A}$.) Similarly, $E_2 \subset K_B$, which is also closed. Can we justify that the closure of $E_1$ does not contain a point of $E_2$; that is, can we show that $\overline{E_1} \cap E_2 = \emptyset$? Note that $\overline{E_1}$ is the smallest closed set containing $E_1$; since $K_A$ is closed and contains $E_1$, it must also be the case that $\overline{E_1} \subset K_A$. Similarly, $\overline{E_2} \subset K_B$. Again, why is it the case that $\overline{E_1} \cap E_2 = \emptyset$? Well, $\overline{E_1}$ is in $K_A$, and we claimed that $K_A$ does not intersect $E_2$; that is, $K_A \cap E_2 = \emptyset$. Why? We have $K_A = f^{-1}(\overline{A})$, but $\overline{A}$ is disjoint from $B$, and $E_2$ is contained in the inverse image of $B$, and $f^{-1}(\overline{A}) \cap f^{-1}(B) = f^{-1}(\overline{A} \cap B) = \emptyset$. That is, we have that $\overline{E_1} \cap E_2 = \emptyset$ because $\overline{E_1} \subset K_A$ and $E_2 \subset f^{-1}(B)$, and we also have that $K_A \cap f^{-1}(B) = \emptyset$. So we've used the separation property here. And similarly you can show that $E_1 \cap \overline{E_2} = \emptyset$. Therefore we have a separation. So $E$ is separated. And this concludes the proof by contraposition. So the image of a connected set is connected. No big surprise there, but it gives a very useful consequence.

-

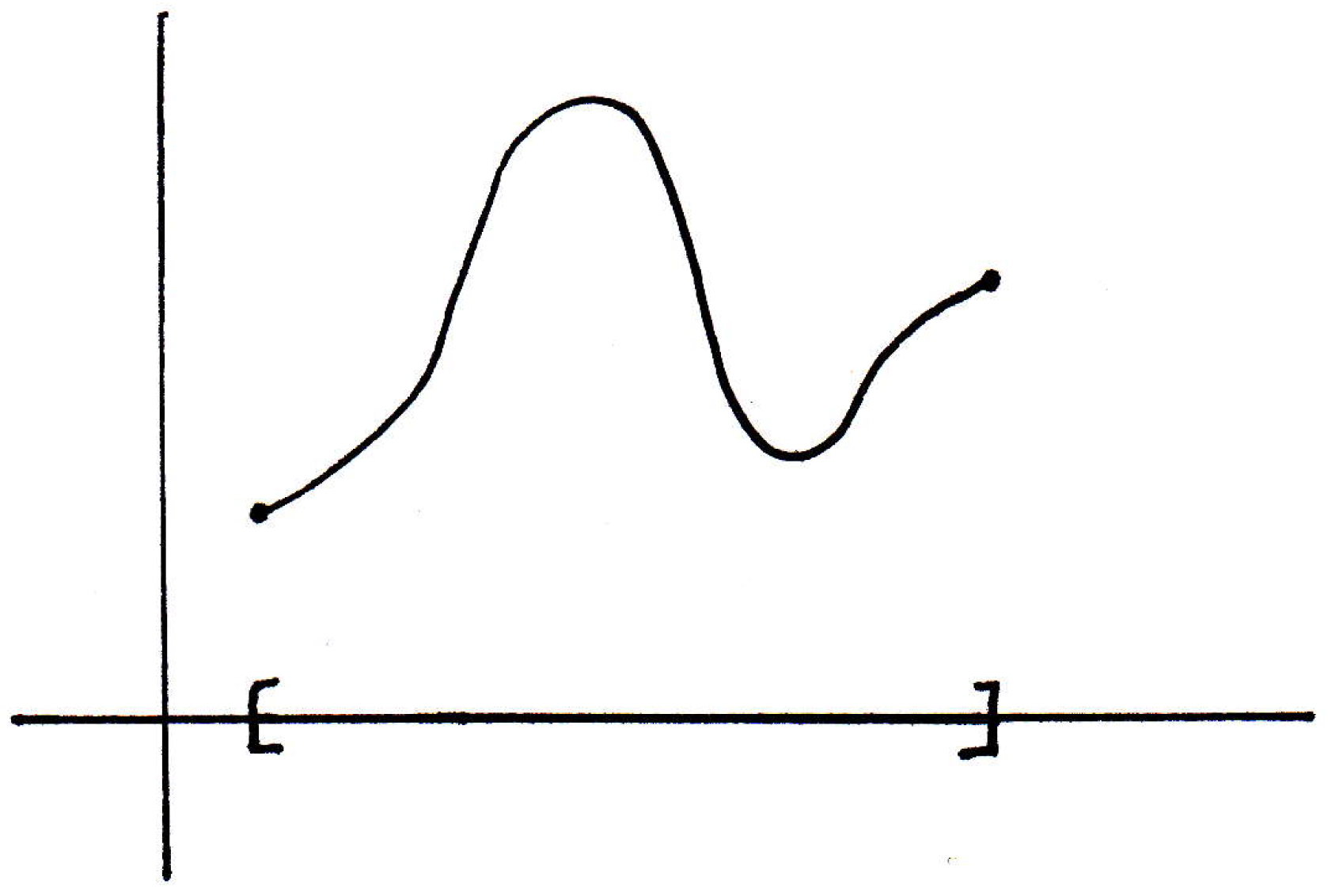

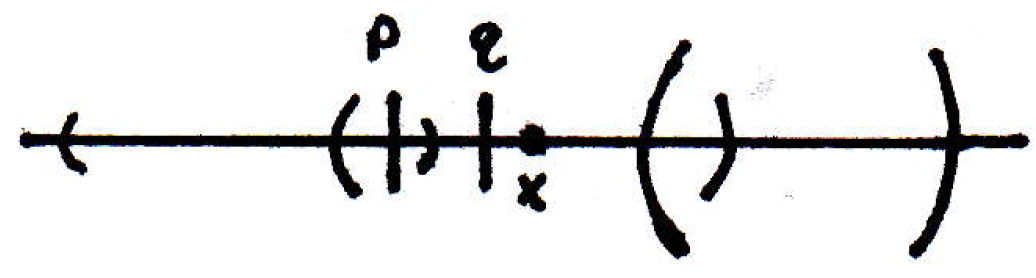

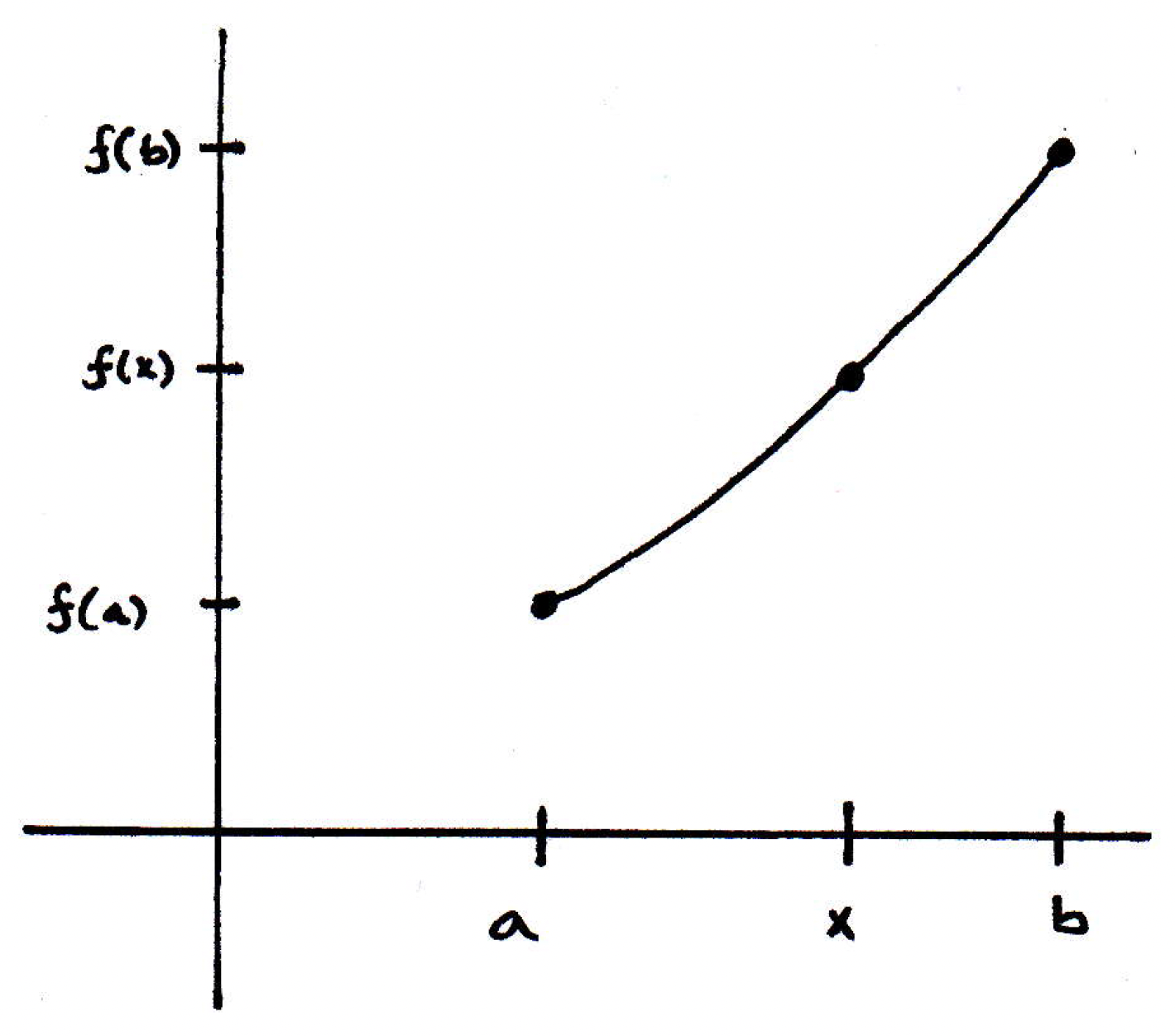

Intermediate value theorem (ideas): Can we see why this theorem is about dealing with images of connected sets? What does the intermediate value theorem say? It says that if $f: [a, b] \to \mathbb{R}$ is continuous, and $f(a) < c < f(b)$, then there exists $x \in (a, b)$ such that $f(x) = c$.

A classic picture of this situation is the following:

Why is this a consequence of the theorem about connected sets? Well, we have a connected interval $[a, b]$. Its image is connected. Suppose the image never hits $c$, but it hits $f(a)$ and $f(b)$. Then the image would be disconnected. In fact, you could disconnect it using the part below $c$ and the part above $c$. We claim that's a separation because the closure of the part above $c$ can add at most the point $c$ itself, so it can't intersect the part below $c$. And vice versa.

-

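Intermediate value theorem (proof): The fact that $[a, b]$ is connected implies that the image $f([a, b])$ is connected, but if $c$ is not achieved, then $c$ would disconnect $f([a, b])$. More formally, we would look at $f([a, b]) \cap (-\infty, c)$ and $f([a, b]) \cap (c, \infty)$, which form a separation of $f([a, b])$: both are nonempty since they contain $f(a)$ and $f(b)$ respectively. That's the basic idea.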
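The connectedness proof shows that a point $x$ with $f(x) = c$ exists but does not say how to find it. For a concrete feel, here is a Python sketch of bisection, which is a different, constructive technique (not the argument used above) that relies on the same hypotheses: $f$ continuous on $[a, b]$ with $f(a) < c < f(b)$. The function `bisect_for_value` and the example `cubic` are hypothetical names chosen for illustration.

```python
def bisect_for_value(f, a, b, c, tol=1e-12):
    """Approximate some x in (a, b) with f(x) = c by repeated halving.

    Assumes f is continuous on [a, b] and f(a) < c < f(b). The loop keeps
    f(lo) < c <= f(hi), so a solution always lies in [lo, hi].
    """
    if not (f(a) < c < f(b)):
        raise ValueError("need f(a) < c < f(b)")
    lo, hi = a, b
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if f(mid) < c:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


def cubic(x):
    # Example continuous function with cubic(1) = 0 and cubic(2) = 6.
    return x ** 3 - x


# Look for a point in (1, 2) where the cubic takes the intermediate value 1.
X_STAR = bisect_for_value(cubic, 1.0, 2.0, 1.0)
print("x =", X_STAR, "f(x) =", cubic(X_STAR))
```

Bisection only locates one such point, and only approximately; the theorem itself makes no claim about uniqueness.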

-

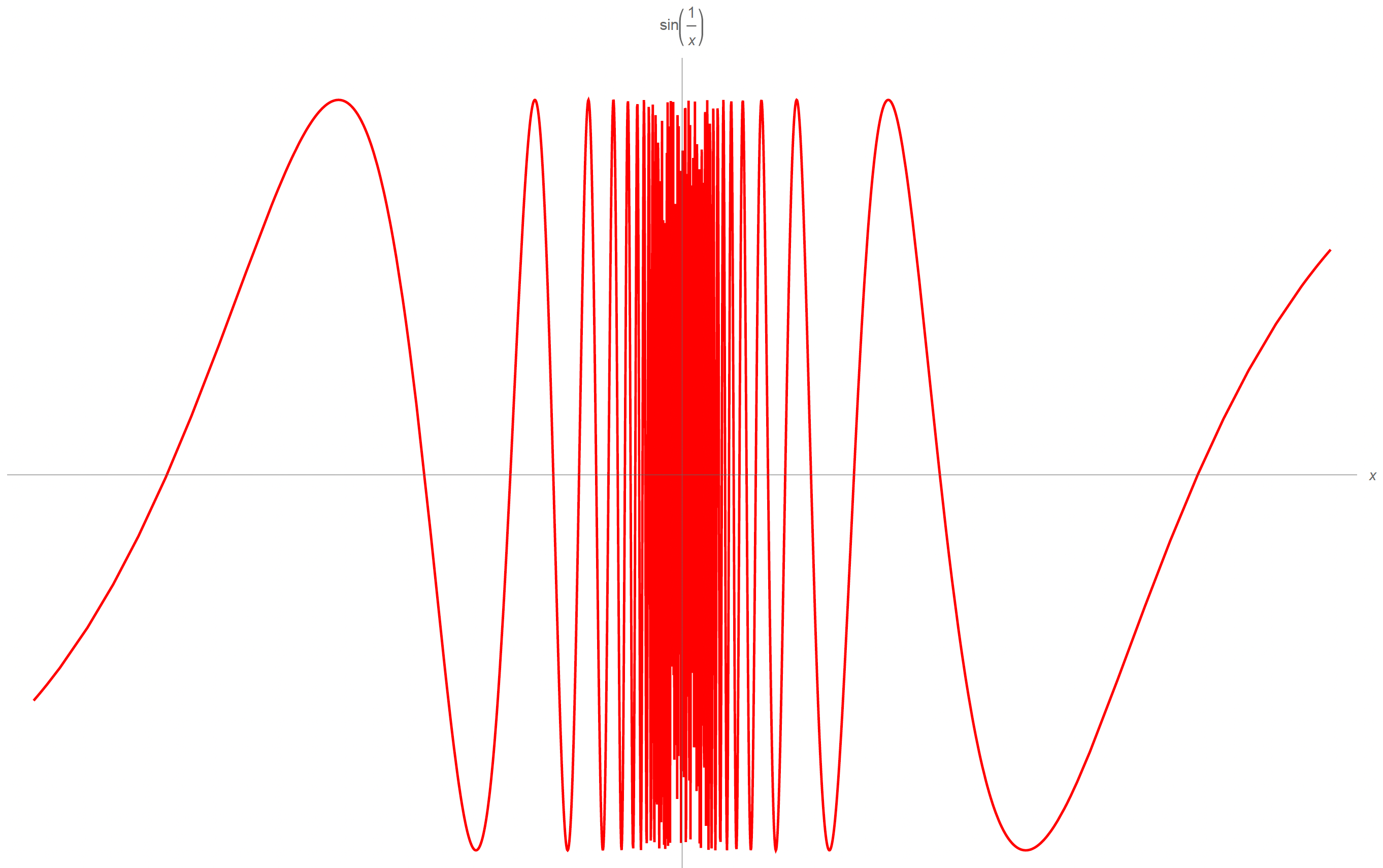

Converse of intermediate value theorem: Is the converse of the intermediate value theorem true? That is, if a function has the intermediate value property, must it be continuous? No. Consider the function

$$f(x) = \begin{cases} \sin(1/x) & \text{if } x \neq 0, \\ 0 & \text{if } x = 0. \end{cases}$$

The graph basically looks like the following:

The claim is that the function above is not continuous at 0, but it satisfies an intermediate value property.
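Both halves of the claim can be probed numerically. The sketch below assumes the function is the standard example $f(x) = \sin(1/x)$ for $x \neq 0$ with $f(0) = 0$ (an assumption, since the formula is not preserved in these notes). For any target value $c \in [-1, 1]$ it produces points arbitrarily close to 0 where $f$ equals $c$, which illustrates why values are not skipped near 0 and also why $f$ cannot be continuous there. The helper name `point_with_value` is hypothetical.

```python
import math


def f(x):
    # Assumed example: sin(1/x) away from 0, and 0 at the origin.
    return math.sin(1.0 / x) if x != 0 else 0.0


def point_with_value(c, k):
    """Return a point x_k > 0 with f(x_k) = c (up to rounding), for k >= 1.

    Since sin(asin(c) + 2*pi*k) = c, the point x_k = 1 / (asin(c) + 2*pi*k)
    works, and x_k tends to 0 as k grows.
    """
    return 1.0 / (math.asin(c) + 2.0 * math.pi * k)


# Points marching toward 0 on which f is (approximately) constantly 1,
# even though f(0) = 0: so f(x_k) does not converge to f(0).
for k in (1, 10, 100, 1000):
    x_k = point_with_value(1.0, k)
    print(f"k = {k:<5} x_k = {x_k:.3e}  f(x_k) = {f(x_k):.6f}")
```

Any other value in $[-1, 1]$ can be substituted for the first argument of `point_with_value` to see that it is attained on every interval $(0, h)$.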

-

Next time (discontinuities): We know what it means for a function to be continuous everywhere. Is it possible for a function to be discontinuous everywhere? That's an interesting question. We'll explore it more next time.