19 - Series - convergence tests

Where we left off last time

Where did we leave off last time and where were we going? What are some more convergence tests we can use for series?

-

Recap: Last time we were talking about series, which are special kinds of sequences. A series is a sequence of partial sums. Last time we asked ourselves how we can tell whether or not a series converges. So what if you have a series like

$$\sum_{n=1}^{\infty} a_n?$$

We should look at this as a sequence of partial sums:

$$a_1,\quad a_1 + a_2,\quad a_1 + a_2 + a_3,\quad \ldots$$

or also (but less useful in terms of appearance)

$$\left\{\sum_{k=1}^{n} a_k\right\}_{n=1}^{\infty}.$$

And we wanted to know whether or not the sequence of partial sums converged. And what are some of the things we talked about last time? How did we test for convergence of a series? We can compare the given series to another series we know something about. The comparison test tells us to compare term by term to see if the given series is dominated in some way. What would we compare to, for instance? The geometric series is a classic series to look at when comparing things. We gave conditions for which $\sum 1/n^p$ converges, and we did this by making use of a clever comparison. The comparison test itself has its roots in what? The Cauchy criterion for sequences, when applied to series, gives a Cauchy criterion for series. We want to show that if the tails are bounded then we are in very good shape.

-

Note about root and ratio tests that follow: In what follows, the "high school" definitions of these tests will be given first, and then these will be modified appropriately based on our new knowledge.

-

The root test (high school; see [9]): Let $L = \lim_{n\to\infty} \sqrt[n]{|a_n|}$. We have three cases:

- If $L < 1$, then the series is absolutely convergent and therefore convergent.

- If $L > 1$ or $L = \infty$, then the series is divergent.

- If $L = 1$, then the root test is inconclusive.

-

The root test: Given $\sum a_n$, let $\alpha = \limsup_{n\to\infty} \sqrt[n]{|a_n|}$. We have three cases:

- If $\alpha < 1$, then $\sum a_n$ converges.

Proof. If $\alpha < 1$, choose $\beta$ such that $\alpha < \beta < 1$. If $\limsup_{n\to\infty} \sqrt[n]{|a_n|} < \beta$, then that means there is a point in the sequence beyond which all the terms are smaller than $\beta$. Thus, there exists $N$ such that $n \ge N$ implies that $\sqrt[n]{|a_n|} < \beta$ (by the definition of $\limsup$). So now we are going to compare with the geometric series whose ratio is $\beta$. But what does $\sqrt[n]{|a_n|} < \beta$ really mean and why is it useful? It's the same as saying that $|a_n| < \beta^n$ for $n \ge N$. Well, we know $\sum \beta^n$ converges, so $\sum |a_n|$ converges also. So then why does $\sum a_n$ converge also? By the comparison test. So this concludes the proof here.

- If $\alpha > 1$, then $\sum a_n$ diverges.

Proof. If $\alpha > 1$, then what goes wrong here? Well, if $\alpha > 1$, then we have $\limsup_{n\to\infty} \sqrt[n]{|a_n|} > 1$ by definition, and this means that there is a subsequence that converges to something bigger than 1. But if the subsequence converges to something bigger than 1, then that basically means the terms do not go to zero. That's the intuition here.

There exists a sequence $\{n_k\}$ such that $\sqrt[n_k]{|a_{n_k}|} \to \alpha$. So what? Well, then $|a_n| > 1$ for infinitely many terms, so the terms do not go to zero and the series diverges.

- If $\alpha = 1$, then the test gives no information.

Proof. If $\alpha = 1$, then note that $\sum 1/n$ diverges but $\sum 1/n^2$ converges. Since $\alpha = 1$ in both cases, we realize the test is inconclusive.

In high school you let $L = \lim_{n\to\infty} \sqrt[n]{|a_n|}$, but this is not the best way of stating this because the limit may not exist. But here's something that always exists: the limit may not exist but the $\limsup$ always exists. It might possibly be infinite, but the point is that it can be calculated in the extended reals.

In terms of motivation, we realize that when we put $\sqrt[n]{|a_n|} \approx \alpha$, that is kind of like $|a_n| \approx \alpha^n$. So the series kind of looks like the geometric series in some way. This was the motivation for comparing to the geometric series. (Many proofs will involve comparisons with the geometric series.) As a side note, the root test is way more powerful than the ratio test, but the ratio test is often easier to use. The reason the ratio test is not always so powerful is that there are series that converge but for which the ratio we look at does not give much information. So you might look at a series like

$$\frac{1}{2} + \frac{1}{2} + \frac{1}{4} + \frac{1}{4} + \frac{1}{8} + \frac{1}{8} + \cdots$$

Well, the successive ratios will be 1, $\frac{1}{2}$, 1, $\frac{1}{2}$, etc. The ratio test here is not going to tell me much. That's why the ratio test is not always so powerful.
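To see the difference between the two tests numerically, here is a minimal sketch. It assumes the illustrative series $\frac{1}{2} + \frac{1}{2} + \frac{1}{4} + \frac{1}{4} + \cdots$ (each term of a geometric series repeated twice); the function names `term`, `ratios`, and `nth_roots` are hypothetical helpers, not anything from the lecture.

```python
def term(n):
    """n-th term (n >= 1) of the assumed series 1/2 + 1/2 + 1/4 + 1/4 + ..."""
    k = (n + 1) // 2          # terms 2k-1 and 2k are both equal to 2**(-k)
    return 2.0 ** (-k)


def ratios(count):
    """Successive ratios a_{n+1} / a_n for n = 1, ..., count."""
    return [term(n + 1) / term(n) for n in range(1, count + 1)]


def nth_roots(count):
    """The quantities |a_n|**(1/n) for n = 1, ..., count."""
    return [term(n) ** (1.0 / n) for n in range(1, count + 1)]


# The ratios keep returning to 1, so their limsup is 1 (ratio test is silent),
# while the n-th roots settle near 1/sqrt(2) < 1 (root test gives convergence).
print(ratios(6))
print(max(nth_roots(200)[100:]))
```

The ratios never drop below 1 for good, yet they are also not at least 1 from some point on, so neither half of the ratio test applies, whereas the root test sees the underlying geometric decay.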

-

The ratio test (high school; see [9]): Let $L = \lim_{n\to\infty} \left|\frac{a_{n+1}}{a_n}\right|$. We have three cases:

- If $L < 1$, then the series is absolutely convergent and therefore convergent.

- If $L > 1$ or $L = \infty$, then the series is divergent.

- If $L = 1$, then the ratio test is inconclusive.

-

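The ratio test: The series $\sum a_n$

- converges if $\limsup_{n\to\infty} \left|\frac{a_{n+1}}{a_n}\right| < 1$, and

Proof. If $\limsup_{n\to\infty} \left|\frac{a_{n+1}}{a_n}\right| < 1$, then once again we have $\left|\frac{a_{n+1}}{a_n}\right| < \beta$ for $n \ge N$, for some $N$ and some $\beta$ with $\beta < 1$. How does this help me compare? And what are we going to compare to? The geometric series again! Well, from $\left|\frac{a_{n+1}}{a_n}\right| < \beta$ we can make the connection $|a_{n+1}| < \beta |a_n|$, so each time we're introducing at most a factor of $\beta$. What does this mean? We could keep going:

$$|a_{n+2}| < \beta |a_{n+1}| < \beta^2 |a_n|.$$

What you find eventually is that you can go as far back as you want (down to $N$), and you just introduce a new $\beta$ each time. So what you could see is (by the same argument)

$$|a_{N+k}| < \beta^k |a_N| \quad \text{for } k \ge 1.$$

So let's start at $N$ and look at the tail: $\sum_{k=0}^{\infty} |a_{N+k}| \le |a_N| \sum_{k=0}^{\infty} \beta^k$, but this latter sum converges, thus bounding the tail. And this is enough to give us the convergence of our series.

- diverges if $\left|\frac{a_{n+1}}{a_n}\right| \ge 1$ for all $n \ge n_0$, where $n_0$ is some fixed positive integer.

Proof. For divergence, we can see that the terms do not go to 0: since $|a_{n+1}| \ge |a_n|$ for all $n \ge n_0$, we have $|a_n| \ge |a_{n_0}| > 0$ for all $n \ge n_0$.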
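The tail estimate in the convergence proof can be checked numerically. The sketch below assumes the example $a_n = n/2^n$, which is not from the lecture, together with the hand-picked values $\beta = 0.7$ and $N = 3$; the function names are hypothetical.

```python
BETA = 0.7   # assumed bound on the ratios, chosen by hand for this example
N = 3        # assumed index from which the ratio bound holds


def a(n):
    """Assumed example term a_n = n / 2**n for n >= 1."""
    return n / 2.0 ** n


def ratio(n):
    """The ratio a_{n+1} / a_n, which equals (n + 1) / (2n) here."""
    return a(n + 1) / a(n)


def tail(start, terms=200):
    """Numerical tail: a_start + a_{start+1} + ... over `terms` terms."""
    return sum(a(n) for n in range(start, start + terms))


def geometric_bound(start, beta):
    """The bound |a_start| * (1 + beta + beta**2 + ...) = |a_start| / (1 - beta)."""
    return a(start) / (1.0 - beta)


print(tail(N), geometric_bound(N, BETA))
```

The first printed number (the tail) should not exceed the second (the geometric bound), which is exactly the comparison the proof relies on.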

Power series

What are power series and what are some observations we can make about them?

-

Motivation: One of the most important series you will encounter is a power series.

-

Power series (definition): What is a power series? Well, a series is basically just a sum of a bunch of numbers. A power series might involve variables. Given a sequence $\{c_n\}$ of complex numbers, consider the series

$$\sum_{n=0}^{\infty} c_n z^n.$$

The above is a power series. It's a series of powers of $z$, and here you think of $z$ as some complex variable in $\mathbb{C}$.

-

Natural questions: Given a bunch of constants $c_n$, a natural question is to ask for what values of $z$ the power series converges. The nice answer to this question is that we can use our knowledge of convergence tests to answer this.

-

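Convergence of power series: If $\alpha = \limsup_{n\to\infty} \sqrt[n]{|c_n|}$, let $R = 1/\alpha$. Then $\sum c_n z^n$ converges if $|z| < R$, and diverges if $|z| > R$. The number $R$ is called the radius of convergence.

The surprise is that the set of all places where the series converges actually forms a disk (possibly together with some points on its boundary). It is a disk in the plane, centered at 0 in this case. It's amazing that it's a disk, but you can see where it comes from because the proof involves the root test. In this case, with $a_n = c_n z^n$, we have $\sqrt[n]{|a_n|} = |z| \sqrt[n]{|c_n|}$. What you're hoping is that the $\limsup$ of this stuff is less than 1 (we will apply the root test). So we'll write

$$\limsup_{n\to\infty} \sqrt[n]{|a_n|} = |z| \limsup_{n\to\infty} \sqrt[n]{|c_n|} = |z|\,\alpha = \frac{|z|}{R}.$$

Thus, here we're really looking at just comparing $|z|$ with $R$. That's where the $R$ comes from. We're just using the root test. This is the proof idea.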
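As a rough numerical illustration of the formula $R = 1/\alpha$, the sketch below approximates the $\limsup$ by a maximum over a late window of indices. That is only a crude proxy for the true $\limsup$ and not a proof; the function names and the default window are assumptions made for this example.

```python
def root_test_alpha(coeff, start=200, stop=400):
    """Crude stand-in for limsup |c_n|**(1/n): the max over start <= n < stop."""
    return max(abs(coeff(n)) ** (1.0 / n) for n in range(start, stop))


def radius_estimate(coeff, start=200, stop=400):
    """Estimate R = 1 / alpha, with R = infinity when alpha is 0."""
    alpha = root_test_alpha(coeff, start, stop)
    return float("inf") if alpha == 0 else 1.0 / alpha


# Assumed examples: c_n = 2**n should give R near 1/2,
# and c_n = 3**(-n) should give R near 3.
print(radius_estimate(lambda n: 2.0 ** n))
print(radius_estimate(lambda n: 3.0 ** (-n)))
```

For coefficients whose $n$-th roots converge slowly (for instance $c_n = n$), a finite window like this one only gives a coarse estimate, so it is best treated as a sanity check.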

Combining sequences

How might we combine sequences in a meaningful way?

-

Motivating question: Given two sequences $\{a_n\}$ and $\{b_n\}$, we may be interested in how to combine these sequences and study them. What might we be able to say about the sum of these sequences? Can we say anything meaningful about $\sum a_n b_n$?

-

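Summation by parts: Let

$$A_n = \sum_{k=0}^{n} a_k$$

for $n \ge 0$, and set $A_{-1} = 0$. Then, for $0 \le p \le q$,

$$\sum_{n=p}^{q} a_n b_n = \sum_{n=p}^{q-1} A_n (b_n - b_{n+1}) + A_q b_q - A_{p-1} b_p.$$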

How is this like integration by parts (which you've seen in early calculus classes)? What are some general features of this formula that remind you of integration by parts? Integration by parts says something loosely along the lines of

$$\int u\,dv = uv - \int v\,du.$$

Integrals are like sums. So if we want to integrate $\int u\,dv$ here, then what's playing the role of $u$ and $dv$ here, respectively? What's playing the role of the integral here? The sum, $\sum a_n b_n$. What's playing the role of $u$ and $dv$ here? We have $b_n$ playing the role of $u$, and if we let $A_n$ be the partial sums (as defined above), then the change in $A_n$ is the lowercase $a_n$ at every step.

So we basically have $u = b_n$ and $dv = a_n$. Then we have $v = A_n$ and $du = b_{n+1} - b_n$. So we almost get that

$$\int u\,dv = uv - \int v\,du$$

is basically

$$\sum a_n b_n = A_n b_n - \sum A_n (b_{n+1} - b_n).$$

Why do we have the two terms at the end of the summation formula? We have to evaluate at the endpoints. So we have

$$\sum_{n=p}^{q} a_n b_n = A_q b_q - A_{p-1} b_p - \sum_{n=p}^{q-1} A_n (b_{n+1} - b_n),$$

where the index $p-1$ and the upper limit $q-1$ are the places where things are slightly off. The upshot is that integration by parts and summation by parts are really the same concept. Showing why summation by parts holds for sequences actually gives you a visual for integration by parts in the discrete case. Down the road, we'll see that summation is actually integration with respect to a certain integrator. So summation can actually be thought of as a special case of integration, where you're integrating over a discrete set.
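The summation by parts identity $\sum_{n=p}^{q} a_n b_n = \sum_{n=p}^{q-1} A_n (b_n - b_{n+1}) + A_q b_q - A_{p-1} b_p$ is easy to sanity-check on small integer sequences. The following is a minimal sketch; the function names and the sample sequences are assumptions chosen only for illustration.

```python
def partial_sums(a):
    """Return [A_0, A_1, ...] where A_n = a[0] + ... + a[n]."""
    sums, total = [], 0
    for x in a:
        total += x
        sums.append(total)
    return sums


def direct_sum(a, b, p, q):
    """Left-hand side: sum of a[n] * b[n] for p <= n <= q."""
    return sum(a[n] * b[n] for n in range(p, q + 1))


def by_parts_sum(a, b, p, q):
    """Right-hand side of the summation by parts formula (A_{-1} is taken as 0)."""
    A = partial_sums(a)
    A_before_p = A[p - 1] if p >= 1 else 0
    middle = sum(A[n] * (b[n] - b[n + 1]) for n in range(p, q))
    return middle + A[q] * b[q] - A_before_p * b[p]


a_seq = [3, -1, 4, 1, -5, 9]   # arbitrary sample values
b_seq = [2, 7, 1, 8, 2, 8]     # arbitrary sample values
print(direct_sum(a_seq, b_seq, 1, 4), by_parts_sum(a_seq, b_seq, 1, 4))
```

Because the inputs are integers, the two sides agree exactly rather than only up to rounding.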

-

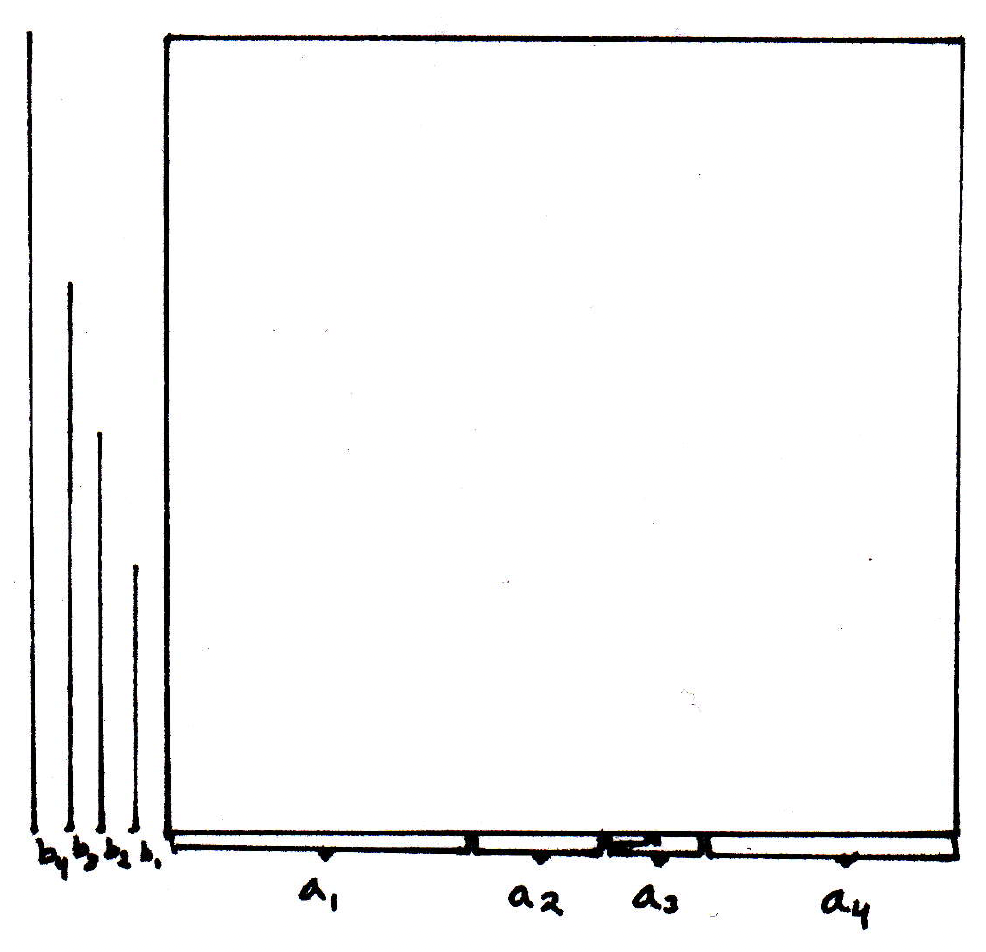

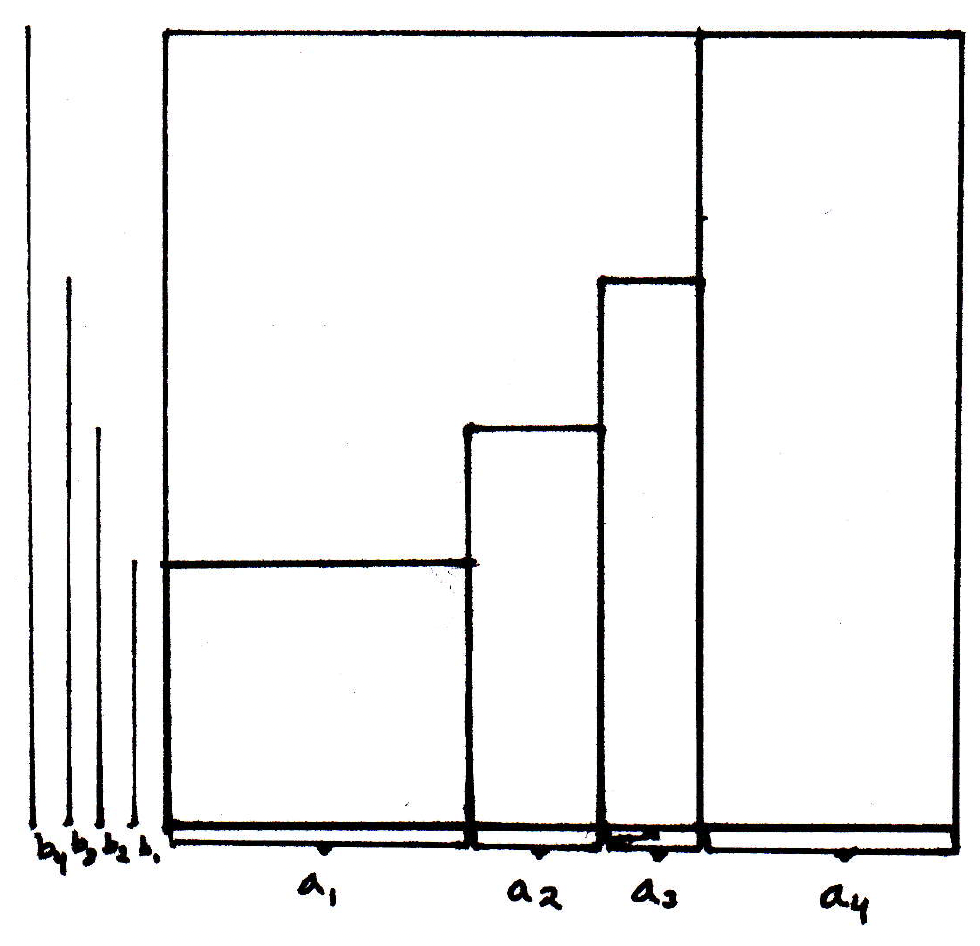

Summation by parts (proof idea): The algebraic proof gives no insight whatsoever. This is the geometric idea. Imagine a square that's broken up into little pieces (here we will just consider 4 breaks in both directions):

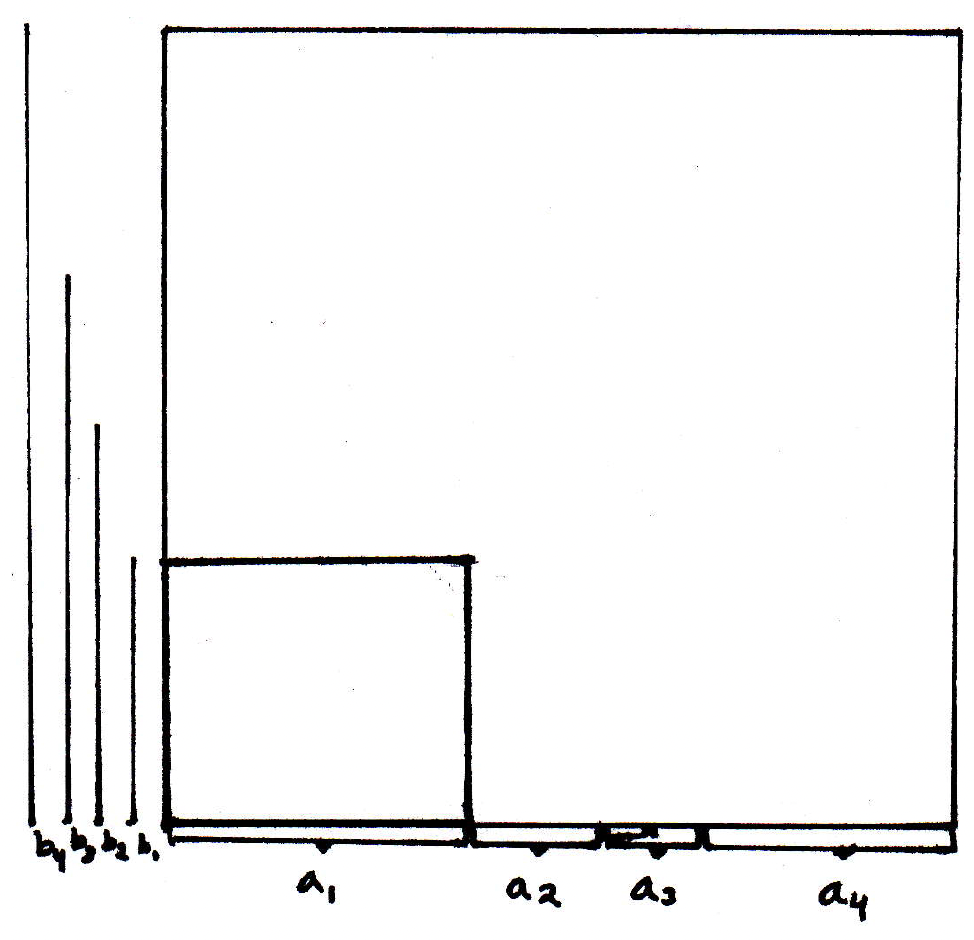

Then we can visualize $\sum a_n b_n$ in the following way: We get the following for the first summand (the areas are the rectangles):

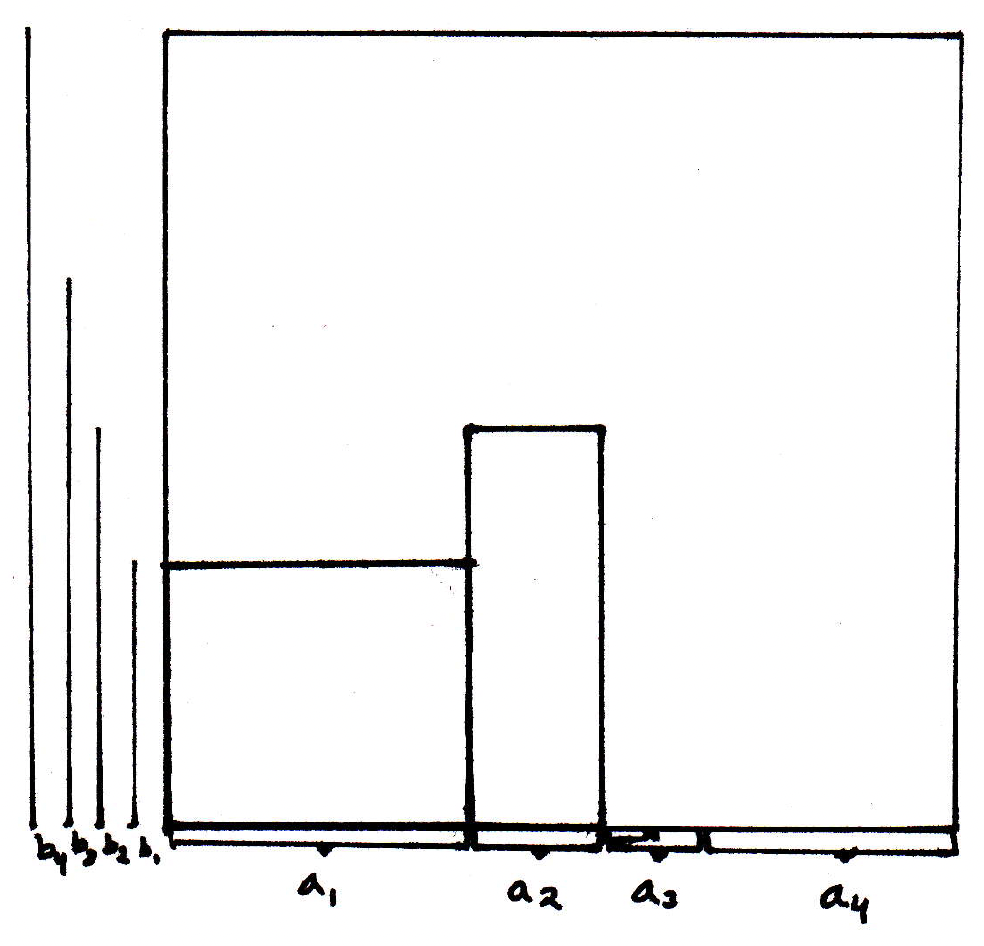

The second summand:

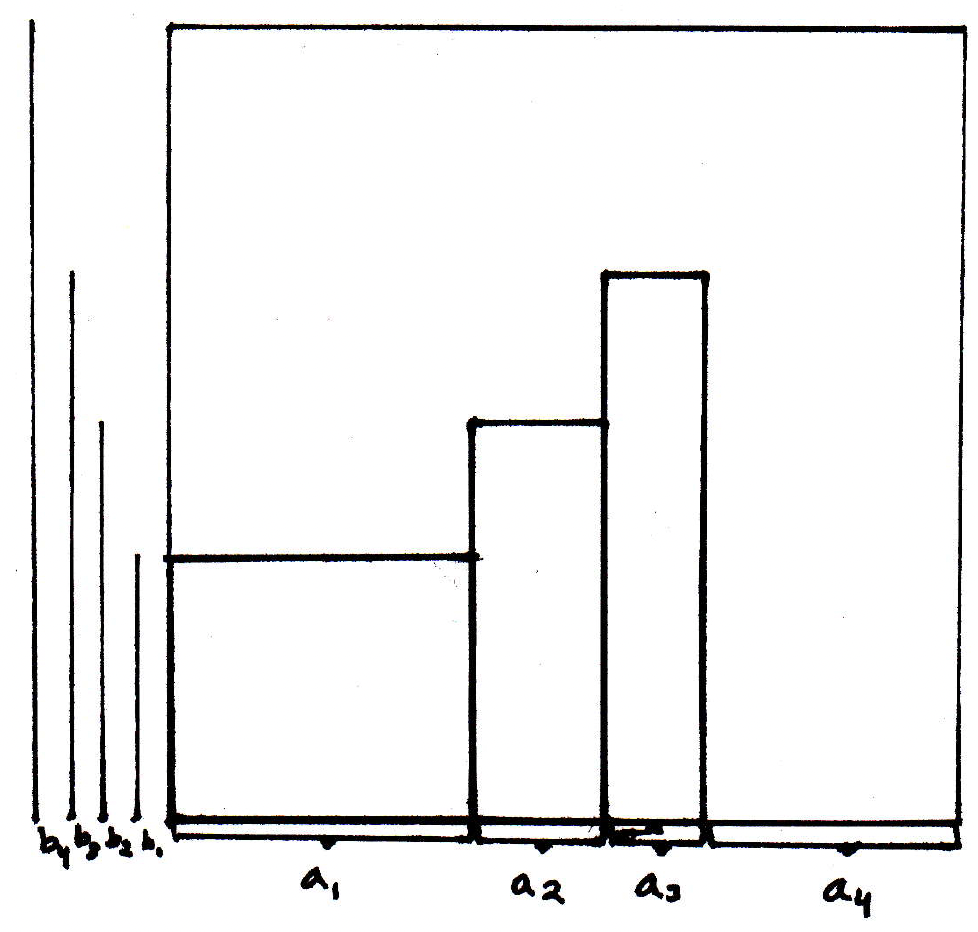

The third summand:

The fourth summand:

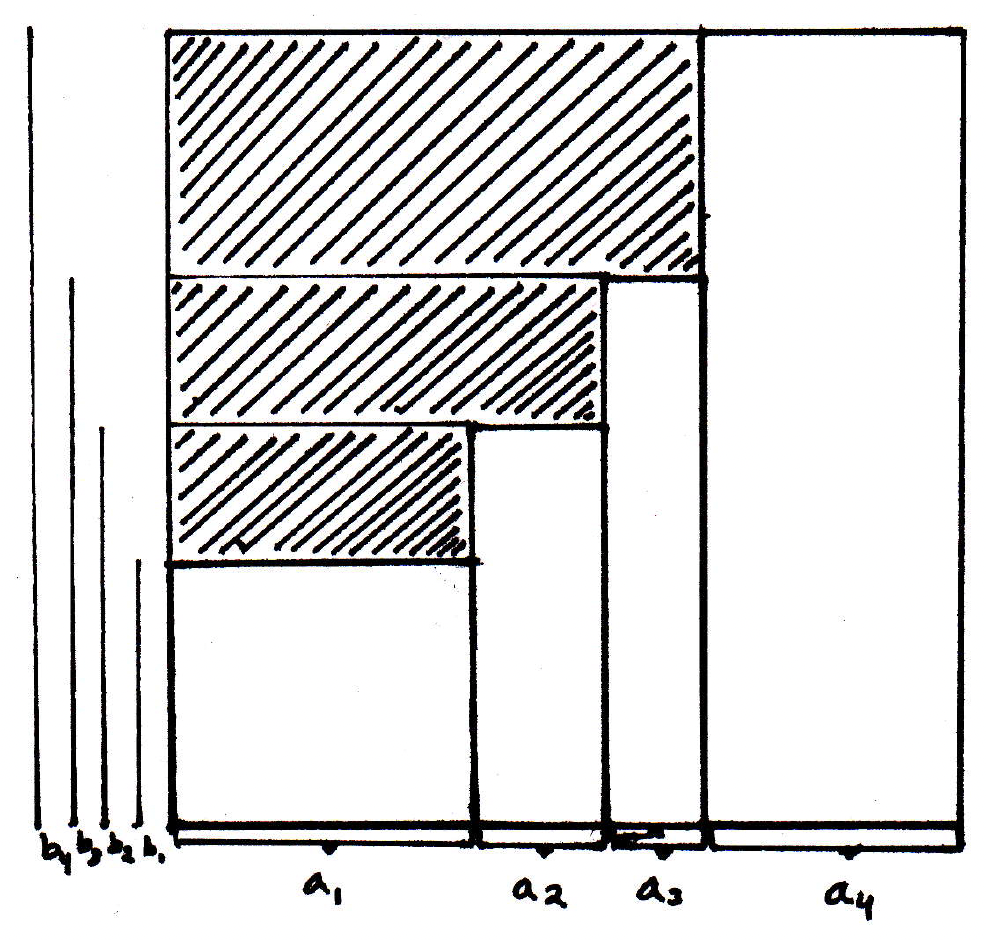

Now we can see the relationship with integration by parts. If $u = b_n$ and $dv = a_n$, then integration by parts says that to find the areas of the rectangles in question, we should take the whole area of the box and subtract out the sum of the areas of the dashed rectangles below:

Remember the formulation for integration by parts:

$$\int u\,dv = uv - \int v\,du.$$

We can see the relationship. For the algebraic proof, see [17]. This leads us to the following theorem.

-

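Theorem (see [17]): Suppose

- the partial sums $A_n$ of $\sum a_n$ form a bounded sequence;

- $b_0 \ge b_1 \ge b_2 \ge \cdots$;

- $\lim_{n\to\infty} b_n = 0$.

Then $\sum a_n b_n$ converges.

Basically this is saying that if the partial sums involved in $\sum a_n$ are bounded and $b_n$ decreases to 0, then we are in good shape.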

-

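Proof of theorem (idea): Suppose $|A_n| \le M$ for all $n$, $b_n \ge b_{n+1}$, and $b_n \to 0$. Fix $\varepsilon > 0$. Then there exists $N$ such that $b_N \le \varepsilon/(2M)$. Recalling

$$\sum_{n=p}^{q} a_n b_n = \sum_{n=p}^{q-1} A_n (b_n - b_{n+1}) + A_q b_q - A_{p-1} b_p,$$

we can bound this in a meaningful way for $N \le p \le q$:

$$\left|\sum_{n=p}^{q} a_n b_n\right| \le M \left(\sum_{n=p}^{q-1} (b_n - b_{n+1}) + b_q + b_p\right) = 2 M b_p \le 2 M b_N \le \varepsilon.$$

We've just bounded the tails by $\varepsilon$, which is what we need for the Cauchy criterion.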

-

Example: Suppose $|c_1| \ge |c_2| \ge |c_3| \ge \cdots$ and that the $c_n$ alternate in sign and go to zero. Then the claim is that $\sum c_n$ converges. This is the alternating series test. The proof follows from our proof for the theorem above with $a_n = (-1)^{n+1}$ (its partial sums are basically just 1s and 0s, so they are bounded) and $b_n = |c_n|$.
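The tail bound $\left|\sum_{n=p}^{q} a_n b_n\right| \le 2 M b_p$ from the proof idea can be observed on the alternating harmonic series. The sketch below assumes indexing from $n = 0$ with $a_n = (-1)^n$ and $b_n = 1/(n+1)$, so that $M = 1$ bounds the partial sums of $\sum a_n$; these choices and the function names are illustrative assumptions.

```python
M = 1   # the partial sums of a_n = (-1)**n are 1, 0, 1, 0, ..., so |A_n| <= 1


def a(n):
    """Assumed sign sequence a_n = (-1)**n for n >= 0."""
    return (-1) ** n


def b(n):
    """Assumed decreasing sequence b_n = 1 / (n + 1), which tends to 0."""
    return 1.0 / (n + 1)


def block_sum(p, q):
    """The block a_p b_p + ... + a_q b_q that the Cauchy criterion looks at."""
    return sum(a(n) * b(n) for n in range(p, q + 1))


def tail_bound(p):
    """The bound 2 * M * b_p obtained from summation by parts."""
    return 2 * M * b(p)


print(abs(block_sum(10, 50)), tail_bound(10))
```

Since `tail_bound(p)` goes to 0 as `p` grows, every late block of the series is small, which is the Cauchy criterion in action.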

-

Sums of series: We have $\sum a_n + \sum b_n = \sum (a_n + b_n)$, provided both series on the left converge.

-

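Products of series: It's not defined quite as you would think, namely $\sum a_n b_n$. Let's see if we can motivate why this might be the case and where the generally accepted definition comes from.

The motivation actually comes from power series. With power series we might have a series that starts off like

$$a_0 + a_1 z + a_2 z^2 + \cdots$$

and another one like

$$b_0 + b_1 z + b_2 z^2 + \cdots$$

If we consider the product

$$(a_0 + a_1 z + a_2 z^2 + \cdots)(b_0 + b_1 z + b_2 z^2 + \cdots),$$

how should one go about evaluating this? What would you get? What's the first term? We'd get

$$a_0 b_0 + (a_0 b_1 + a_1 b_0) z + (a_0 b_2 + a_1 b_1 + a_2 b_0) z^2 + \cdots$$

With this motivation, the following is how we define the product of two series: Given $\sum a_n$ and $\sum b_n$, we put

$$c_n = \sum_{k=0}^{n} a_k b_{n-k}$$

and we call $\sum c_n$ the product of the two given series.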
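The definition $c_n = \sum_{k=0}^{n} a_k b_{n-k}$ is just the rule for collecting the coefficient of $z^n$ when multiplying two power series. Here is a minimal sketch on finite lists of leading coefficients; the function name `cauchy_product` is a hypothetical helper.

```python
def cauchy_product(a, b):
    """Return c_0, ..., c_{m-1} with c_n = sum over k of a[k] * b[n - k].

    Only the first m = min(len(a), len(b)) terms are returned, since those
    are the ones fully determined by the coefficients supplied.
    """
    m = min(len(a), len(b))
    return [sum(a[k] * b[n - k] for k in range(n + 1)) for n in range(m)]


# (1 + 2z)(3 + 4z) = 3 + 10z + 8z^2
print(cauchy_product([1, 2, 0], [3, 4, 0]))

# (1 + z + z^2 + ...)^2 = 1 + 2z + 3z^2 + 4z^3 + ...
print(cauchy_product([1, 1, 1, 1], [1, 1, 1, 1]))
```

This is the same operation as multiplying polynomials and then truncating, which is why the definition fits power series so naturally.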

-

More motivation for product definition of series: Another reason the product of two series is defined the way it is and not what you might expect is that if

$$\sum a_n = A \quad \text{and} \quad \sum b_n = B,$$

then the termwise sum

$$\sum a_n b_n$$

will in general not converge to $AB$. So that would not be a good notion of a product. But the way we've defined our product of series does converge how you would want it to under certain conditions.

Here's the problem: The sum $\sum c_n$ may not converge even if $\sum a_n$ and $\sum b_n$ do, but if $\sum a_n$ and $\sum b_n$ converge absolutely to $A$ and $B$, respectively (we'll define what that means momentarily), then $\sum c_n$ converges to $AB$.
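A standard example of this failure, not taken from the lecture itself, is $a_n = b_n = (-1)^n/\sqrt{n+1}$ for $n \ge 0$. Each series converges by the alternating series test, yet the product terms satisfy $|c_n| \ge 2(n+1)/(n+2) \ge 1$, so $\sum c_n$ cannot converge. The sketch below checks that inequality numerically; the function names are hypothetical.

```python
import math


def a(n):
    """Assumed example: a_n = (-1)**n / sqrt(n + 1), a convergent alternating series."""
    return (-1) ** n / math.sqrt(n + 1)


def c(n):
    """Cauchy product term c_n = sum over k of a_k * a_{n-k} (series times itself)."""
    return sum(a(k) * a(n - k) for k in range(n + 1))


def lower_bound(n):
    """The bound 2(n + 1) / (n + 2), which comes from (k+1)(n-k+1) <= (n/2 + 1)**2."""
    return 2.0 * (n + 1) / (n + 2)


print([round(abs(c(n)), 4) for n in range(6)])
```

Since the terms $c_n$ stay at least 1 in absolute value, they do not go to zero, which rules out convergence of the product series.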

Absolute convergence

What is absolute convergence and why is it important?

-

Idea: Absolute convergence is basically a kind of convergence where you look at absolute values.

-

Absolute convergence (definition): The series $\sum a_n$ converges absolutely if $\sum |a_n|$ converges.

-

Example involving absolute convergence: Consider the series

$$\sum_{n=1}^{\infty} \frac{(-1)^{n+1}}{n} = 1 - \frac{1}{2} + \frac{1}{3} - \frac{1}{4} + \cdots$$

Does this converge? Yes, its terms alternate in sign, decrease in absolute value, and go to 0. Does it converge absolutely? No, since its absolute series is going to be the harmonic series.
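A quick numerical look makes the contrast visible. The sketch below compares partial sums of $1 - \frac{1}{2} + \frac{1}{3} - \cdots$ with partial sums of the harmonic series; the function names are hypothetical, and the comparison with $\ln 2$ uses the known value of the alternating harmonic series.

```python
import math


def alternating_partial_sum(count):
    """Partial sum 1 - 1/2 + 1/3 - ... with `count` terms."""
    return sum((-1) ** (n + 1) / n for n in range(1, count + 1))


def harmonic_partial_sum(count):
    """Partial sum 1 + 1/2 + 1/3 + ... with `count` terms."""
    return sum(1.0 / n for n in range(1, count + 1))


for count in (10, 1000, 100000):
    print(count, alternating_partial_sum(count), harmonic_partial_sum(count))

print(math.log(2))
```

The first column of sums settles down near $\ln 2$, while the second keeps growing (roughly like $\ln n$), which is the numerical face of conditional versus absolute convergence.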

-

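Theorem: absolute convergence implies convergence: If $\sum a_n$ converges absolutely, then $\sum a_n$ converges.

Why? If we're estimating the sum, then the Cauchy criterion tells us to look at the tail $\left|\sum_{k=n}^{m} a_k\right|$, but that's bounded by $\sum_{k=n}^{m} |a_k|$. Thus, we see that

$$\left|\sum_{k=n}^{m} a_k\right| \le \sum_{k=n}^{m} |a_k|,$$

but $\sum_{k=n}^{m} |a_k|$ is the tail of the absolutely convergent series, and thus we know $\sum_{k=n}^{m} |a_k|$ is small by the Cauchy criterion for $\sum |a_n|$. So if we can make $\sum_{k=n}^{m} |a_k|$ small, then use the same $N$ for a given $\varepsilon$ to make $\left|\sum_{k=n}^{m} a_k\right|$ small, and then you're in good shape.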

Rearranging series

What are rearrangements of series and why are they important?

-

Motivation: We've seen one place where absolute convergence might be important, namely when trying to look at the product of two series. Another situation where things might go really bad if a series does not converge absolutely has to do with rearranging the terms of a series. So if you take a series, then you might naturally ask what happens if you sum things in a different order. So suppose $\sum a_n = A$. If we rearrange the terms, must the series still converge? No. If it does converge, then must it still converge to $A$? The answer to this is a big, resounding no as well. It will be informative to look at an example of where things can go bad.

Consider the series mentioned previously, namely

$$1 - \frac{1}{2} + \frac{1}{3} - \frac{1}{4} + \cdots$$

But guess what? Not only would a rearrangement not necessarily converge to the same sum, but we could get it to converge to any number you like. Seriously? Pick any real number $x$ you like. This is a result due to Riemann (not the -result): If $\sum a_n$ converges but not absolutely, then a rearrangement can have any limit you like. (It's really even worse than this; it can have any $\liminf$ or $\limsup$ that you like.) So it may not converge, but basically you can get it to do whatever you want. If $\sum a_n$ does converge absolutely, however, then all rearrangements have the same limit. This is an amazing fact; indeed, it is one of the main reasons why we care about absolute convergence.

So let's see how we can get

$$1 - \frac{1}{2} + \frac{1}{3} - \frac{1}{4} + \cdots$$

to converge to a chosen target $x$ by using a suitable rearrangement. Take a look at all of the odd terms. They are all positive. What can we say about the series of odd terms? It diverges. What about the even terms? They are all negative and their series diverges as well. So the sums of the positive terms and of the negative terms get as large positive or as large negative as you like. The basic idea is this: We can start by summing the positive terms until we slightly overshoot $x$, and then start summing the negative terms until we slightly overshoot in the other direction. And we keep on doing this. Since the terms go to 0, the overshoots shrink, so we get a series that converges to $x$.

This is an amazing fact about rearrangements. If your series is not absolutely convergent, then you can use rearrangements to get it to do whatever you want.