25 - Taylor's theorem

Where we left off last time

What are some applications of the derivative? Where did we leave off last time and where were we going?

-

Recap: We have been talking about the derivative. Last time we defined the derivative. We said what it means for a function to be differentiable, which basically means that a certain limit exists. The derivative is the limit of a difference quotient, where the difference quotient represents the slope of a secant line. We say that a function is differentiable at a point if the limiting slope of the secant lines actually exists. We also talked about a very important theorem, arguably the most important theorem when it comes to derivatives, because it connects the values of the function to the values of the derivative: the mean value theorem. Today we'll see why it's important and talk about a generalization known as Taylor's theorem. (Taylor's theorem is really just a generalization of the mean value theorem.) That will be the first part of this lesson. In the second part we will discuss sequences of functions, which is a different topic.

-

Taylor's theorem (motivation): Suppose you know something about a function and what it is doing at a particular point. The idea of Taylor's theorem is, well, if you know what's happening at a point, then you know what's happening near that point, provided the function is differentiable, twice differentiable, or has a number of derivatives. In the simplest case, suppose we know what a function $f$ is doing at a point $\alpha$, and we want to approximate what's happening near $\alpha$, say at a point $\beta$. That is, given that we know something about $f(\alpha)$, suppose we want to approximate $f(\beta)$, where $\beta$ is near $\alpha$.

Well, the mean value theorem tells us something about $f(\beta)$ if we know $f(\alpha)$ and the derivative nearby. So the mean value theorem basically says I can figure out what $f(\beta)$ is if I know $f(\alpha)$ and I know something about the derivative nearby:

$$f(\beta) = f(\alpha) + f'(x)(\beta - \alpha).$$

Observe how this is a restatement of the mean value theorem. And the thing to notice here is that there is a mysterious point $x$, and all we know about $x$ is that it lives between $\alpha$ and $\beta$. That is, the equation holds for some $x$ strictly between $\alpha$ and $\beta$. So we have no idea what point $x$ is. The way to think about this is that we will know $f(\beta)$ if we know $f(\alpha)$ and $f'(x)(\beta - \alpha)$, a term we have little control over and know little about. What we do know is that this term is something like an error term: it tells us how far off $f(\alpha)$ is from $f(\beta)$. So we can think of the formulation like so:

$$f(\beta) = f(\alpha) + \underbrace{f'(x)(\beta - \alpha)}_{\text{error}}.$$

This "error term" is often not precisely known. But it has something to do with the derivative nearby. So if we can bound the derivative nearby, then maybe we can actually say something about that error. That is one way of thinking about the mean value theorem.

This actually suggests that we might be able to do better with our approximation. We don't know what $f'$ is doing at $x$. We don't even know where $x$ is. But what if we knew what the derivative was doing at $\alpha$? Then maybe we could do better. This suggests that maybe

$$f(\beta) = f(\alpha) + f'(\alpha)(\beta - \alpha) + \text{error},$$

where this "error" term is hopefully smaller than the error term before. So this suggests there might be a theorem like this: can we continue this process so that the error terms get smaller and smaller? And the answer is yes. If our function were twice differentiable, then we get $\frac{f''(x)}{2}(\beta - \alpha)^2$ as our error term, where this $x$ is not the same as the $x$ in the first statement but just some point between $\alpha$ and $\beta$. Notice that this error term is a little bit better than what we originally had because if $\beta - \alpha$ is really small, then $(\beta - \alpha)^2$ is even smaller. But once again, we have no control over where $x$ is. We just know that $x$ lies between $\alpha$ and $\beta$.

So this is sort of the direction Taylor's theorem goes in. It gives us some idea of how good this particular error term is. What's the moral of this story? The moral is that if we know a function value $f(\alpha)$ and its derivative $f'(\alpha)$, and we know its second derivative in some neighborhood of $\alpha$, then we will have a good handle on what $f(\beta)$ is.

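Of course, we could continue the process above. We could say the error term in

$$f(\beta) = f(\alpha) + f'(\alpha)(\beta - \alpha) + \frac{f''(x)}{2}(\beta - \alpha)^2$$

is kind of like a second derivative. So maybe if we throw in a term like $\frac{f''(\alpha)}{2}(\beta - \alpha)^2$, where $x$ is replaced by $\alpha$ (i.e., we would have $f(\beta) = f(\alpha) + f'(\alpha)(\beta - \alpha) + \frac{f''(\alpha)}{2}(\beta - \alpha)^2 + \text{error}$), then we will get a still better error term. That's really the direction that Taylor's theorem goes in.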
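A quick numerical check of this idea, as a minimal Python sketch: we assume $f = \exp$ and $\alpha = 0$ purely for illustration (every derivative of $\exp$ at 0 equals 1), write $\beta = \alpha + h$, and compare the errors of the constant, linear, and quadratic approximations. If the pattern above is right, the error of the order-$k$ approximation should behave like $h^{k+1}/(k+1)!$ for small $h$. The function name `taylor_error` is our own.

```python
import math


def taylor_error(order, h):
    """Error |f(alpha + h) - approximation| for f = exp at alpha = 0.

    The approximation keeps the terms f^(k)(0) h^k / k! for k = 0..order;
    for exp every f^(k)(0) equals 1 (an illustrative choice of f).
    """
    approx = sum(h**k / math.factorial(k) for k in range(order + 1))
    return abs(math.exp(h) - approx)


for h in (0.1, 0.01, 0.001):
    errors = [taylor_error(order, h) for order in range(3)]
    # Dividing by h**(order + 1) should give roughly 1, 1/2, 1/6.
    scaled = [e / h ** (order + 1) for order, e in enumerate(errors)]
    print(f"h={h}: errors={errors}, scaled={scaled}")
```

Each extra derivative term buys one more power of $h$, which is exactly the "error terms get smaller and smaller" phenomenon described above.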

-

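Taylor's theorem (statement): Generally, define

$$P(t) = \sum_{k=0}^{n-1} \frac{f^{(k)}(\alpha)}{k!}(t - \alpha)^k = f(\alpha) + f'(\alpha)(t - \alpha) + \frac{f''(\alpha)}{2!}(t - \alpha)^2 + \cdots + \frac{f^{(n-1)}(\alpha)}{(n-1)!}(t - \alpha)^{n-1}.$$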

Here's what we should notice: where do the $t$'s appear in the expression above? The first term is just a constant with respect to $t$. There's a $(t - \alpha)$ in the next term, a $(t - \alpha)^2$ in the following term, and so on. So we see that $P$ is a polynomial in $t$ (or really a polynomial in $t - \alpha$) and its degree is at most $n - 1$. What is the dependence on $\alpha$? To determine the coefficients, note that $\alpha$ is involved with every single one of them. So $P$ is a polynomial, and it is often called a Taylor polynomial.
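To make the definition concrete, here is a minimal Python sketch that evaluates $P(t)$ from a list of derivative values $[f(\alpha), f'(\alpha), \ldots, f^{(n-1)}(\alpha)]$. The function name `taylor_poly` and the choice $f = \sin$ at $\alpha = 0$ in the usage lines are our own, for illustration only.

```python
import math


def taylor_poly(derivs, alpha, t):
    """Evaluate P(t) = sum_{k=0}^{n-1} f^(k)(alpha) / k! * (t - alpha)**k.

    `derivs` holds [f(alpha), f'(alpha), ..., f^(n-1)(alpha)], so the
    polynomial has degree at most n - 1 = len(derivs) - 1.
    """
    return sum(d / math.factorial(k) * (t - alpha) ** k for k, d in enumerate(derivs))


# Illustration: f = sin at alpha = 0 with n = 4; the derivatives of sin at 0
# are 0, 1, 0, -1, so P(t) = t - t**3 / 6.
sin_derivs_at_0 = [0.0, 1.0, 0.0, -1.0]
print(taylor_poly(sin_derivs_at_0, 0.0, 0.1), math.sin(0.1))
```

Note that only the values of $f$ and its derivatives at the single point $\alpha$ enter the computation.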

-

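Taylor's theorem (statement): Let $\alpha$ and $\beta$ be distinct points of $[a, b]$. If $f^{(n-1)}$ is continuous on $[a, b]$ and $f^{(n)}(t)$ exists for every $t \in (a, b)$, then $P(\beta)$ approximates $f(\beta)$, and

$$f(\beta) = P(\beta) + \frac{f^{(n)}(x)}{n!}(\beta - \alpha)^n,$$

where $x$ is some point strictly between $\alpha$ and $\beta$.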
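One practical use of the theorem is an error bound: if $|f^{(n)}| \le K$ between $\alpha$ and $\beta$, then $|f(\beta) - P(\beta)| \le K|\beta - \alpha|^n/n!$. The minimal Python sketch below checks this for $f = \sin$, where $K = 1$ works for every $n$; the helper names, the base point $\alpha = 0.5$, and the sample points are illustrative assumptions.

```python
import math


def sin_derivs(alpha, n):
    """[sin(alpha), sin'(alpha), ..., sin^(n-1)(alpha)]; derivatives of sin repeat with period 4."""
    cycle = [math.sin(alpha), math.cos(alpha), -math.sin(alpha), -math.cos(alpha)]
    return [cycle[k % 4] for k in range(n)]


def remainder_within_bound(alpha, beta, n):
    """Check |sin(beta) - P(beta)| <= |beta - alpha|**n / n!.

    By Taylor's theorem the difference equals sin^(n)(x) / n! * (beta - alpha)**n
    for some x between alpha and beta, and |sin^(n)| <= 1 everywhere.
    """
    p_beta = sum(d / math.factorial(k) * (beta - alpha) ** k
                 for k, d in enumerate(sin_derivs(alpha, n)))
    bound = abs(beta - alpha) ** n / math.factorial(n)
    return abs(math.sin(beta) - p_beta) <= bound + 1e-12  # slack for rounding error


print(all(remainder_within_bound(0.5, 0.5 + j / 10, n)
          for n in range(1, 8) for j in range(-20, 21) if j != 0))
```

We never need to know where $x$ is; a bound on $f^{(n)}$ over the whole interval is enough, which is the point made earlier about bounding the derivative nearby.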

Note that the error term here looks a lot like the terms of the Taylor polynomial except that the $n$th derivative is evaluated somewhere between $\alpha$ and $\beta$. What does this theorem say in the case that $n = 1$? Can we prove this theorem in the case where $n = 1$? Yes, what is it? (Note that $P(t) = f(\alpha)$ when $n = 1$.) Well, the function $P$ is just saying "tell me what's going on at $\alpha$," and add what? We'd add $f'(x)(\beta - \alpha)$. That is, for $n = 1$, we should have

$$f(\beta) = f(\alpha) + f'(x)(\beta - \alpha).$$

Is that true for some $x$ between $\alpha$ and $\beta$? Yes, by the mean value theorem. When $n = 1$, this literally is the mean value theorem. So Taylor's theorem really is just a generalization of the mean value theorem.

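Now, $P$ is the "best" approximation of order $n - 1$ at $\alpha$. What do we mean by "best" here? Well, what we mean is that we've constructed a polynomial that has the same value and the same derivatives as $f$ up to the $(n-1)$th; that is, $P^{(k)}$ has the same value as $f^{(k)}$ at $\alpha$ for $k = 0, 1, \ldots, n - 1$. That is, we have the following correspondence (at $\alpha$):

$$P(\alpha) = f(\alpha), \quad P'(\alpha) = f'(\alpha), \quad \ldots, \quad P^{(n-1)}(\alpha) = f^{(n-1)}(\alpha).$$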

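So the first derivative of the polynomial and the first derivative of $f$ both have the same value at $\alpha$. Let's just convince ourselves of that. If we look at the polynomial

$$P(t) = f(\alpha) + f'(\alpha)(t - \alpha) + \frac{f''(\alpha)}{2!}(t - \alpha)^2 + \cdots + \frac{f^{(n-1)}(\alpha)}{(n-1)!}(t - \alpha)^{n-1}$$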

at $t = \alpha$, then we see that we have

$$P(\alpha) = f(\alpha)$$

because all of the other terms vanish at $t = \alpha$.

What happens if we take the first derivative of $P$? What happens to the $f(\alpha)$ term? It disappears because it is a constant with respect to $t$. What happens when we take the derivative of $f'(\alpha)(t - \alpha)$ with respect to $t$? We just get $f'(\alpha)$. And the rest of the terms have positive powers of $(t - \alpha)$ in them and hence evaluate to zero at $t = \alpha$. So $P'(\alpha) = f'(\alpha)$.

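Similarly, if we look at $P''$, then we have the following:

$$P''(t) = \frac{2 \cdot 1}{2!} f''(\alpha) + \frac{3 \cdot 2}{3!} f'''(\alpha)(t - \alpha) + \cdots, \qquad \text{so} \qquad P''(\alpha) = f''(\alpha).$$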

Now it should be clear where the factorials are coming from. They are needed to cancel the powers that come down during differentiation. So if you want the $k$th derivatives to match up, then you will need the $k!$ underneath. So how do we prove this?
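The factorial bookkeeping can be checked exactly with rational arithmetic. In this minimal sketch a polynomial is stored as its list of coefficients in powers of $(t - \alpha)$ (a representation we choose for convenience), so evaluating at $t = \alpha$ just reads off the constant term. The derivative values are made-up numbers and the helper names are hypothetical; the point is that the coefficients $f^{(k)}(\alpha)/k!$ reproduce them exactly, while dropping the $k!$ makes the $k$th derivative off by a factor of $k!$.

```python
from fractions import Fraction
from math import factorial


def differentiate(coeffs):
    """Differentiate sum_j coeffs[j] * (t - alpha)**j with respect to t."""
    return [j * c for j, c in enumerate(coeffs)][1:]


def derivative_at_alpha(coeffs, k):
    """k-th derivative of the polynomial evaluated at t = alpha (its constant term)."""
    for _ in range(k):
        coeffs = differentiate(coeffs)
    return coeffs[0] if coeffs else 0


# Hypothetical values of f(alpha), f'(alpha), ..., f''''(alpha).
derivs = [Fraction(3), Fraction(-2), Fraction(7), Fraction(5), Fraction(-11)]
taylor_coeffs = [d / factorial(k) for k, d in enumerate(derivs)]  # f^(k)(alpha) / k!
naive_coeffs = list(derivs)                                        # no factorials

for k in range(len(derivs)):
    print(k, derivative_at_alpha(taylor_coeffs, k), derivative_at_alpha(naive_coeffs, k))
```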

-

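Taylor's theorem (proof): For some number $M$, the statement

$$f(\beta) = P(\beta) + M(\beta - \alpha)^n \qquad (1)$$

is true (for a suitable choice of $M$; since $\beta \neq \alpha$, we can simply solve for $M$). Let $g(t) = f(t) - P(t)$, where we hope $g$ is small. Is $g(\beta)$ equal to zero? Not necessarily. So let's amend our definition of $g$ using the $M$-value above we claimed existed:

$$g(t) = f(t) - P(t) - M(t - \alpha)^n.$$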

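Why would we do this? What are we hoping for? Where is this headed? Well, it sure would be nice if we could show that $g^{(n)}$ were 0 somewhere and that $M$ were the coefficient $\frac{f^{(n)}(x)}{n!}$. What happens if we take the $n$th derivative of $g$? Since $P$ is a polynomial of degree at most $n - 1$, its $n$th derivative is 0, and the $n$th derivative of $M(t - \alpha)^n$ is $n!\,M$. So we'll have

$$g^{(n)}(t) = f^{(n)}(t) - n!\,M.$$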

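We claim it is enough to show that $g^{(n)}(x) = 0$ for some $x$ between $\alpha$ and $\beta$. Why would that be enough for what we are interested in? If we could show that

$$g^{(n)}(x) = f^{(n)}(x) - n!\,M$$

was zero somewhere, then that would make $M = \frac{f^{(n)}(x)}{n!}$. That would establish that $M$ is, in fact, the $n$th derivative of $f$ at some $x$ divided by $n!$; that is, we would have

$$f(\beta) = P(\beta) + \frac{f^{(n)}(x)}{n!}(\beta - \alpha)^n.$$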

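Let's check a few things about $g$. We would have the following:

$$g(\alpha) = g'(\alpha) = \cdots = g^{(n-1)}(\alpha) = 0,$$

because $P^{(k)}(\alpha) = f^{(k)}(\alpha)$ for $k = 0, 1, \ldots, n - 1$, and every derivative of $M(t - \alpha)^n$ of order less than $n$ vanishes at $t = \alpha$.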

So, in fact, what we've done is constructed a function that is very flat at $\alpha$. Also, note what happens when we look at $g(\beta)$. What happens? We have

$$g(\beta) = f(\beta) - P(\beta) - M(\beta - \alpha)^n = 0.$$

Why? This is due to (1): the definition of $M$ made this true.

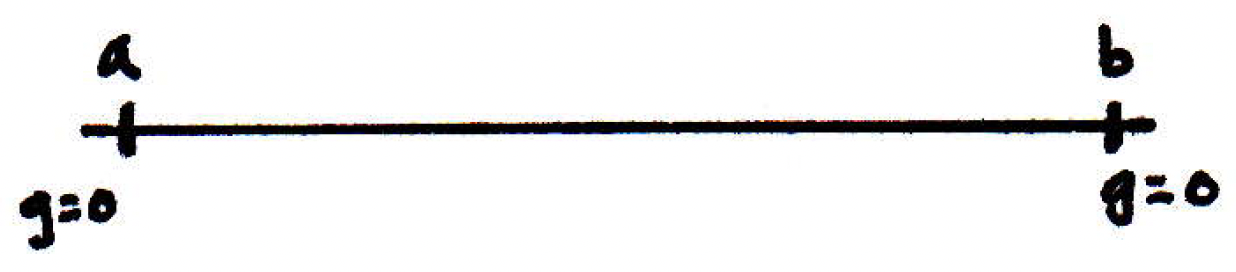

Here's what we have, something that looks like this (we know $g$ is 0 at both $\alpha$ and $\beta$):

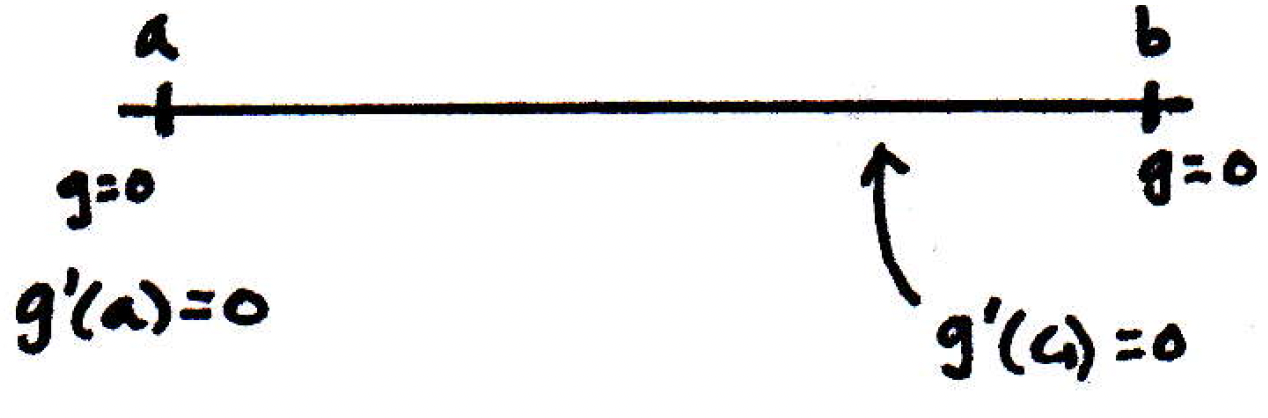

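So if we have a differentiable function that is 0 at both ends, then either it is constant or it has a maximum or a minimum strictly in between, and its derivative vanishes there (this is Rolle's theorem, a special case of the mean value theorem). Therefore, there's a point $x_1$ between $\alpha$ and $\beta$ with $g'(x_1) = 0$. We don't know where that point is. Maybe it's here: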

But what do we know about $g'(\alpha)$? It's also zero:

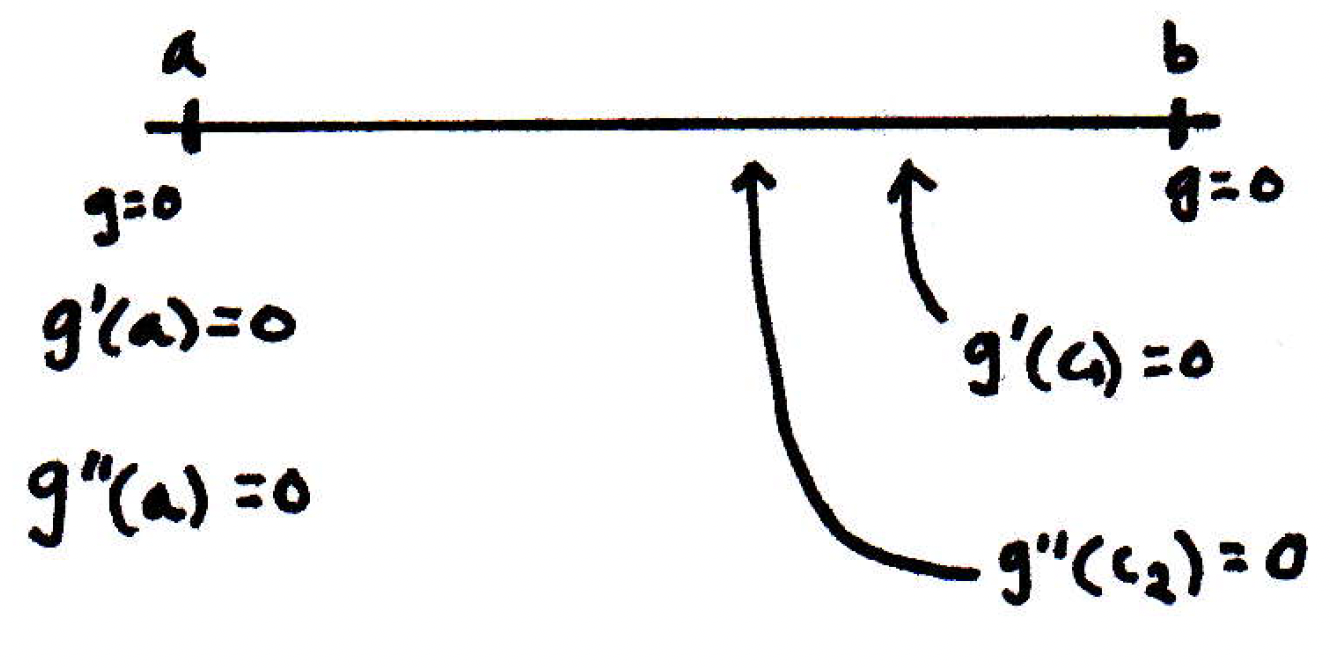

So since $g'$ is zero at both $\alpha$ and $x_1$, there must be a point $x_2$ between $\alpha$ and $x_1$ where the second derivative is zero:

$$g''(x_2) = 0.$$

And we know that $g''(\alpha)$ is also zero:

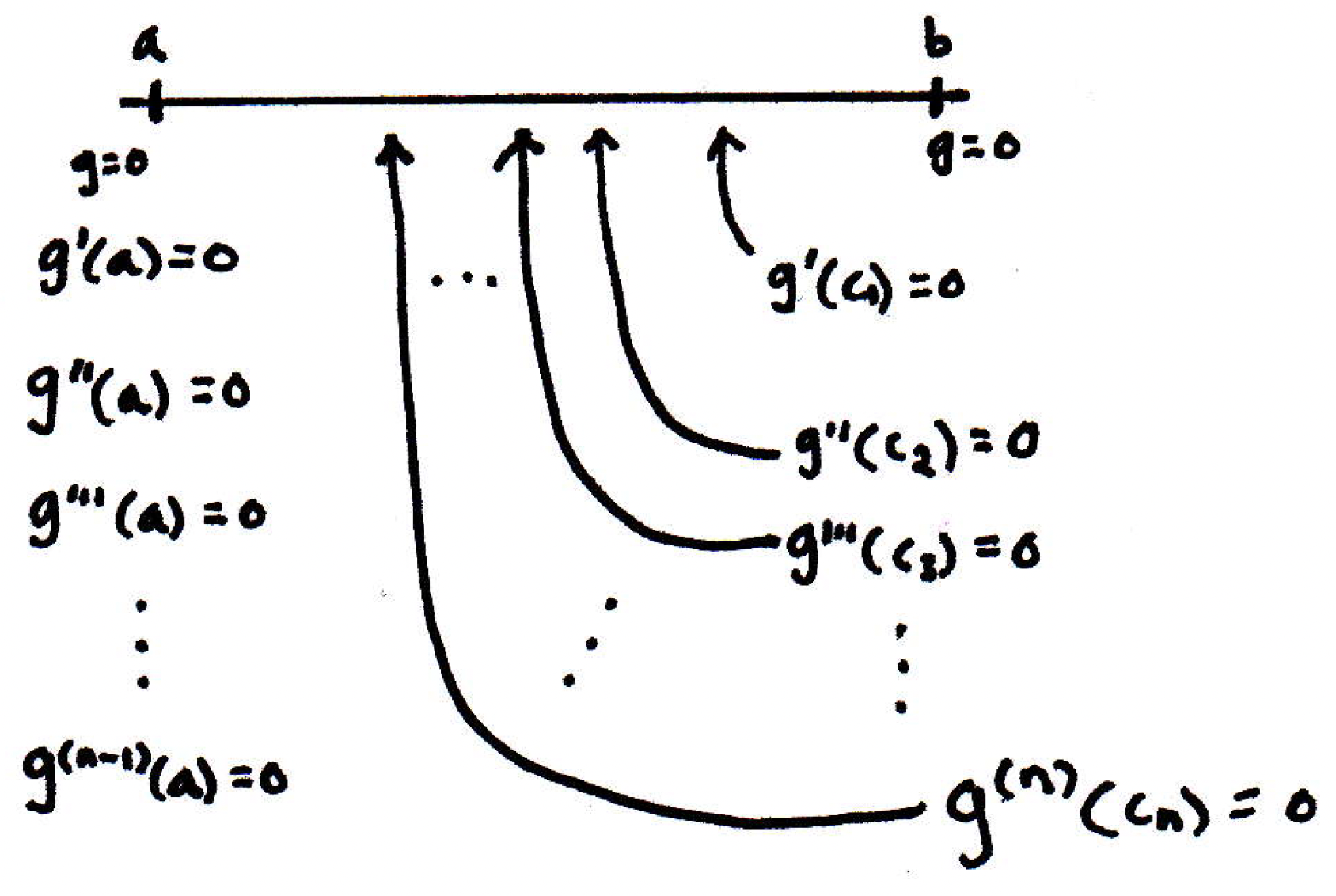

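We can keep going. How far can we keep going? Well, the very last step applies the mean value theorem to $g^{(n-1)}$ on the interval between $\alpha$ and $x_{n-1}$ (allowed because $f^{(n-1)}$ is continuous and $f^{(n)}$ exists), and we conclude that there is a number $x_n$ (this will be our chosen $x$) such that $g^{(n)}(x_n) = 0$, where $x_n$ lies between $\alpha$ and $x_{n-1}$, and hence between $\alpha$ and $\beta$:

$$g'(x_1) = g''(x_2) = \cdots = g^{(n)}(x_n) = 0.$$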

Is that what we wanted to show? Yes, this is what we wanted to show. What is the upshot of all of this? Why does that show that $M = \frac{f^{(n)}(x)}{n!}$? Since $0 = g^{(n)}(x_n) = f^{(n)}(x_n) - n!\,M$, we get $M = \frac{f^{(n)}(x_n)}{n!}$. We can plug this back into (1), and now (1) is true with $x_n$ playing the role of the $x$ in Taylor's theorem. If you want to know what $f(\beta)$ is in relation to $P(\beta)$, then that's where (1) finishes the argument: with $x = x_n$ in (1) we get the desired result.

We should note that we used the mean value theorem several times in the argument above. We were basically bootstrapping down the derivatives through a clever choice of function.
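The proof tells us where to look for $x$: solve $g^{(n)}(x) = f^{(n)}(x) - n!\,M = 0$. As a minimal sketch, assuming $f = \exp$ on $[\alpha, \beta] = [0, 1]$ (our choice), we compute the number $M$ defined by $f(\beta) = P(\beta) + M(\beta - \alpha)^n$ and then locate a zero of $g^{(n)}$ by bisection. Bisection is a swapped-in numerical technique, not part of the proof; it relies on $g^{(n)}$ being continuous with a sign change on $[\alpha, \beta]$ and on $\alpha < \beta$, which hold here. For $n = 1$ the point found should be the mean value theorem point $\ln(e - 1)$. The function name is hypothetical.

```python
import math


def taylor_point_for_exp(n, alpha=0.0, beta=1.0, tol=1e-12):
    """Find x between alpha and beta (assumes alpha < beta) with
    exp(beta) = P(beta) + exp(x) / n! * (beta - alpha)**n,
    following the proof: define M by (1), then solve g^(n)(x) = 0.
    """
    h = beta - alpha
    # P(beta) for f = exp: every derivative of exp at alpha equals exp(alpha).
    p_beta = sum(math.exp(alpha) * h**k / math.factorial(k) for k in range(n))
    m = (math.exp(beta) - p_beta) / h**n  # the number M from (1)

    def g_n(x):
        # g^(n)(x) = f^(n)(x) - n! M, since the n-th derivative of P is 0.
        return math.exp(x) - math.factorial(n) * m

    lo, hi = alpha, beta
    # Bisection needs a sign change of g^(n) on [alpha, beta]; for exp it holds,
    # but this is an extra assumption, not part of Taylor's theorem.
    assert g_n(lo) * g_n(hi) < 0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if g_n(lo) * g_n(mid) <= 0:
            hi = mid
        else:
            lo = mid
    return (lo + hi) / 2


for n in range(1, 6):
    print(n, taylor_point_for_exp(n))
```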

Sequences of functions

What's special about sequences of functions? What are they and what are they useful for?

-

Preview: We've spent a great deal of time building up a lot of machinery in the first 5 chapters of Rudin that has allowed us to think about the real numbers carefully. We also built up machinery that allowed us to think about functions carefully (what it means for a function to be continuous, differentiable, etc.). What we are going to do now is point towards some ideas that will soon be encountered. One of the big ideas is that it's not just the real numbers or $\mathbb{R}^k$ that we're interested in. We built up all that machinery for metric spaces. What's it useful for? One of the big places where it's used is in thinking about functions living in their own metric space.

-

Sequences of functions: This is actually one of the main motivations of analysis. Here's a question: We've said what it means for points to converge, but what does it mean for a sequence of functions to converge? Suppose we have a sequence

$$f_1, f_2, f_3, \ldots$$

What does it mean for a sequence of functions to converge, where we are thinking of these functions as real-valued functions? What would it really mean to say a sequence of functions converges? One way to answer this question is with the notion of pointwise convergence. Another is uniform convergence.

-

Informal aside about pointwise and uniform convergence: Pointwise convergence means that at every point the sequence of functions has its own speed of convergence; that is, the speed can be very fast at some points and extremely slow at others. So imagine, for instance, the sequence of functions

$$f_n(x) = \frac{x}{n} \qquad (x \in \mathbb{R}).$$

Think about how slowly this sequence tends to zero at points farther and farther out. Uniform convergence, on the other hand, means there is an overall speed of convergence, and one can check uniform convergence by considering the "infimum of speeds over all points." If it doesn't vanish, then the sequence is uniformly convergent.

-

More formal aside about pointwise and uniform convergence: It may first help to unpack the definition of limit in pointwise convergence and compare it to that of uniform convergence. The following definition for pointwise convergence is in [17]:

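Pointwise convergence (definition). Suppose $\{f_n\}$, $n = 1, 2, 3, \ldots$, is a sequence of functions defined on a set $E$, and suppose that the sequence of numbers $\{f_n(x)\}$ converges for every $x \in E$. We can then define a function $f$ by

$$f(x) = \lim_{n \to \infty} f_n(x) \qquad (x \in E).$$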

If this is the case, then we say that "$\{f_n\}$ converges to $f$ pointwise on $E$."

The following definition for uniform convergence of sequences of functions is in [17]:

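Uniform convergence for sequences of functions (definition). We say that a sequence of functions $\{f_n\}$, $n = 1, 2, 3, \ldots$, converges uniformly on $E$ to a function $f$ if for every $\varepsilon > 0$ there is an integer $N$ such that $n \geq N$ implies

$$|f_n(x) - f(x)| \leq \varepsilon$$

for all $x \in E$.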

Rudin (in [17]) makes the following note about the difference between pointwise and uniform convergence:

Difference between pointwise and uniform convergence. It is clear that every uniformly convergent sequence is pointwise convergent. Quite explicitly, the difference between the two concepts is this: If $\{f_n\}$ converges pointwise on $E$, then there exists a function $f$ such that, for every $\varepsilon > 0$, and for every $x \in E$, there is an integer $N$, depending on $\varepsilon$ AND on $x$, such that $|f_n(x) - f(x)| \leq \varepsilon$ holds if $n \geq N$; if $\{f_n\}$ converges uniformly on $E$, it is possible, for each $\varepsilon > 0$, to find ONE integer $N$ which will do for ALL $x \in E$.

Hence, pointwise convergence means that for each $x$ and $\varepsilon$ we can find an $N$ such that $|f_n(x) - f(x)| \leq \varepsilon$ for all $n \geq N$. Here $N$ is allowed to depend both on $\varepsilon$ and on $x$.

In uniform convergence the requirement is strengthened. Here for each $\varepsilon$ you need to be able to find an $N$ such that $|f_n(x) - f(x)| \leq \varepsilon$ for all $n \geq N$ and for all $x$ in the domain of the function. In other words, $N$ can depend on $\varepsilon$ but not on $x$.

The latter is a stronger condition, as noted in Rudin, because if you have only pointwise convergence, then it may be the case that some $\varepsilon$ will require arbitrarily large $N$ for some $x$-values.

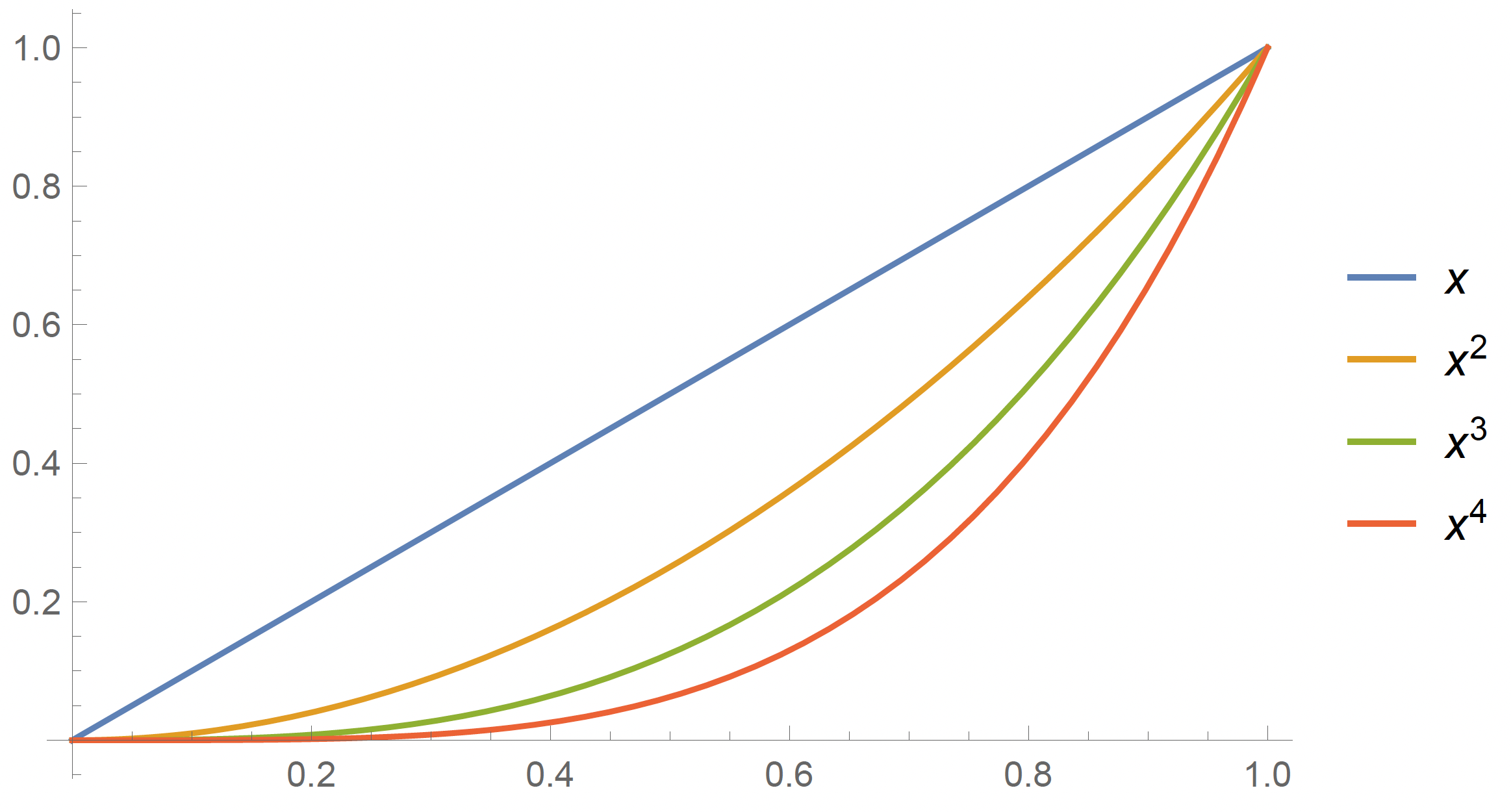

For example, the functions $f_n(x) = x/n$ converge pointwise to the zero function on $\mathbb{R}$, but do not converge uniformly. For example, if we choose $\varepsilon = 1$, then the convergence condition $|x/n| < \varepsilon$ boils down to $|x| < n$. For each $x$, we can find an $N$ easily, but there's no $N$ that works simultaneously for every $x$.
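To see how the required $N$ depends on $x$, take the sequence $f_n(x) = x/n$ on $\mathbb{R}$, which converges pointwise to 0 (an example chosen here for illustration). For a given $x$ and $\varepsilon$, the smallest $N$ with $|x/n| < \varepsilon$ for all $n \ge N$ is $\lfloor |x|/\varepsilon \rfloor + 1$. The hypothetical helper below computes it, and the loop confirms against the definition that this $N$ works while $N - 1$ does not.

```python
import math


def pointwise_N(x, eps):
    """Smallest N with |x/n - 0| < eps for every n >= N, where f_n(x) = x/n.

    |x|/n < eps  <=>  n > |x|/eps, so N = floor(|x|/eps) + 1.
    """
    return math.floor(abs(x) / eps) + 1


eps = 0.5
for x in (0.3, 2.5, 10.0, 123.4, 10_000.0):
    N = pointwise_N(x, eps)
    # N works (|x|/N < eps, and |x|/n only shrinks for larger n) ...
    assert abs(x) / N < eps
    # ... and N - 1 does not (unless N = 1), so N really is the smallest.
    assert N == 1 or abs(x) / (N - 1) >= eps
    print(f"x = {x}: N = {N}")
```

Since this $N$ grows without bound as $|x|$ grows, no single $N$ works for every $x \in \mathbb{R}$, which is exactly why the convergence is pointwise but not uniform.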

-

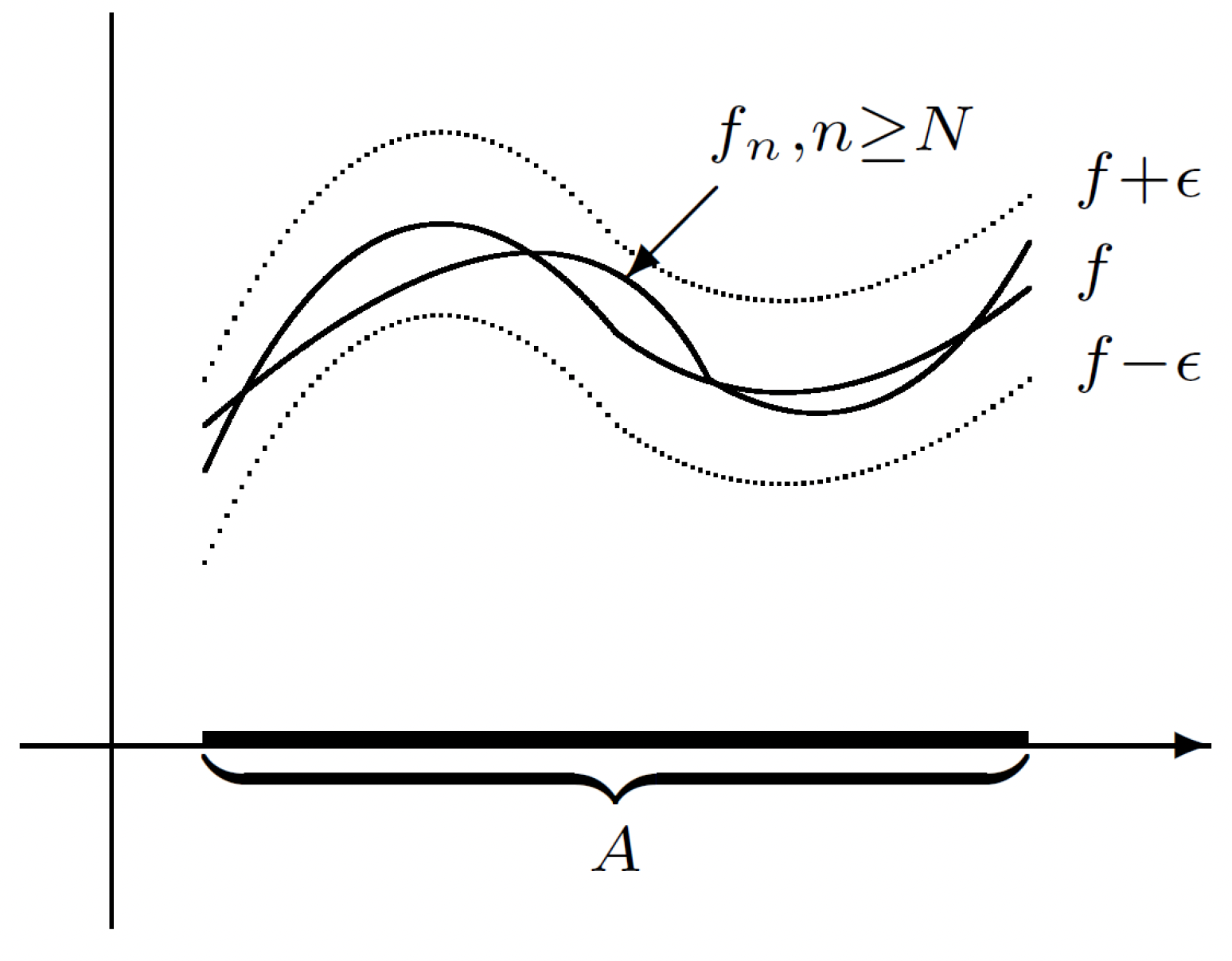

Illustration of uniform and pointwise convergence: Consider the following picture:

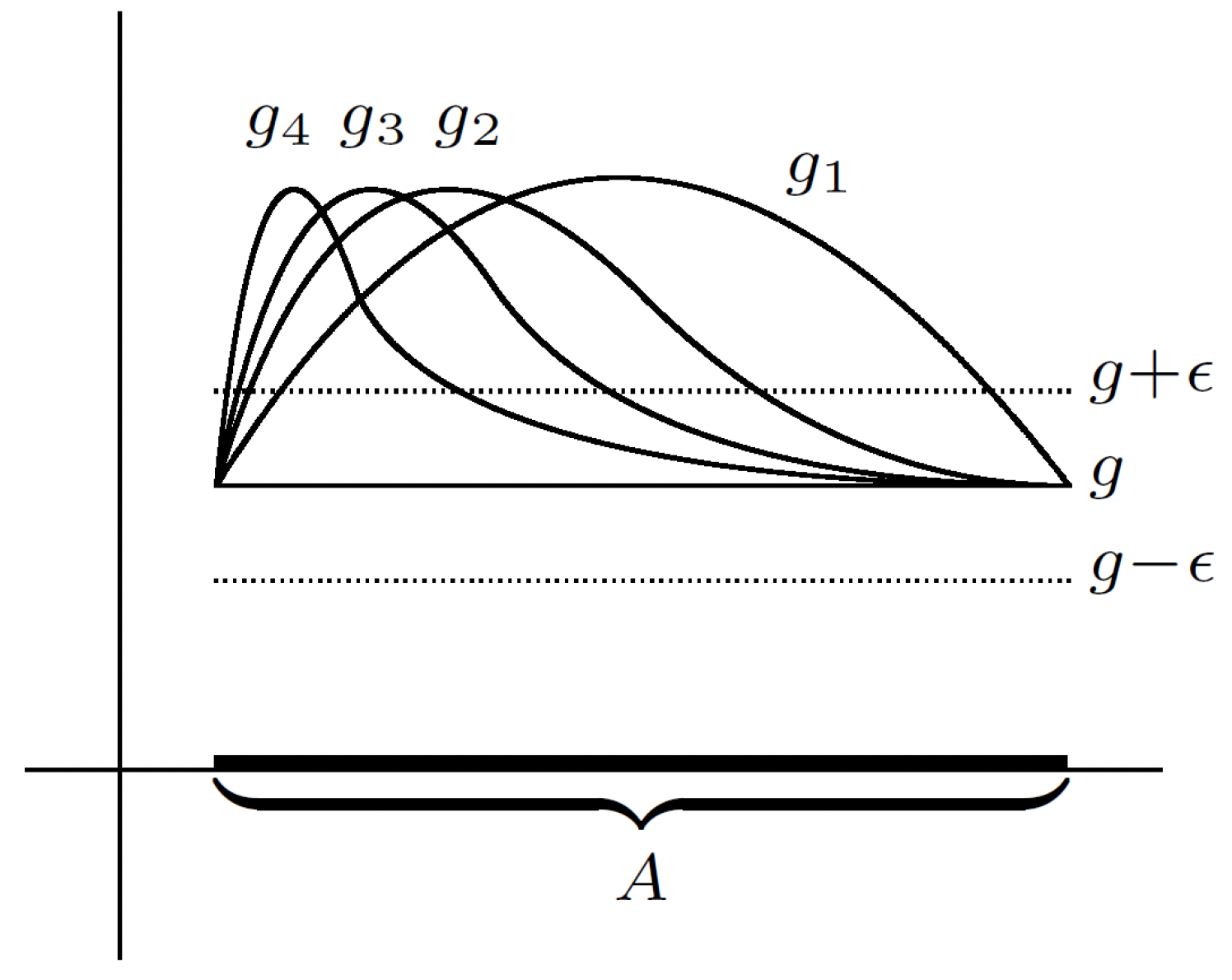

Above we have $f_n \to f$ uniformly on $E$. Now consider the following picture:

Above we now have $f_n \to f$ pointwise but not uniformly. The difference should be fairly clear.

-

Returning to Su's lecture (concerning pointwise convergence): Fix $x$, and ask yourself whether or not the sequence $\{f_n(x)\}$, that is, $f_n$ evaluated at this particular point, converges. If it does for every $x$, then we say that $\{f_n\}$ converges pointwise, and we write the so-called pointwise limit as $f(x) = \lim_{n \to \infty} f_n(x)$. (Note that $\{f_n(x)\}$ is a sequence of numbers since we are evaluating the functions at a point.)

-

Example of a pointwise convergent sequence: Consider the following sequence of functions: $f_n(x) = x/n$. What is the pointwise limit of this sequence? Does this converge pointwise? Yes, to the zero function. So we can write $\lim_{n \to \infty} f_n(x) = 0$ for every $x$.

-

Another example: Consider the sequence $f_n(x) = x^n$ on $[0, 1]$. Does this converge pointwise? We see that $f_1(x) = x$, $f_2(x) = x^2$, $f_3(x) = x^3$, etc. We'd have something that looks like the following for the first few functions:

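So $f_n(1)$ stays at 1 for every $n$, while $f_n(x)$ goes to 0 everywhere else on $[0, 1]$. Interesting. So

$$\lim_{n \to \infty} f_n(x) = \begin{cases} 0 & \text{if } 0 \leq x < 1, \\ 1 & \text{if } x = 1. \end{cases}$$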
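A small Python sketch of this example, with helper names of our own: for every $n$ there is a point $x_n = (1/2)^{1/n} < 1$ where $f_n(x_n) = 1/2$ but the limit is 0, so the gap between $f_n$ and its pointwise limit never drops below $1/2$, no matter how large $n$ is.

```python
def f_n(n, x):
    """The n-th function of the sequence, f_n(x) = x**n on [0, 1]."""
    return x**n


def pointwise_limit(x):
    """Pointwise limit of x**n on [0, 1]: 0 for 0 <= x < 1 and 1 at x = 1."""
    return 1.0 if x == 1 else 0.0


def witness(n):
    """A point x_n in [0, 1) where f_n(x_n) = 1/2 while the limit is 0 there.

    This shows sup_{x in [0,1]} |f_n(x) - f(x)| >= 1/2 for every n.
    """
    return 0.5 ** (1.0 / n)


for n in (1, 10, 100, 1000):
    x = witness(n)
    print(n, x, f_n(n, x), pointwise_limit(x))
```

The witness points creep toward 1 as $n$ grows, which is where the convergence is slowest.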

-

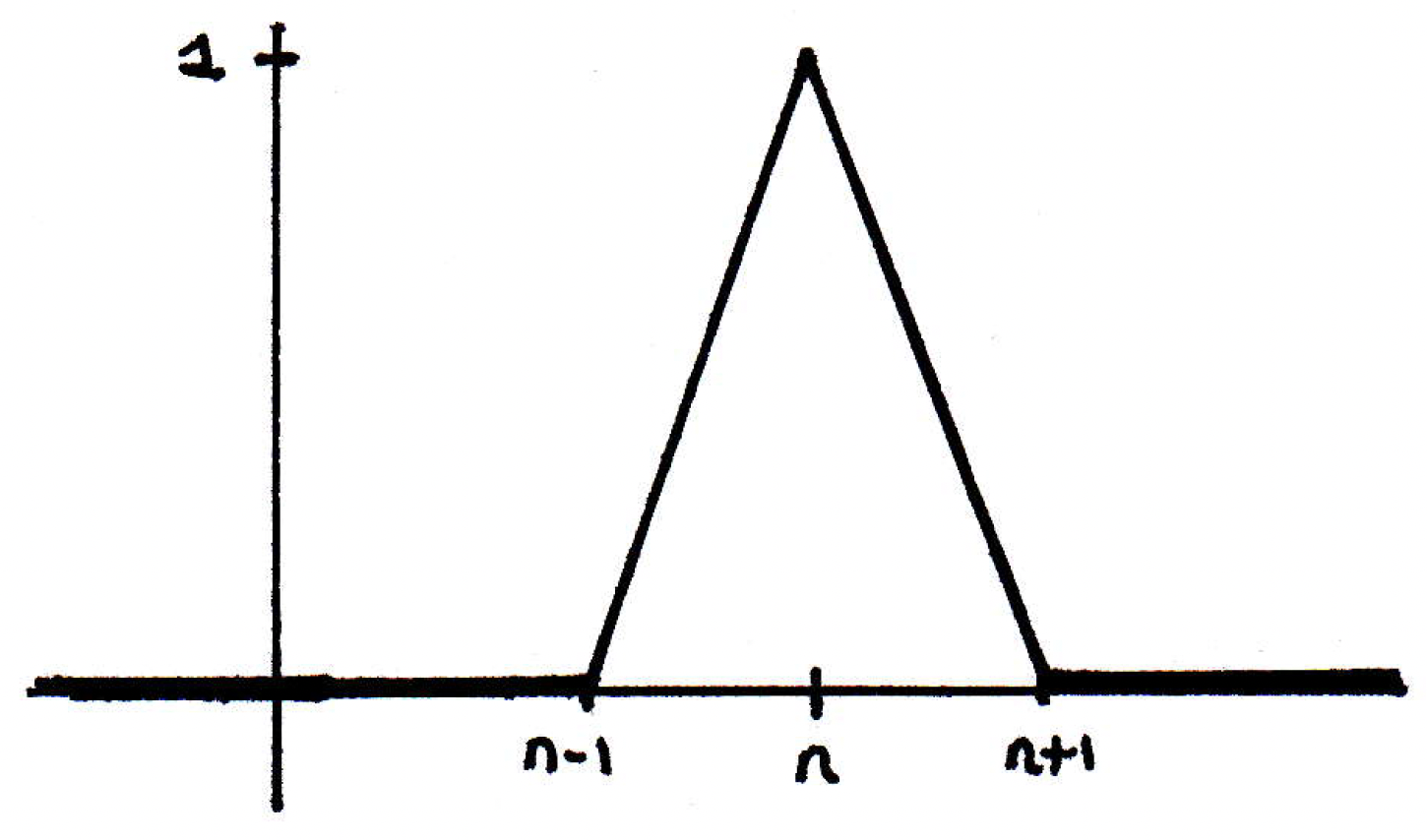

Another example: Consider the following function:

So this function has a single spike of height 1 living between two consecutive integers, and as $n$ increases the spike moves further and further to the right: $f_1$ has its spike in one unit interval, $f_2$ in the next, and so on. We would have $f_n \to 0$ pointwise. Fascinating. This is an interesting notion of convergence. Is it the best notion? Is it the right notion?

-

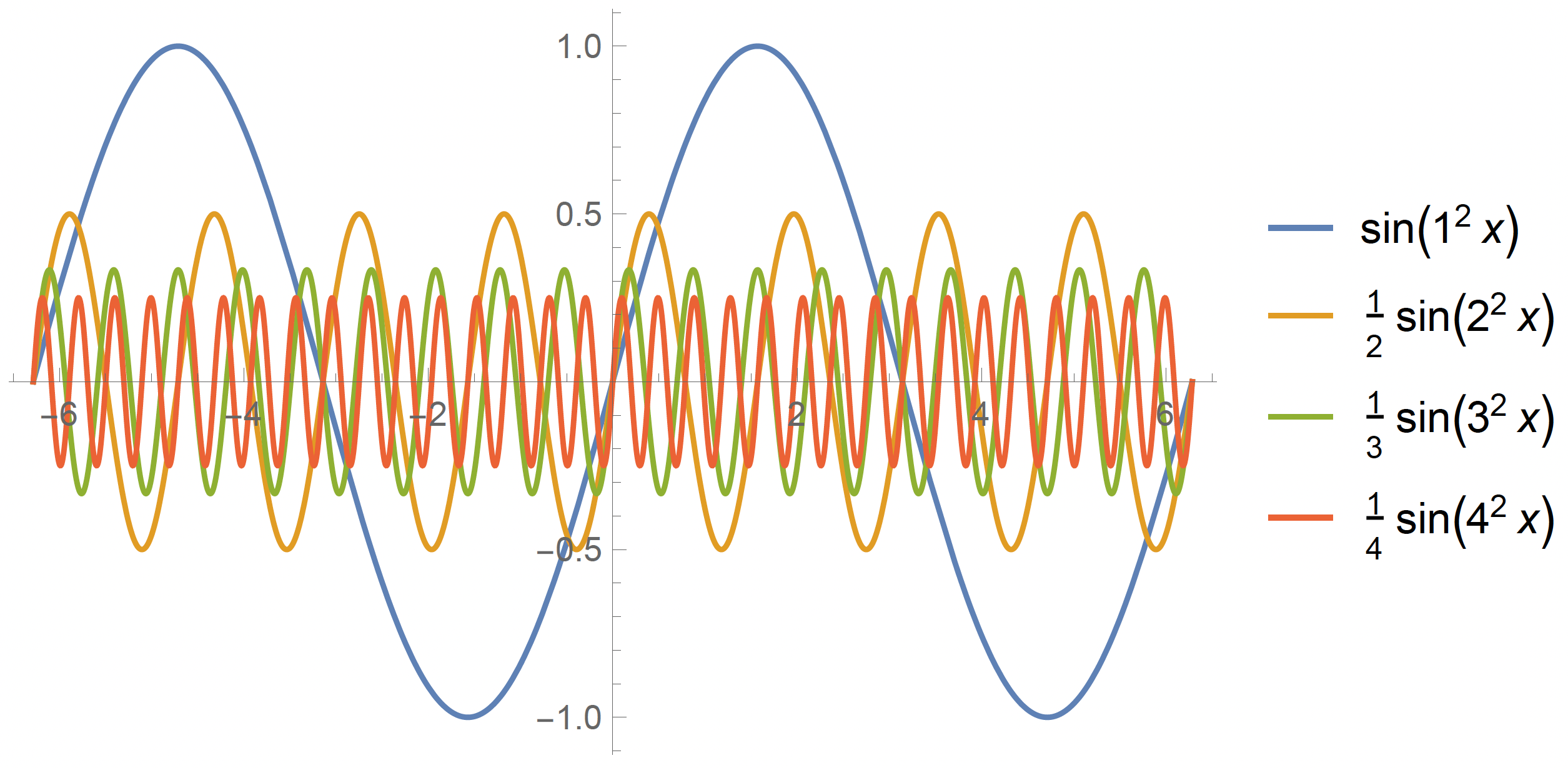

Another example: How about $f_n(x) = \frac{\sin(nx)}{\sqrt{n}}$? We'd have something that looks like the following for the first few terms of the sequence:

What does this converge to, if anything? It turns out that

$$\lim_{n \to \infty} f_n(x) = 0 \quad \text{for every } x,$$

since $|f_n(x)| \leq 1/\sqrt{n}$.

Don't be fooled! Not all sequences of functions converge to 0.
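A quick numerical look, assuming the sequence in question is $f_n(x) = \sin(nx)/\sqrt{n}$ (the standard example from Rudin's Chapter 7). The functions themselves shrink toward 0, since $\sup_x |f_n(x)| = 1/\sqrt{n}$, but their exact derivatives $f_n'(x) = \sqrt{n}\cos(nx)$ do not settle down: at $x = 0$ they equal $\sqrt{n}$. The grid used for the supremum is our own choice and only gives a lower bound.

```python
import math


def f_n(n, x):
    """Assumed example sequence: f_n(x) = sin(n x) / sqrt(n)."""
    return math.sin(n * x) / math.sqrt(n)


def f_n_prime(n, x):
    """Its exact derivative: sqrt(n) * cos(n x)."""
    return math.sqrt(n) * math.cos(n * x)


def grid_sup(n, samples=10_000):
    """Grid estimate of sup |f_n| on [0, 2 pi]; a lower bound for the true sup, 1/sqrt(n)."""
    return max(abs(f_n(n, 2 * math.pi * j / samples)) for j in range(samples + 1))


for n in (1, 4, 16, 64, 256):
    # The functions shrink toward 0 uniformly, but the slopes at 0 blow up.
    print(n, grid_sup(n), 1 / math.sqrt(n), f_n_prime(n, 0.0))
```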

-

Reflection: What can we learn from the examples above? They exhibit some very interesting behavior. Here's the kind of question we've been dealing with when you have sequences: What properties are preserved by the limiting operation for functions? What properties are preserved by pointwise limits? For example, is it true that the pointwise limit of a sequence of continuous functions is continuous? No. Why? We saw this debunked with the example where we considered $f_n(x) = x^n$ on $[0, 1]$.

What about derivatives? If we take the derivative of a limit, is it the limit of the derivatives? A very good example of this is in physics, where you have an infinite sum, you're asked to take its derivative, and you do it term by term. Isn't an infinite sum like a limit? A limit of partial sums? So are derivatives preserved by limits? No. In fact, with $f_n(x) = \frac{\sin(nx)}{\sqrt{n}}$ we see the derivatives $f_n'(x) = \sqrt{n}\cos(nx)$ are starting to behave very wildly (for instance, $f_n'(0) = \sqrt{n} \to \infty$), while the derivative of the limit is 0. So this is not true in general.

What about integrals? So think about areas. Are they preserved by pointwise limits? No. In fact, the example with the spike is a counterexample to this idea.

Right now things do not look very nice. Indeed, some of the most desirable properties, namely continuity, differentiability, and integrability, are not preserved by pointwise limits. So we need something stronger to help us. Maybe it will resolve some of our issues here; maybe not all of them, but some. (Much of this is discussed in the next analysis course.) Let's look at $\sup_x |f_n(x) - f(x)|$. So the $\sup$ for the spike function would be what? It would be 1. What about the $\sup$ of $|x/n|$ over the entire domain $\mathbb{R}$? Yeah, infinite. We don't like that. If we restricted the domain to a bounded interval, then the supremum would be finite. Let's make a definition based on this quantity that may be of some use.

-

Uniform convergence: We should first diligently note that uniform convergence and uniform continuity are completely different concepts. But the word "uniform" is supposed to make us think of what? Everybody's wearing the same uniform. So what is behaving uniformly here?

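We write $f_n \rightrightarrows f$ on $E$ (i.e., $f_n$ converges uniformly to $f$ on $E$) if for all $\varepsilon > 0$ there exists $N$ such that $n \geq N$ implies $\sup_{x \in E} |f_n(x) - f(x)| < \varepsilon$.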

How is this like or unlike the notion of convergence that we have previously encountered? What's uniform about the idea? What's different about this definition compared to that of pointwise limits, which also asks you to take a limit of a sequence? The idea here is that because we are looking at the supremum of a difference, and we are demanding that it be less than $\varepsilon$, we're going to have "ribbon convergence." You can draw an $\varepsilon$-ribbon about $f$, and eventually $f_n$ stays within the ribbon. What's uniform is that the same $N$ works for every $x$.

-

Dealing with uniform convergence: Would we say $f_n(x) = x/n$ converges uniformly on $\mathbb{R}$? No, these functions are not all living in the $\varepsilon$-ribbon eventually. In fact, every single function eventually leaves the ribbon. What about the sequence $f_n(x) = x^n$ for $x \in [0, 1]$? Does this converge uniformly? No, you'll still have problems no matter how far you go, where the functions do not lie completely inside the ribbon. What about the spiky function? Also not uniformly convergent. (Choose any $\varepsilon < 1$.) What about the sequence $f_n(x) = \frac{\sin(nx)}{\sqrt{n}}$? This does converge uniformly, since $\sup_x |f_n(x)| \leq 1/\sqrt{n} \to 0$. Yet its derivatives still misbehave, so not all of our problems will be solved with ribbon (uniform) convergence. But hopefully some of them will be.

-

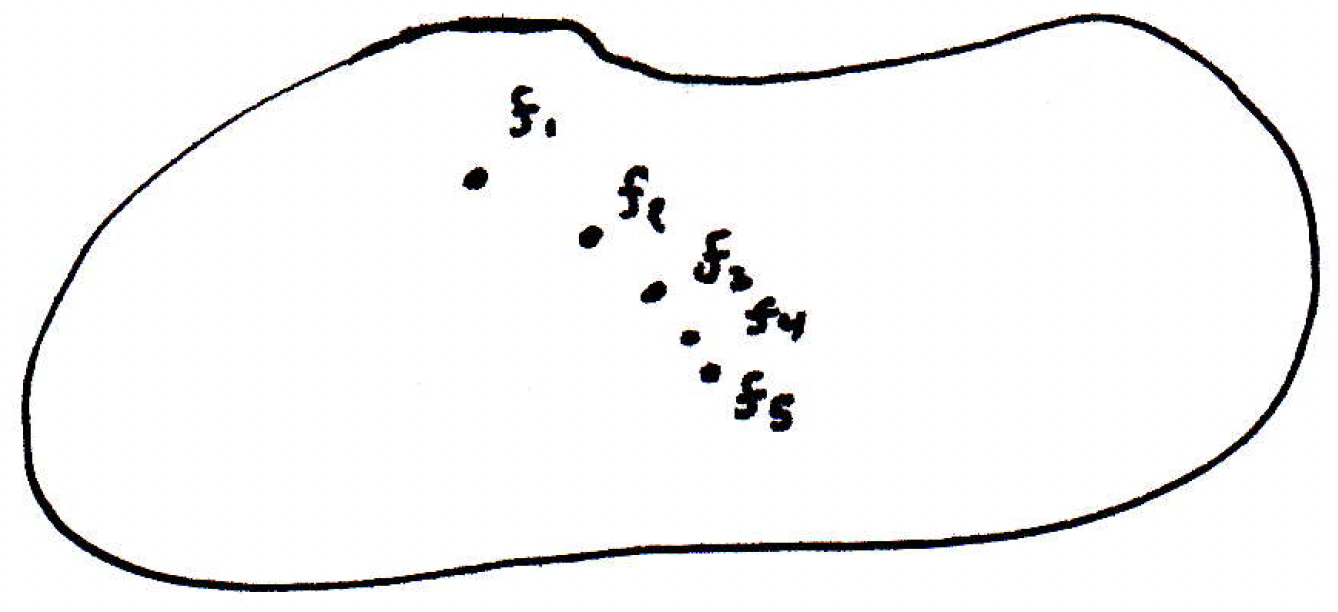

Note about metric spaces: What we've done is to place all of the functions in their own metric space: by defining $\|f - g\| = \sup_{x \in E} |f(x) - g(x)|$ to be the distance on this space, we have our distance function (i.e., the supremum distance) for our metric space. The concept of convergence just discussed is the "usual convergence" in the metric space $\mathscr{C}(E)$, where $\mathscr{C}(E)$ represents the continuous bounded functions on $E$. So really we're just letting $d(f, g) = \|f - g\|$. So what's so great about this metric space? We are thinking about every function as a point in its own space:

So uniform convergence is just like regular convergence in this space. So if we have a sequence of functions, must the sequence converge? Not necessarily. Well, what would we like to know about the space above in order to guarantee that Cauchy sequences converge? Is it complete? Here's a big fact from the next course in analysis: The space of functions $\mathscr{C}(E)$ is complete. So, in fact, we have a Cauchy criterion. This will allow us to decide, without knowing what the limit is, whether or not a limit exists: we just check whether the functions form a Cauchy sequence. That's a theorem.

-

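Theorem about convergence of sequences of functions: The sequence $\{f_n\}$ converges uniformly on $E$ (i.e., $f_n \rightrightarrows f$ for some $f$) if and only if for every $\varepsilon > 0$ there exists $N$ such that for all $m, n \geq N$ and for all $x \in E$, we have $|f_n(x) - f_m(x)| \leq \varepsilon$. (Note the replacement of the double norm signs: $\|f_n - f_m\| \leq \varepsilon$ says the same thing.) This is the Cauchy criterion for uniform convergence.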

-

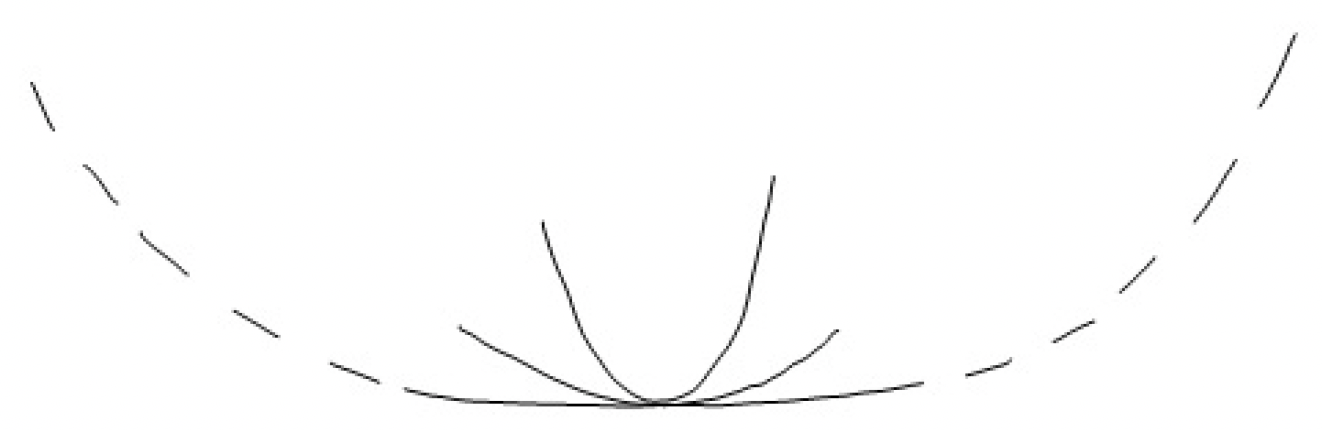

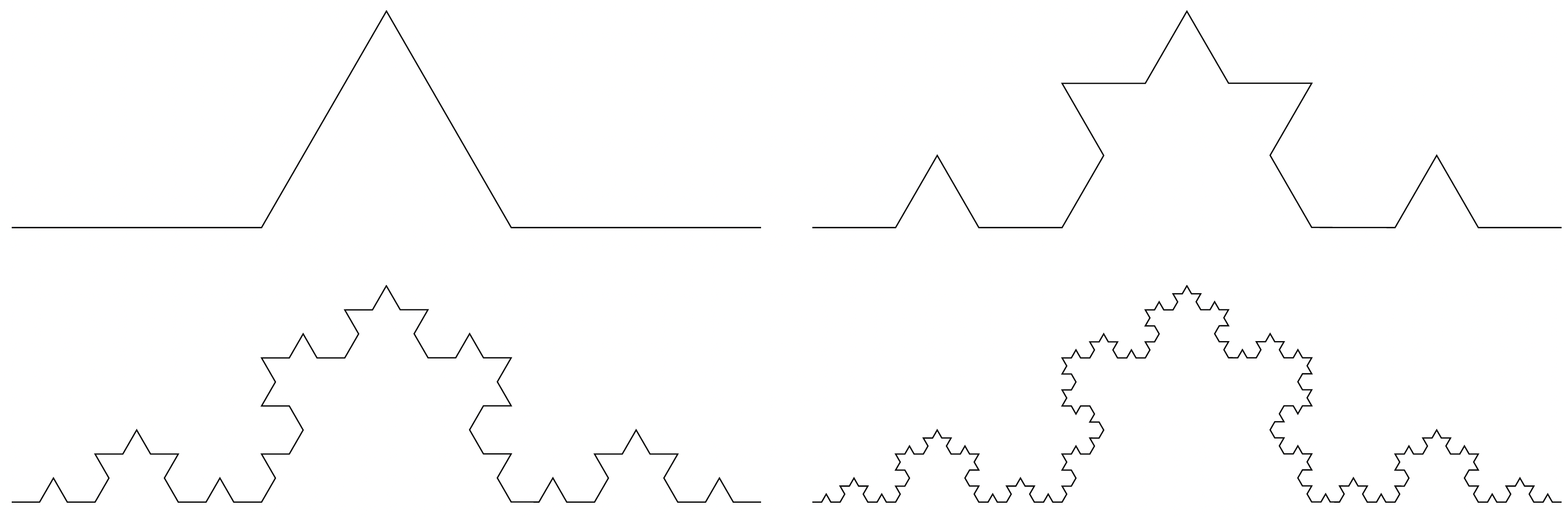

Example (Koch snowflake): Consider $\{f_n\}$, where the first four functions of the sequence look as follows (top left to bottom right, respectively):

This is the construction of the Koch snowflake. It's a fractal-like construction. But what do people say the snowflake is? Well, it's the curve you get by taking the limit of these functions. But how do we know the limit exists? How do we know the sequence is Cauchy? Successive distances get smaller and smaller. In fact, for a fixed $\varepsilon > 0$, past some point all of the terms will be close (or simply $\|f_n - f_m\| < \varepsilon$). Essentially, by construction, we see that $\{f_n\}$ is Cauchy. (To be fully careful, we would define the functions precisely to ensure these conditions are met.) So it converges, and we know the fractal limit exists. Of course, now the question is: what can we say about the limit? Here's a theorem.
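To see "successive distances get smaller and smaller" concretely, here is a minimal Python sketch that builds each stage $f_n$ of the Koch curve (one side of the snowflake, running from 0 to 1) as a piecewise-linear map $[0, 1] \to \mathbb{R}^2$, with points stored as complex numbers, and estimates $\|f_{n+1} - f_n\|$ on a grid. The parametrization (each of the $4^n$ segments traversed at constant speed over a $t$-interval of length $4^{-n}$) and all function names are our own assumptions; the lecture does not fix them. Under this parametrization the distances should start at $\sqrt{3}/6$ and shrink by a factor of 3 at each stage, so $\|f_m - f_n\| \le \sum_{k \ge n} \|f_{k+1} - f_k\|$ is the tail of a geometric series and the sequence is Cauchy.

```python
import cmath
import math


def koch_vertices(level):
    """Vertices of stage `level` of the Koch curve from 0 to 1, as complex numbers."""
    pts = [0j, 1 + 0j]
    turn = cmath.exp(1j * math.pi / 3)  # rotation by +60 degrees
    for _ in range(level):
        refined = []
        for a, b in zip(pts, pts[1:]):
            step = (b - a) / 3
            # Replace segment a->b by a -> p1 -> peak -> p3 -> b.
            refined.extend([a, a + step, a + step + step * turn, a + 2 * step])
        refined.append(pts[-1])
        pts = refined
    return pts


def make_koch(level):
    """Return f_level: [0, 1] -> C, traversing each of the 4**level segments
    at constant speed on a t-interval of length 4**-level (our parametrization)."""
    pts = koch_vertices(level)
    m = len(pts) - 1  # number of segments, 4**level

    def f(t):
        k = min(int(t * m), m - 1)  # segment index; t = 1 uses the last segment
        s = t * m - k               # position within the segment, in [0, 1]
        return pts[k] + s * (pts[k + 1] - pts[k])

    return f


def sup_distance(f, g, samples):
    """Grid estimate of ||f - g|| = sup_t |f(t) - g(t)| over [0, 1] (a lower bound in general)."""
    return max(abs(f(j / samples) - g(j / samples)) for j in range(samples + 1))


dists = []
for n in range(5):
    samples = 2 * 4 ** (n + 1)  # fine enough to hit the peaks added at stage n + 1
    dists.append(sup_distance(make_koch(n), make_koch(n + 1), samples))
for n, d in enumerate(dists):
    print(f"||f_{n + 1} - f_{n}|| ~ {d:.6f}")
```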

-

Theorem: If $f_n \to f$ uniformly, with all $f_n$ continuous, then $f$ is continuous.

Is this true in general for pointwise convergence? No. But it is true for uniform convergence. The ribbon kind of convergence helps us: it means that the limit is, in fact, continuous. What does that mean for the snowflake curve? The limiting function is continuous because of this theorem. So the Koch snowflake is a continuous curve. Let's prove this.

-

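Theorem proof: Here's the idea: Consider the operative bound (from the triangle inequality)

$$|f(x) - f(y)| \leq |f(x) - f_n(x)| + |f_n(x) - f_n(y)| + |f_n(y) - f(y)|,$$

where we let $A = |f(x) - f_n(x)|$, $B = |f_n(x) - f_n(y)|$, and $C = |f_n(y) - f(y)|$ for ease of reference. So our operative bound becomes

$$|f(x) - f(y)| \leq A + B + C.$$

Fix $\varepsilon > 0$ and a point $x$. First choice: by uniform convergence, choose $n$ so that $|f_n(t) - f(t)| < \varepsilon/3$ for all $t$. So $A < \varepsilon/3$ and $C < \varepsilon/3$, whatever $y$ is. What's the second choice? This $f_n$ is continuous. Thus, there exists $\delta > 0$ such that if $|x - y| < \delta$ then $B < \varepsilon/3$. So for every $\varepsilon > 0$, we found a $\delta > 0$ such that

$$|x - y| < \delta \implies |f(x) - f(y)| < \frac{\varepsilon}{3} + \frac{\varepsilon}{3} + \frac{\varepsilon}{3} = \varepsilon,$$

as desired. We will have one last application.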

-

Theorem: There exists a function that is space-filling. That is, we claim there is a continuous function from the interval $[0, 1]$ into the "box" $[0, 1] \times [0, 1]$ that completely fills out the entire box: it hits every point. How is that possible? We have a compact interval $[0, 1]$, and we're saying its image will fill out the whole box? Well, it's actually quite easy to show using the above theorem because we'll just construct the space-filling curve as the limit of a uniformly convergent sequence of continuous functions. And we'll get uniform convergence by showing the sequence is Cauchy.

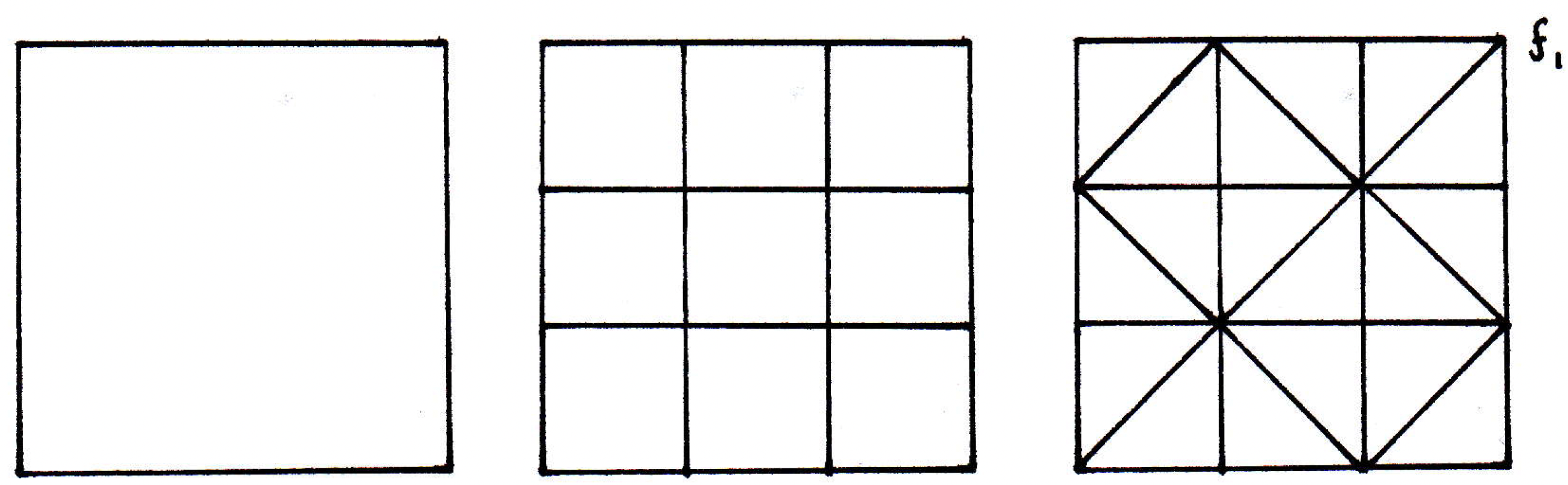

The basic idea is to start with the box, divide it up into thirds, and then wind your way through:

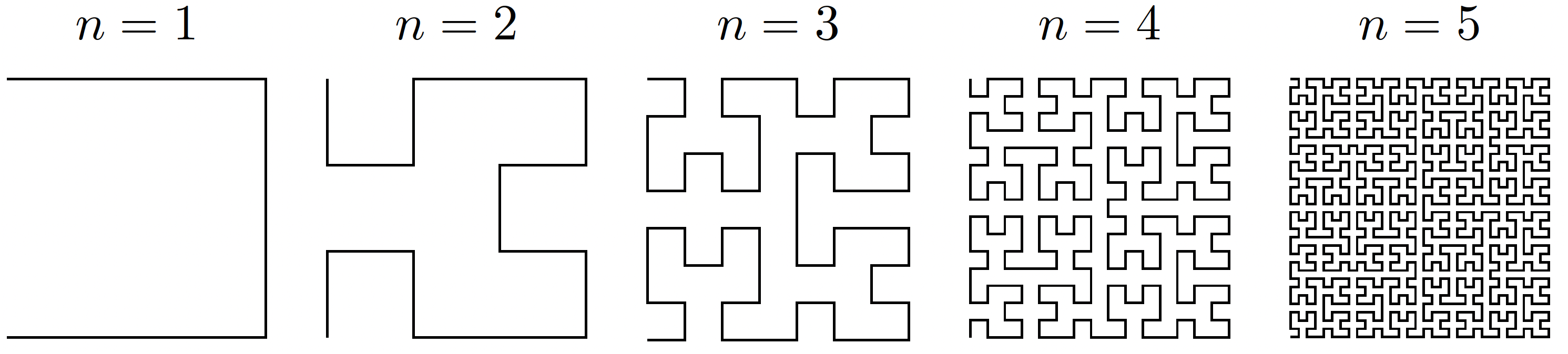

There are many ways to do this. The above is just an illustration of how to get started with one approach. Given the first function we constructed, what will the next one look like? The basic idea is to now cut each of the nine little squares into tic-tac-toe grids themselves (so now you would have 81 little squares in total) and replace each of those lines with the construction of the far right figure above. And keep doing this. Doing so will fill the box. Why does this converge uniformly? Well, you can convince yourself that past some point, for each fixed time $t$, the images $f_n(t)$ all stay within one little box, so the sequence is uniformly Cauchy. So it must converge uniformly to a limit $f$, which is continuous by the theorem above, and you can show that every point in the box is actually $f(t) = \lim_{n \to \infty} f_n(t)$ for some $t \in [0, 1]$. So it fills out all of the space, and there exists a space-filling curve. Another space-filling curve is the Hilbert curve, which looks like the following for the first five stages:

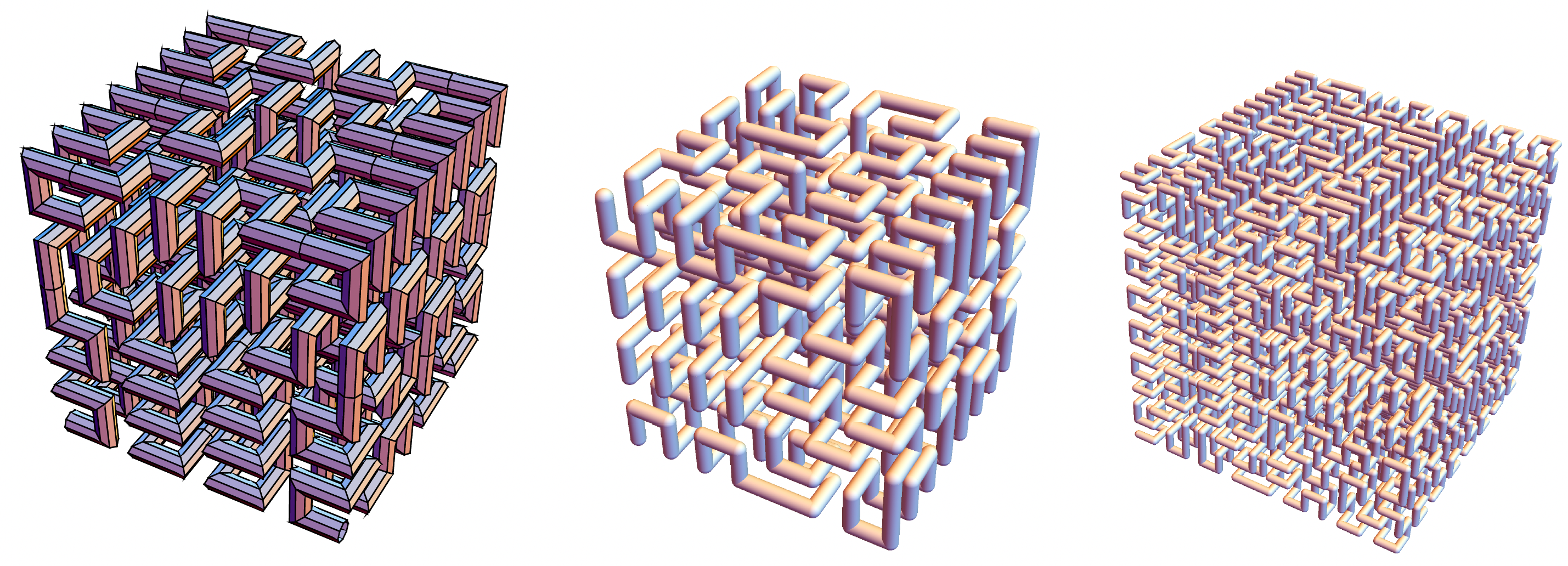

We can actually construct space-filling curves like these in higher dimensions as well.
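The thirds-based construction above is awkward to code briefly, so here is a sketch of the Hilbert curve mentioned above instead, using a well-known iterative algorithm that converts a position $d$ along the stage-$k$ path into grid coordinates (the function names are ours). It checks two facts about stage $k$: the path visits every one of the $4^k$ cells of a $2^k \times 2^k$ grid exactly once, and consecutive cells share an edge. Scaled to the unit square, every point of the box is then within one cell diagonal $\sqrt{2}/2^k$ of the stage-$k$ path, which is the finite-stage shadow of "filling the box."

```python
def rotate_quadrant(s, x, y, rx, ry):
    """Rotate/flip a quadrant of side s so the sub-curve is oriented correctly."""
    if ry == 0:
        if rx == 1:
            x, y = s - 1 - x, s - 1 - y
        x, y = y, x
    return x, y


def hilbert_d2xy(order, d):
    """Map index d in [0, 4**order) to cell (x, y) of the stage-`order` Hilbert curve."""
    side = 2**order
    x = y = 0
    s = 1
    t = d
    while s < side:
        rx = 1 & (t // 2)
        ry = 1 & (t ^ rx)
        x, y = rotate_quadrant(s, x, y, rx, ry)
        x += s * rx
        y += s * ry
        t //= 4
        s *= 2
    return x, y


def hilbert_path(order):
    """The cells visited, in order, by the stage-`order` Hilbert curve."""
    return [hilbert_d2xy(order, d) for d in range(4**order)]


for order in range(1, 5):
    path = hilbert_path(order)
    side = 2**order
    visits_all = set(path) == {(x, y) for x in range(side) for y in range(side)}
    adjacent = all(abs(x1 - x2) + abs(y1 - y2) == 1
                   for (x1, y1), (x2, y2) in zip(path, path[1:]))
    print(order, len(path), visits_all, adjacent)
```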